Solving real-world scientific research problems requires deep domain understanding, access to public data sets, and complex computational analysis with advanced visualizations. Using cloud computing and RLCatalyst Research Gateway, we explain how to simplify this process with a common Earth Sciences use case: atmospheric modelling and analysis.

2021 Blog, AWS Service, Blog, Featured

Non-scientific tasks such as setting up instances, installing software libraries, getting models to compile, and preparing input data are among the biggest pain points for atmospheric scientists, or any scientist for that matter. These tasks demand strong technical skills and divert scientists from their core work of analysis and research data compilation. Further, some of these tasks require high-performance computation, complicated software, and large data sets. Lastly, researchers need a real-time view of their actual spending, as research projects are often budget-bound. In partnership with AWS, Relevance Lab helps researchers “focus on science and not servers” with the RLCatalyst Research Gateway (RG) product.

Why RLCatalyst Research Gateway?

Speeding up scientific research using the AWS cloud is part of a growing trend towards “Research as a Service”. However, adopting AWS Cloud can be challenging for researchers, with surprises around cost, security, governance, and choosing the right architecture. Similarly, Principal Investigators can have a hard time managing a research program’s collaboration, tracking, and control. Research institutions want to provide consistent and secure environments, standard approved products, and proper governance controls. The product was created to solve these common needs of researchers, Principal Investigators, and research institutions.

- Available on AWS Marketplace and can be consumed in either SaaS or Enterprise mode

- Provides a Self-Service Cloud Portal with the ability to manage the provisioning lifecycle of common research assets

- Gives real-time visibility into spend against the defined project budgets

- Lets the principal investigator pause or stop the project when the budget is exceeded, until a new grant is approved
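To illustrate the budget controls listed above, here is a minimal sketch of how a portal could map spend against a project budget to an action. The `ProjectBudget` class, the 80% warning threshold, and the action names are hypothetical assumptions for illustration, not RLCatalyst Research Gateway’s actual implementation.

```python
from dataclasses import dataclass
from enum import Enum


class BudgetAction(Enum):
    """Hypothetical actions a portal might take as spend approaches a budget."""
    OK = "ok"
    WARN = "warn"    # notify the principal investigator
    PAUSE = "pause"  # stop new provisioning until more funds are approved


@dataclass
class ProjectBudget:
    """Hypothetical record of a project's budget and spend to date (USD)."""
    name: str
    budget_usd: float
    spend_usd: float
    warn_ratio: float = 0.8  # assumed warning threshold: 80% of budget

    def utilization(self) -> float:
        if self.budget_usd <= 0:
            raise ValueError("budget must be positive")
        return self.spend_usd / self.budget_usd

    def action(self) -> BudgetAction:
        used = self.utilization()
        if used >= 1.0:
            return BudgetAction.PAUSE
        if used >= self.warn_ratio:
            return BudgetAction.WARN
        return BudgetAction.OK


if __name__ == "__main__":
    for project in (
        ProjectBudget("geos-chem", budget_usd=1000, spend_usd=450),
        ProjectBudget("genomics", budget_usd=500, spend_usd=420),
        ProjectBudget("hpc-cfd", budget_usd=2000, spend_usd=2150),
    ):
        print(f"{project.name}: {project.utilization():.0%} used -> {project.action().value}")
```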

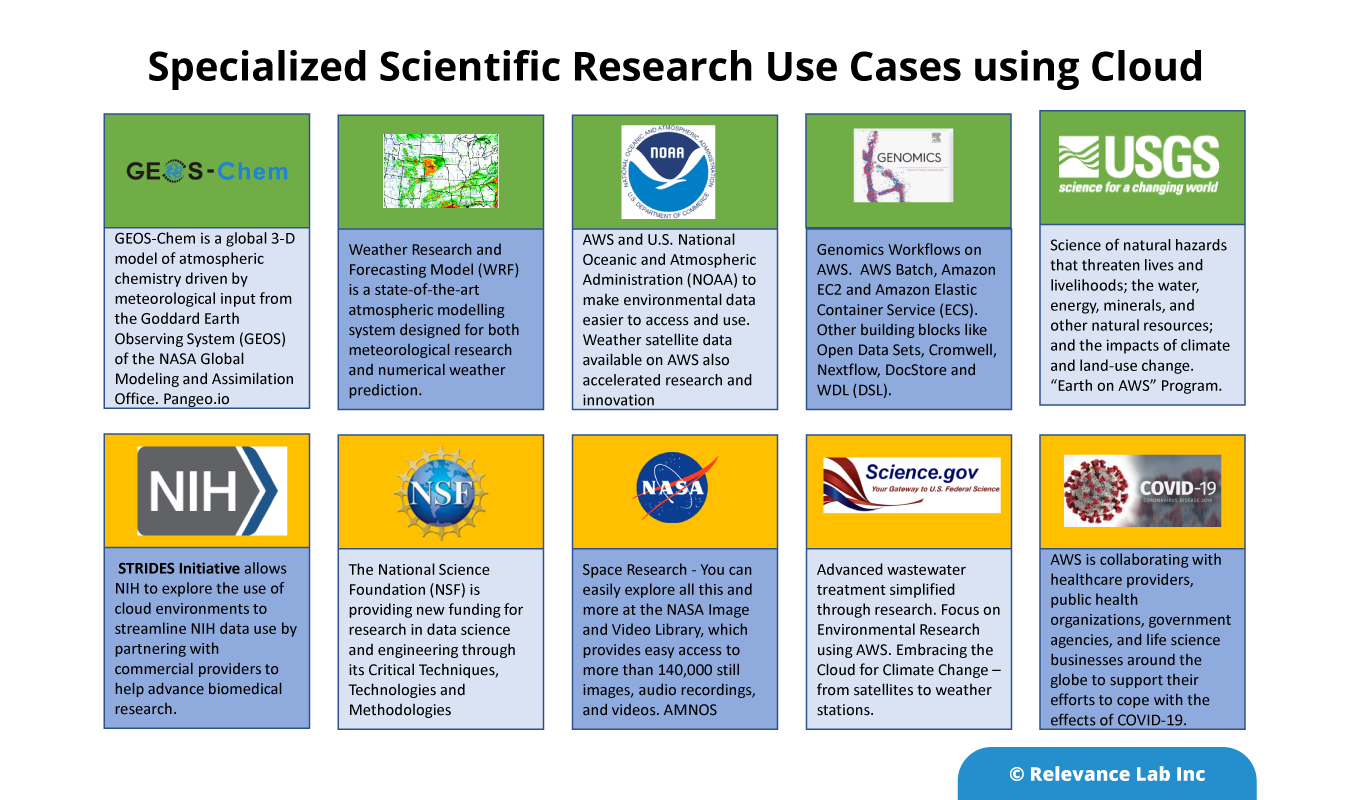

In this blog, we explain how the product has been used to solve a common research problem with GEOS-Chem, a model used in Earth Sciences. The process starts with access to large data sets in public S3 buckets, followed by creating an on-demand compute instance with the application preloaded, copying the latest data for analysis, running the analysis, storing the output data, analyzing it with specialized AI/ML tools, and finally deleting the instances. Researchers face this scenario daily, and the product demonstrates a simple, frictionless Self-Service capability to handle it with tight controls on cost and compliance.

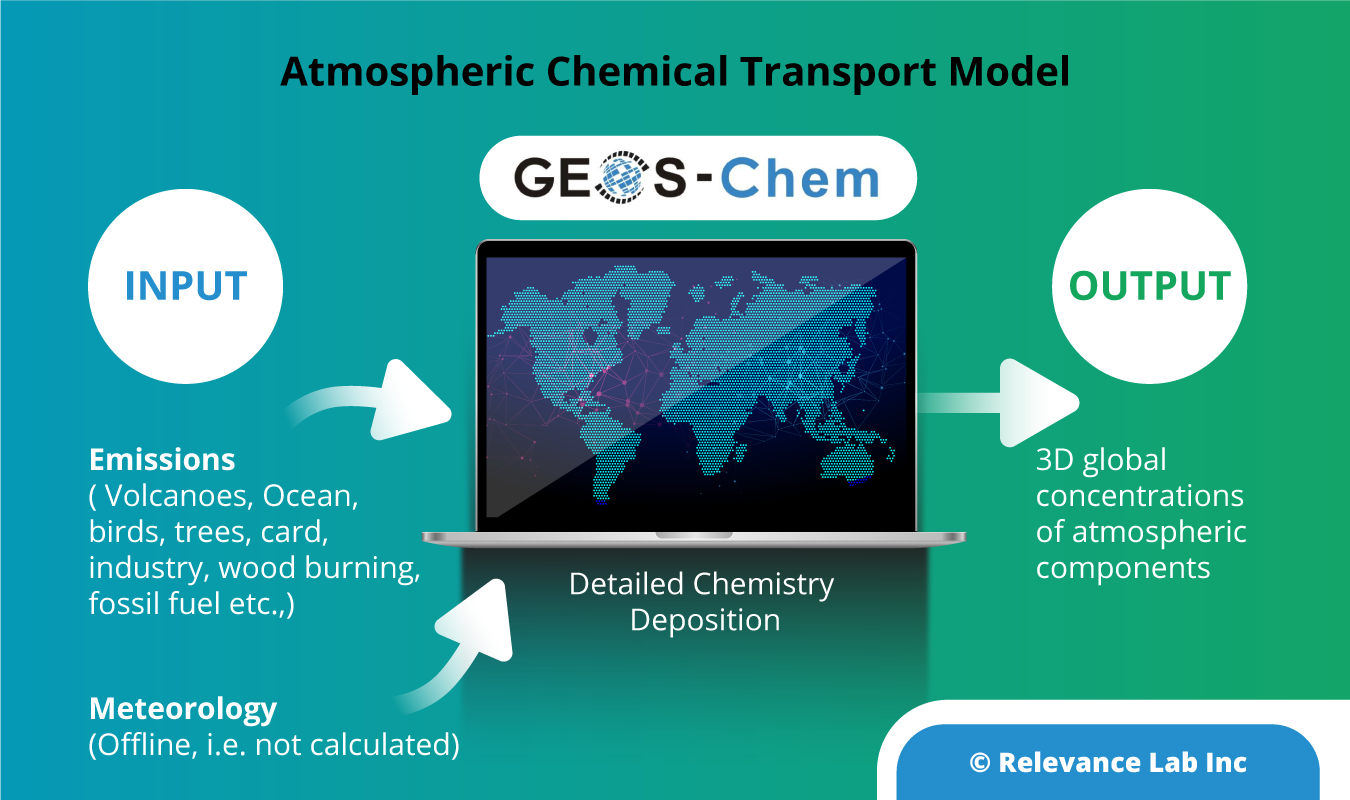

GEOS-Chem enables simulations of atmospheric composition on local to global scales. It can be used offline as a 3-D chemical transport model driven by assimilated meteorological observations from the Goddard Earth Observing System (GEOS) of the NASA Global Modeling and Assimilation Office (GMAO).

The figure below shows the basic structure of GEOS-Chem input and output analysis.

Because this is a common use case, researchers have published documentation on how to run GEOS-Chem on AWS Cloud. The product makes the process simpler using a Self-Service Cloud portal. To learn more about similar use cases and advanced computing options, refer to AWS HPC for Scientific Research.

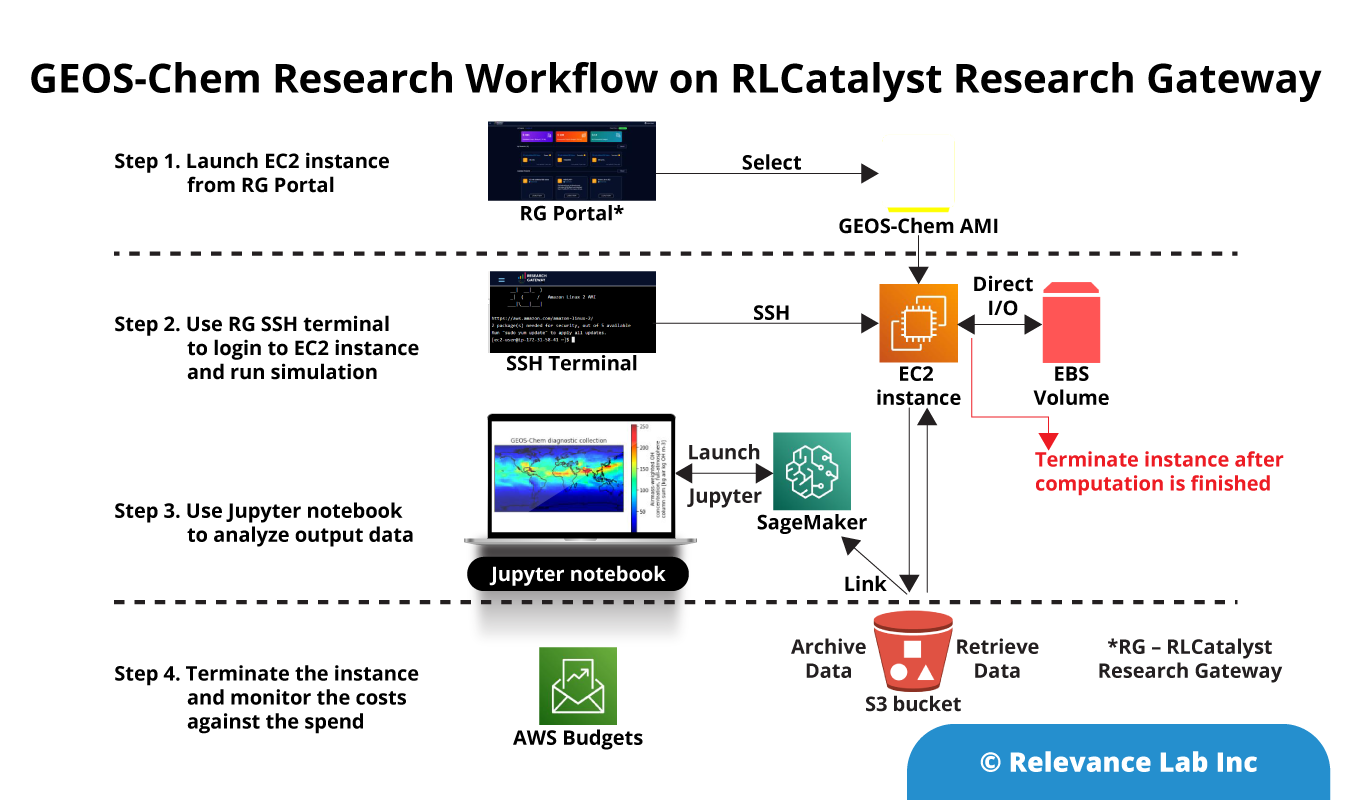

Steps for GEOS-Chem Research Workflow on AWS Cloud

Prerequisites for the researcher before starting data analysis:

- A valid AWS account and access to the RG portal

- A publicly accessible S3 bucket containing the large research data sets

- An additional EBS volume for your ongoing operational research work. (For occasional usage, it is recommended to keep a snapshot in S3 instead, for better cost management.)

- A pre-provisioned SageMaker Jupyter notebook to analyze output data

Once these are in place, the steps to execute this use case are:

- Log in to the RG Portal and select the GEOS-Chem project

- Launch an EC2 instance with the GEOS-Chem AMI

- Log in to the EC2 instance using SSH and configure the AWS CLI

- Use the AWS CLI to connect to the public S3 bucket and list the NASA-NEX data

- Run the simulation and copy the output data to your own S3 bucket

- Link that S3 bucket to an Amazon SageMaker instance and launch a Jupyter notebook to analyze the output data

- Once done, terminate the EC2 instance and check the cost spent on the use case

- All costs related to GEOS-Chem project and researcher consumption are tracked automatically
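The CLI steps above (listing the public data, then copying results to your own bucket) can be scripted. The sketch below builds standard `aws s3` commands and only prints them unless `dry_run=False`. It assumes the AWS CLI is installed and configured on the instance; the bucket names and prefixes are hypothetical placeholders, and `--no-sign-request` is used only for anonymous reads from the public bucket.

```python
from __future__ import annotations

import shlex
import subprocess

# Hypothetical placeholders: substitute the real public data bucket and your own bucket.
PUBLIC_BUCKET = "example-public-research-data"
OUTPUT_BUCKET = "example-my-research-output"


def list_public_data_cmd(bucket: str, prefix: str = "") -> list[str]:
    """List a public bucket anonymously (--no-sign-request skips credentials)."""
    return ["aws", "s3", "ls", f"s3://{bucket}/{prefix}", "--no-sign-request"]


def fetch_input_cmd(bucket: str, prefix: str, local_dir: str) -> list[str]:
    """Copy input data from the public bucket to the instance's EBS volume."""
    return ["aws", "s3", "cp", f"s3://{bucket}/{prefix}", local_dir,
            "--recursive", "--no-sign-request"]


def upload_output_cmd(local_dir: str, bucket: str, prefix: str) -> list[str]:
    """Sync simulation output to your own bucket (uses the configured credentials)."""
    return ["aws", "s3", "sync", local_dir, f"s3://{bucket}/{prefix}"]


def run(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command; execute it only when dry_run is False."""
    print("$", shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(list_public_data_cmd(PUBLIC_BUCKET))
    run(fetch_input_cmd(PUBLIC_BUCKET, "input/", "/data/input"))
    # ... run the GEOS-Chem simulation here ...
    run(upload_output_cmd("/data/output", OUTPUT_BUCKET, "geos-chem/run-001/"))
```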

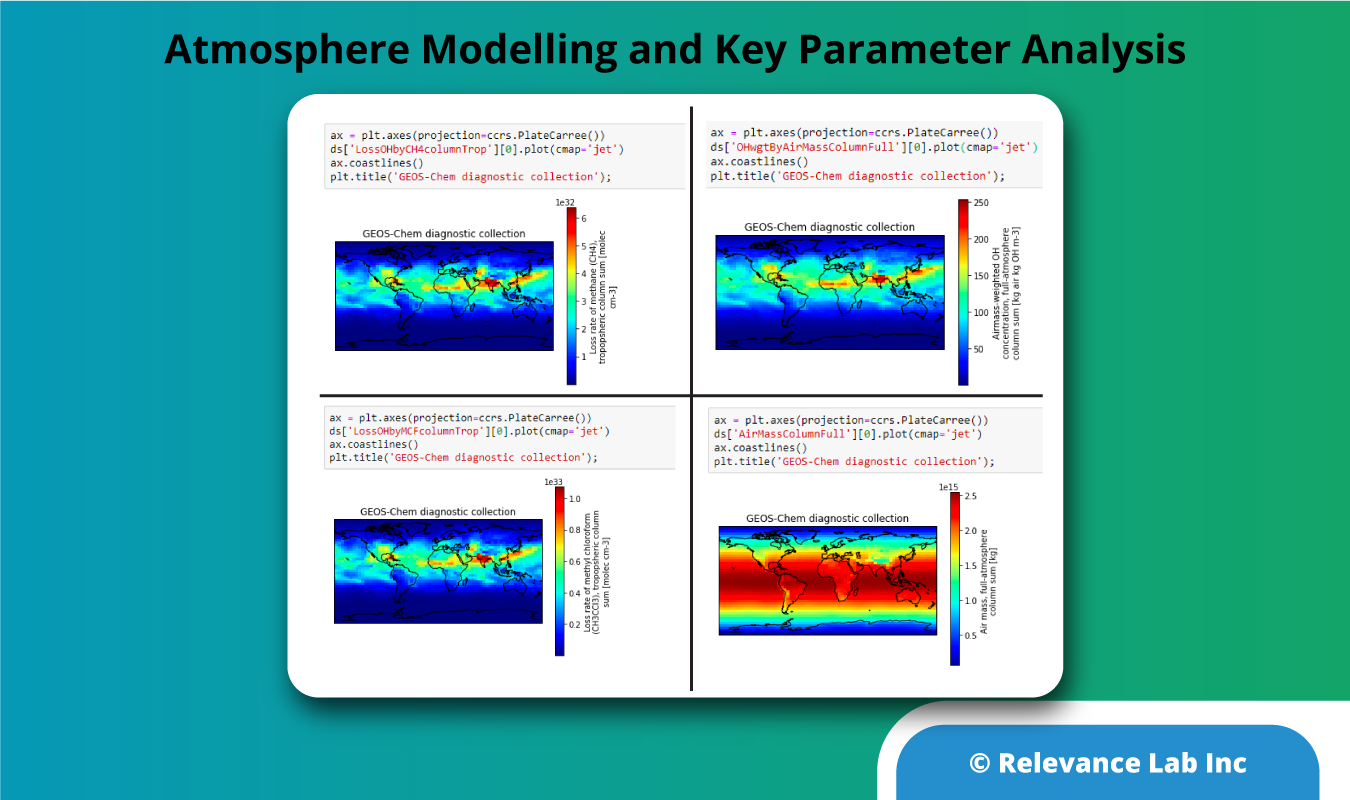

Sample Output Analysis

Once you load the output files in the Jupyter notebook, it processes them and presents the output data visually, as shown in the sample below. The researcher can then create a snapshot, store it in S3, and terminate the EC2 instance (without deleting the additional EBS volume created along with the instance).

Output for analyzing the loss rate and air mass of the hydroxyl radical (OH) in atmospheric science.

Summary

Scientific computing can take advantage of cloud computing to speed up research, scale up computing capacity almost instantly, and do all this with much better cost-efficiency. Researchers no longer need to worry about the expertise required to set up infrastructure in AWS, as they can leave this to tools like RLCatalyst Research Gateway, thus compressing the time it takes to complete their research computing tasks.

The steps demonstrated in this blog can easily be replicated for other, similar research domains. They can also be used to onboard new researchers with pre-built solution stacks offered in an easy-to-consume form. RLCatalyst Research Gateway is available in SaaS mode from AWS Marketplace, and research institutions can continue to use their existing AWS accounts to configure and enable the solution for more effective governance of scientific research.

To learn more about GEOS-Chem use cases, click here.

If you want to learn more about the product or book a live demo, feel free to contact marketing@relevancelab.com.

References

Enabling Immediate Access to Earth Science Models through Cloud Computing: Application to the GEOS-Chem Model

Enabling High‐Performance Cloud Computing for Earth Science Modeling on Over a Thousand Cores: Application to the GEOS‐Chem Atmospheric Chemistry Model

HPC Blog, 2021 Blog, AWS Service, Blog, Featured

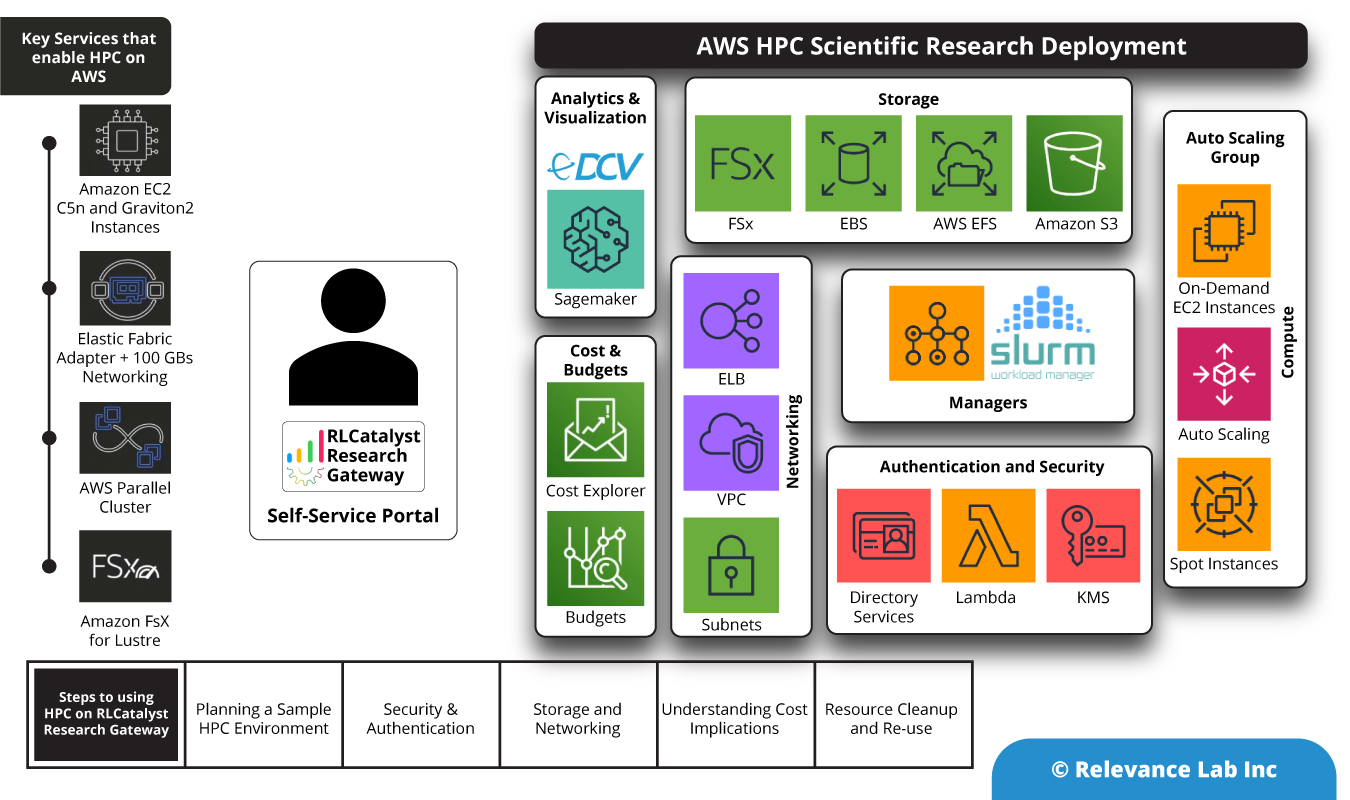

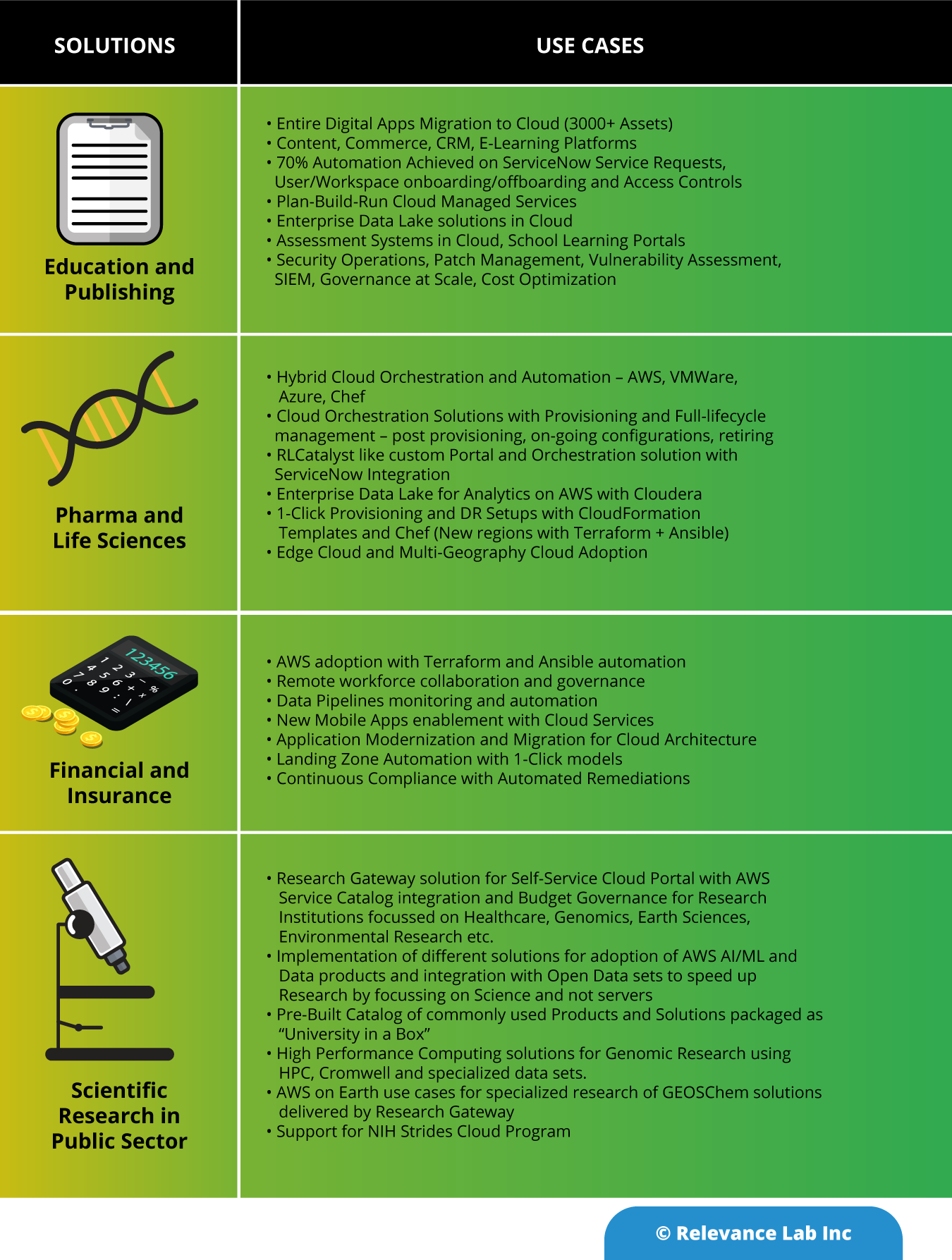

AWS provides a comprehensive, elastic, and scalable cloud infrastructure to run your HPC applications. Working with AWS to explore HPC for scientific research, Relevance Lab used its RLCatalyst Research Gateway product to provision an HPC cluster through AWS Service Catalog, with simple steps to launch a new research environment. This blog captures the steps used to launch a simple HPC 1.0 cluster on AWS and outlines a roadmap for extending the functionality to more advanced use cases with AWS ParallelCluster.

AWS delivers an integrated suite of services that provides everything needed to build and manage HPC clusters in the cloud. These clusters are deployed across various industry verticals to run the most compute-intensive workloads. HPC on AWS covers a wide range of applications, from traditional ones such as genomics, computational chemistry, financial risk modeling, computer-aided engineering, weather prediction, and seismic imaging to newer ones such as machine learning, deep learning, and autonomous driving. In the US alone, multiple organizations across different specializations are choosing the cloud to collaborate on scientific research.

Similar programs exist across geographies and institutions in the EU and Asia, along with country-specific Public Sector programs. Our focus is to work with AWS and regional scientific institutions to bring the power of supercomputers to day-to-day researchers in a cost-effective manner, with proper governance and tracking. With Self-Service models, the focus also needs to shift from worrying about computation to data, workflows, and analytics, which calls for a new paradigm: serverless scientific computing, covered in a later section.

Relevance Lab RLCatalyst Research Gateway provides a Self-Service Cloud portal to provision AWS products with a 1-Click model based on AWS Service Catalog. More complex AWS products like HPC need a multi-step provisioning model and post-provisioning actions that are not always possible using standard AWS APIs. In these situations, which require complex orchestration and post-provisioning automation, RLCatalyst BOTs provide a flexible and scalable solution that complements the base Research Gateway features.

Building blocks of HPC on AWS

AWS offers various services that make it easy to set up an HPC environment.

An HPC solution in AWS uses the following components as building blocks.

- EC2 instances are used for Master and Worker nodes. The master nodes can use On-Demand instances and the worker nodes can use a combination of On-Demand and Spot Instances.

- The software for the manager nodes is built as an AMI and used for the creation of Master nodes.

- The agent software for the managers to communicate with the worker nodes is built into a second AMI that is then used for provisioning the Worker nodes.

- Data is shared between nodes using a shared file system such as Amazon FSx for Lustre.

- Long-term storage uses Amazon S3.

- Nodes are scaled using Auto Scaling.

- AWS KMS manages the keys used for encryption and decryption.

- AWS Directory Service creates the domain used to access the HPC cluster via the UI.

- An AWS Lambda function creates the user directory.

- Elastic Load Balancing distributes incoming application traffic across multiple targets, such as Amazon EC2 instances, containers, IP addresses, Lambda functions, and virtual appliances.

- Amazon EFS, a regional service, stores data within and across multiple Availability Zones (AZs) for high availability and durability; Amazon EC2 instances can access the file system across AZs.

- Amazon VPC is used to launch the EC2 instances in a private network.

Evolution of HPC on AWS

- HPC clusters first came into existence on AWS using CfnCluster CloudFormation templates. CfnCluster creates a number of manager and worker nodes in the cluster based on the input parameters. This product can be made available through AWS Service Catalog as an item that can be provisioned from RLCatalyst Research Gateway. Cluster manager software such as Slurm, Torque, or SGE is pre-installed on the manager nodes, and the agent software is pre-installed on the worker nodes. Also pre-installed is software that provides a UI (such as NICE EngineFrame) for users to submit jobs to the cluster manager.

- AWS ParallelCluster is a newer AWS offering for provisioning an HPC cluster. It is an open-source, CLI-based option for setting up a cluster. It sets up the manager and worker nodes and also installs controlling software that watches the job queues and triggers scaling requests on the AWS side, so that the overall cluster can grow or shrink based on the size of the job queue.
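The queue-driven scaling described above reduces to one calculation: size the worker pool to the job queue, then clamp it to the configured minimum and maximum number of workers. This is a minimal sketch, assuming each job occupies one slot and a hypothetical `slots_per_node`; it is not ParallelCluster’s actual scaling code, which is considerably more involved.

```python
import math


def desired_workers(pending_jobs: int, running_jobs: int,
                    slots_per_node: int, min_nodes: int, max_nodes: int) -> int:
    """Return how many worker nodes the cluster should have.

    Assumes each job occupies one slot and each worker node offers
    `slots_per_node` slots (a simplification for illustration).
    """
    if slots_per_node <= 0 or min_nodes < 0 or max_nodes < min_nodes:
        raise ValueError("invalid scaling parameters")
    needed = math.ceil((pending_jobs + running_jobs) / slots_per_node)
    # Clamp to the min/max bounds chosen when the cluster was launched.
    return max(min_nodes, min(max_nodes, needed))


def scaling_delta(current_nodes: int, target_nodes: int) -> int:
    """Positive: scale out by this many nodes; negative: scale in."""
    return target_nodes - current_nodes


if __name__ == "__main__":
    target = desired_workers(pending_jobs=37, running_jobs=8,
                             slots_per_node=4, min_nodes=0, max_nodes=10)
    print("target workers:", target, "change:", scaling_delta(3, target))
```

Clamping to `max_nodes` is what keeps a sudden flood of queued jobs from running up an unbounded bill, while `min_nodes` can keep a warm pool for short jobs.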

Steps to Launch HPC from RLCatalyst Research Gateway

A standard HPC launch involves the following steps.

- Provide the input parameters for the cluster. These include:
  - The compute instance size for the master node (vCPUs, RAM, disk)
  - The compute instance size for the worker nodes (vCPUs, RAM, disk)
  - The minimum and maximum number of worker nodes
  - The workload manager software (Slurm, Torque, or SGE)
  - Connectivity options (SSH keys, etc.)

- Launch the product.

- Once the product is in Active state, connect to the URL in the Output parameters on the Product Details page. This connects you to the UI from where you can submit jobs to the cluster.

- You can SSH into the master nodes using the key pair selected in the Input form.
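The input form above maps naturally onto a small validated record. In this hypothetical sketch, the field names are illustrative (they are not the actual Research Gateway or CfnCluster parameter names), and the checks cover only the constraints the form implies: a supported scheduler, a sensible minimum and maximum worker count, positive disk sizes, and an SSH key pair.

```python
from __future__ import annotations

from dataclasses import dataclass

SCHEDULERS = {"slurm", "torque", "sge"}


@dataclass(frozen=True)
class ClusterRequest:
    """Hypothetical input form for an HPC cluster launch."""
    master_instance_type: str  # e.g. "c5.xlarge" (vCPUs and RAM implied by the type)
    worker_instance_type: str
    min_workers: int
    max_workers: int
    scheduler: str             # workload manager: slurm, torque or sge
    key_name: str              # EC2 key pair used to SSH into the master node
    master_disk_gb: int = 50
    worker_disk_gb: int = 50

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the request looks valid."""
        errors = []
        if self.scheduler.lower() not in SCHEDULERS:
            errors.append(f"unsupported scheduler: {self.scheduler}")
        if self.min_workers < 0:
            errors.append("min_workers must be >= 0")
        if self.max_workers < self.min_workers:
            errors.append("max_workers must be >= min_workers")
        if not self.key_name:
            errors.append("an SSH key pair is required")
        for label, size in (("master", self.master_disk_gb), ("worker", self.worker_disk_gb)):
            if size <= 0:
                errors.append(f"{label} disk size must be positive")
        return errors


if __name__ == "__main__":
    request = ClusterRequest("c5.xlarge", "c5.2xlarge", min_workers=0, max_workers=8,
                             scheduler="slurm", key_name="my-hpc-key")
    print(request.validate() or "request looks valid")
```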

RLCatalyst Research Gateway uses the CfnCluster method to create an HPC cluster. This allows the HPC cluster to be created just like any other product in the Research Gateway catalog. Although provisioning may take up to 45 minutes to complete, it creates a URL in the outputs that can then be used to submit jobs.

Advanced Use Cases for HPC

- Computational Fluid Dynamics

- Risk Management & Portfolio Optimization

- Autonomous Vehicles – Driving Simulation

- Research and Technical Computing on AWS

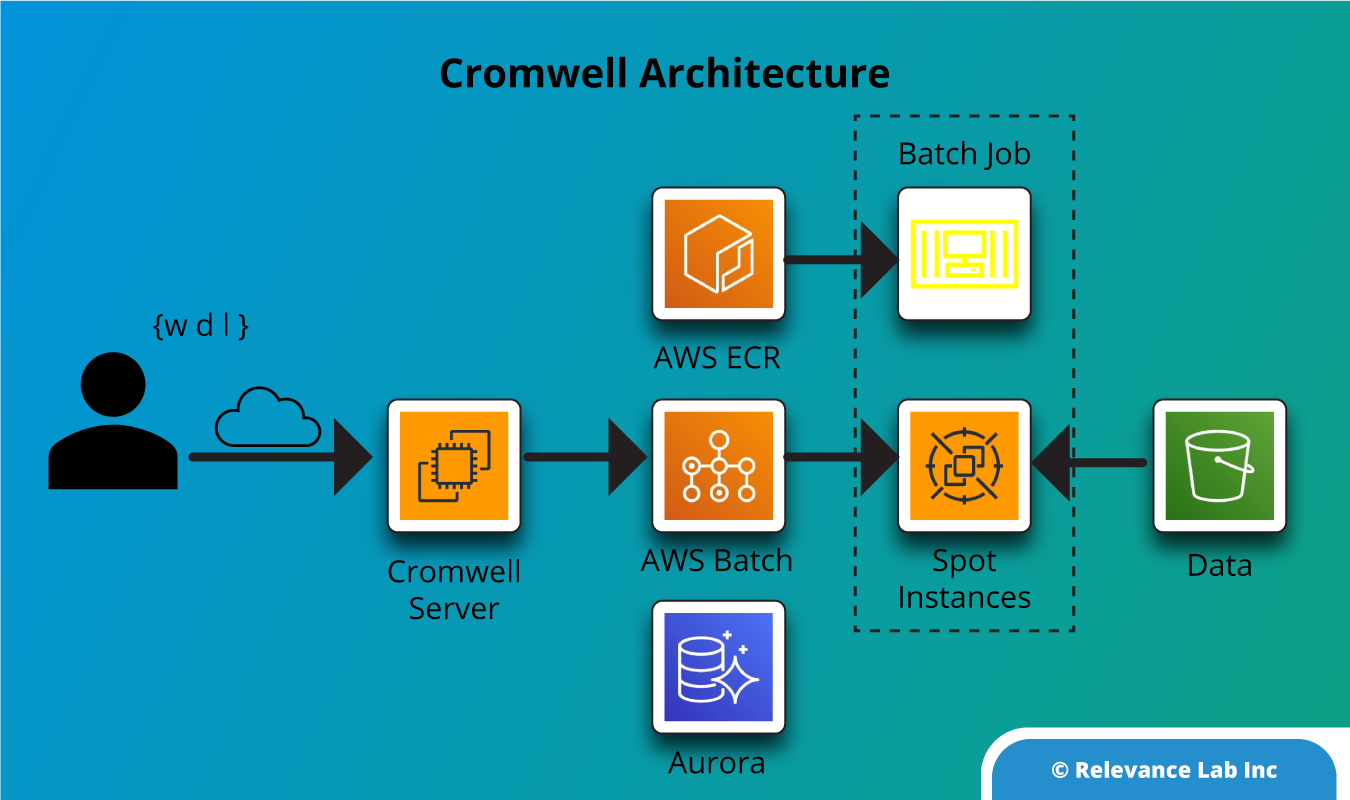

- Cromwell on AWS

- Genomics on AWS

We have specifically looked at the bioinformatics use case, where much of the research uses a Cromwell server to process workflows defined in WDL (Workflow Description Language). The Cromwell server acts as a manager that controls the worker nodes, which execute the tasks in the workflow. A typical Cromwell setup on AWS can use AWS Batch as the backend to scale the cluster up and down and execute containerized tasks on EC2 instances (On-Demand or Spot).
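As a sketch of the manager role described above, the code below builds a workflow submission for Cromwell’s REST API (`POST /api/workflows/v1` with `workflowSource` and `workflowInputs` form fields) using only the standard library. It assumes a Cromwell server on `localhost:8000` (Cromwell’s default port) and uses a toy WDL 1.0 workflow; with an AWS Batch backend configured, Cromwell would run the `say_hello` task as a container on the cluster.

```python
import json
import urllib.request
import uuid

# A tiny WDL 1.0 workflow, used only as an example payload.
HELLO_WDL = """version 1.0

workflow hello {
  input {
    String name
  }
  call say_hello { input: name = name }
}

task say_hello {
  input {
    String name
  }
  command <<<
    echo "Hello, ~{name}"
  >>>
  output {
    String greeting = read_string(stdout())
  }
  runtime {
    docker: "ubuntu:20.04"
  }
}
"""


def build_submission(server: str, wdl_source: str, inputs: dict) -> urllib.request.Request:
    """Build a multipart/form-data POST to Cromwell's /api/workflows/v1 endpoint."""
    boundary = uuid.uuid4().hex
    fields = {
        "workflowSource": ("workflow.wdl", wdl_source, "text/plain"),
        "workflowInputs": ("inputs.json", json.dumps(inputs), "application/json"),
    }
    parts = []
    for name, (filename, content, ctype) in fields.items():
        parts.append(
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{name}"; filename="{filename}"\r\n'
            f"Content-Type: {ctype}\r\n\r\n"
            f"{content}\r\n"
        )
    parts.append(f"--{boundary}--\r\n")
    return urllib.request.Request(
        url=f"{server.rstrip('/')}/api/workflows/v1",
        data="".join(parts).encode("utf-8"),
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )


def submit(request: urllib.request.Request) -> dict:
    """Send the request; Cromwell replies with JSON containing the workflow id and status."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    req = build_submission("http://localhost:8000", HELLO_WDL, {"hello.name": "world"})
    print(req.get_method(), req.full_url)
    # print(submit(req))  # uncomment when a Cromwell server is reachable
```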

Prospect of Serverless Scientific Computing and HPC

“Function as a Service” Paradigm for HPC and Workflows for Scientific Research

With the advent of serverless computing and its availability on all major cloud platforms, it is now possible to take computing that would be done on a high-performance cluster and run it as Lambda functions. The obvious advantage of this model is that the resulting virtual cluster is highly elastic and is charged only for the exact execution time of each Lambda function invoked.

One current limitation of this model is that only a few runtimes are supported natively, such as Node.js and Python, while a lot of scientific computing code is written in other languages such as C and C++. However, this is changing fast, and cloud providers are introducing new runtimes such as Go and Rust.
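To make the Function-as-a-Service model concrete, here is a minimal sketch of an embarrassingly parallel job (a Monte Carlo estimate of pi) split into independent Lambda-style handlers with the `handler(event, context)` signature used by Python Lambda functions. The fan-out is simulated locally with a thread pool; on AWS each event would be a separate function invocation, and the summed `seconds` field approximates the pay-per-execution-time billing described above. The workload and chunk sizes are illustrative assumptions.

```python
import math
import random
import time
from concurrent.futures import ThreadPoolExecutor


def handler(event, context=None):
    """Lambda-style handler: one independent chunk of a Monte Carlo estimate of pi."""
    start = time.perf_counter()
    rng = random.Random(event["seed"])  # per-invocation seed for reproducibility
    n = event["samples"]
    inside = sum(1 for _ in range(n) if rng.random() ** 2 + rng.random() ** 2 <= 1.0)
    return {"inside": inside, "samples": n, "seconds": time.perf_counter() - start}


def fan_out(chunks: int, samples_per_chunk: int, max_workers: int = 8):
    """Simulate invoking `chunks` functions in parallel, then reduce their results."""
    events = [{"seed": i, "samples": samples_per_chunk} for i in range(chunks)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(handler, events))
    inside = sum(r["inside"] for r in results)
    total = sum(r["samples"] for r in results)
    billed = sum(r["seconds"] for r in results)  # pay-per-use: sum of execution times
    return 4.0 * inside / total, billed


if __name__ == "__main__":
    estimate, billed = fan_out(chunks=8, samples_per_chunk=25_000)
    print(f"pi ~ {estimate:.4f} (error {abs(estimate - math.pi):.4f}); billed ~ {billed:.3f} s")
```

Because each chunk depends only on its own event, the same handler could be deployed unchanged and fanned out to hundreds of concurrent invocations; the reduce step stays on the client.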

Summary

Scientific computing can take advantage of cloud computing to speed up research, scale up computing capacity almost instantly, and do all this with much better cost-efficiency. Researchers no longer need to worry about the expertise required to set up infrastructure in AWS, as they can leave this to tools like RLCatalyst Research Gateway, thus compressing the time it takes to complete their research computing tasks.

To learn more about this solution or to use it for your internal needs, feel free to contact marketing@relevancelab.com.

References

Getting started with HPC on AWS

HPC on AWS Whitepaper

AWS HPC Workshops

Genomics in the Cloud

Serverless Supercomputing: High Performance Function as a Service for Science

FaaSter, Better, Cheaper: The Prospect of Serverless Scientific Computing and HPC

Working with research labs and institutions around the world, AWS and Relevance Lab are creating solutions to help researchers process complex workloads using High-Performance Computing (HPC) in the cloud.

Accelerate your Cloud adoption journey without worrying about Security, Governance and Cost Management with AWS Control Tower, AWS Security Hub and Cloud Custodian.

2021 Blog, AWS Governance, Governance360, Blog, Featured

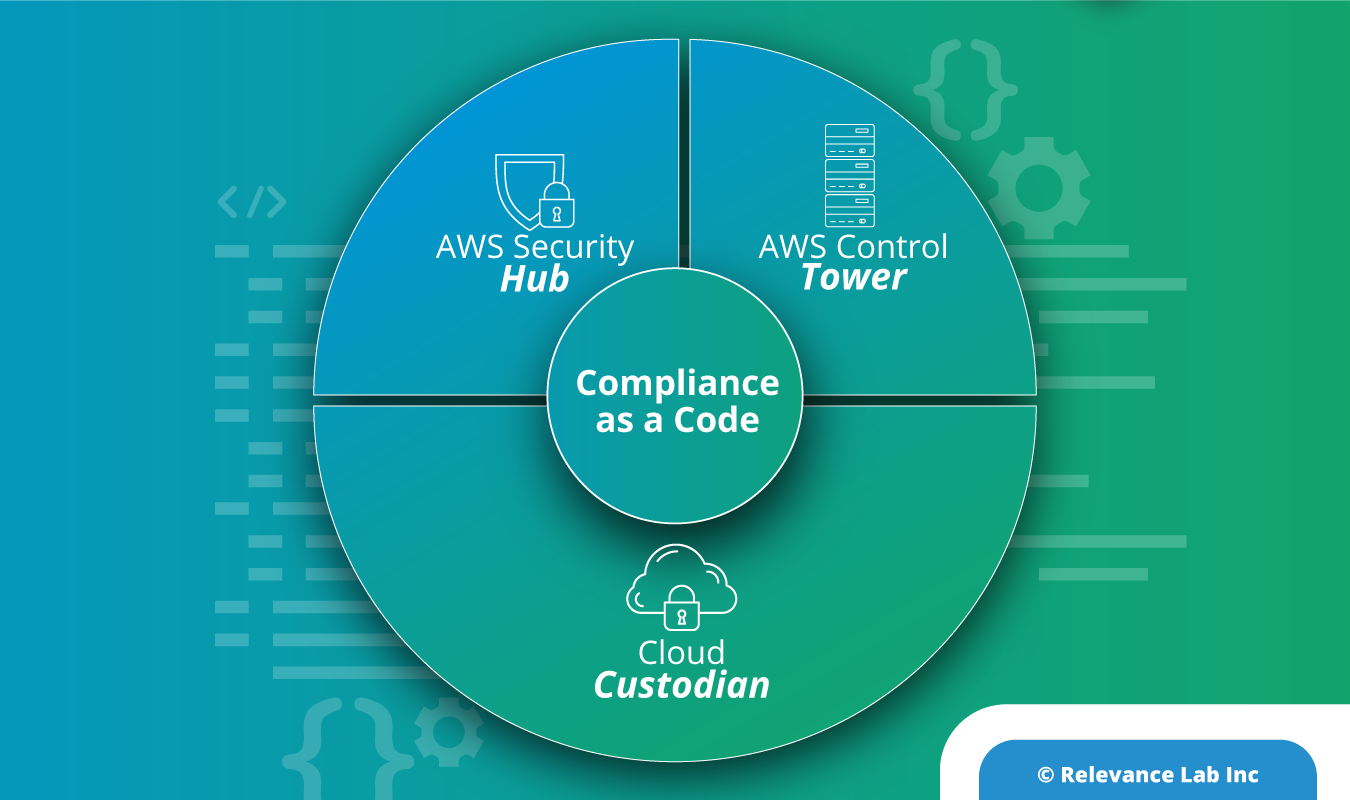

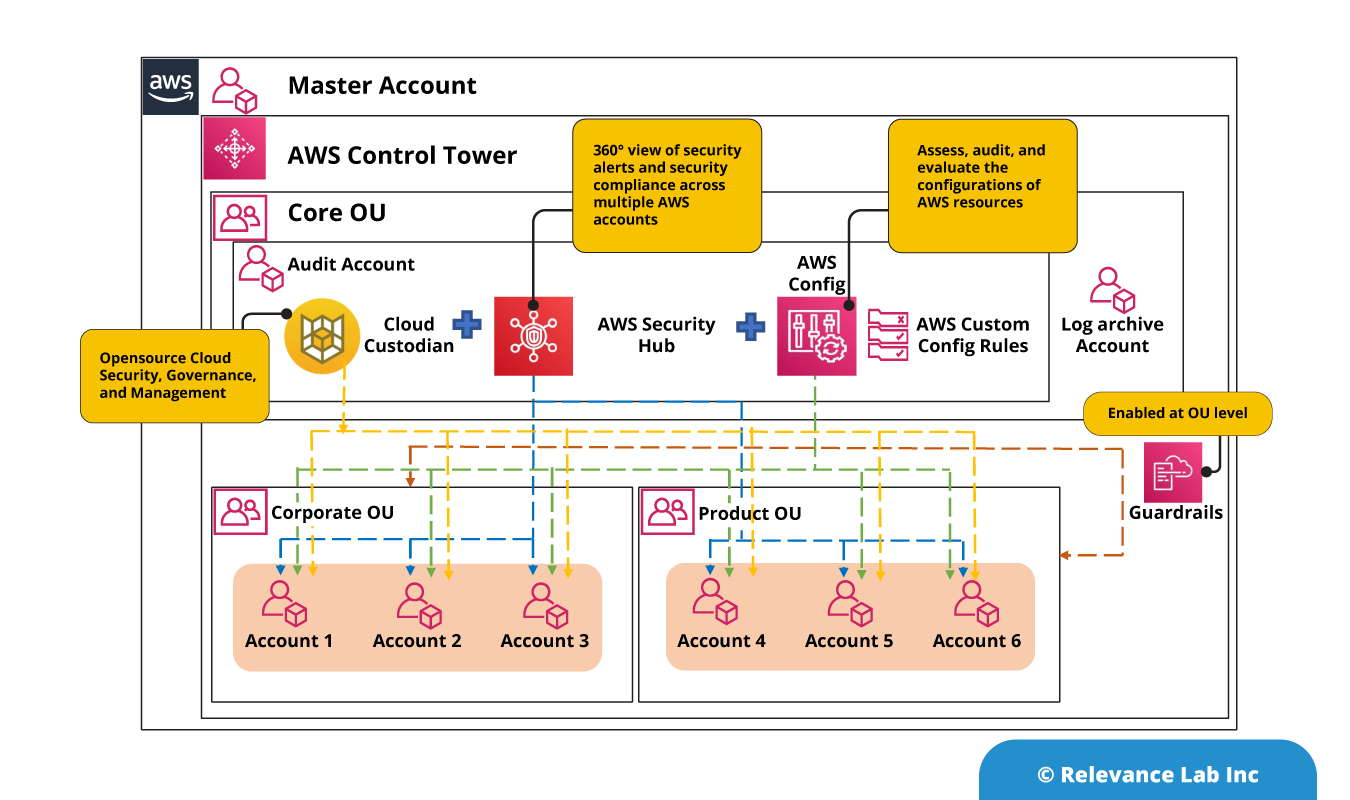

Compliance on the cloud is an important aspect of today’s world of remote working. As enterprises accelerate cloud adoption to drive frictionless business, there can be surprises in security, governance, and cost without a proper framework. Relevance Lab (RL) helps enterprises speed up workload migration to the cloud with the assurance of security, governance, and cost management, using an integrated solution built on standard AWS products and an open-source framework. The key building blocks of this solution are AWS Control Tower, Cloud Custodian, and AWS Security Hub.

Why do enterprises need Compliance as a Code?

For most enterprises, the major challenges are governance, compliance, and a lack of visibility into their cloud infrastructure. They spend enormous amounts of time, often thousands of person-hours, trying to achieve security and compliance in silos. This can be addressed by automating compliance monitoring and increasing visibility across the cloud with the right set of tools and frameworks. Relevance Lab’s Compliance as a Code framework addresses the enterprise need to automate security and compliance. Through a combination of preventive, detective, and responsive controls, we help enterprises enforce nearly continuous compliance with auto-remediation, thereby increasing overall security and reducing compliance cost.

Key tools and framework of Cloud Governance 360°

AWS Control Tower: AWS Control Tower (CT) helps organizations set up, manage, monitor, and govern a secure multi-account environment using AWS best practices. Setting up Control Tower on a new account is simpler than setting it up on an existing account. Once Control Tower is set up, the landing zone should have the following:

- 2 Organizational Units

- 3 accounts, a master account and isolated accounts for log archive and security audit

- 20 preventive guardrails to enforce policies

- 2 detective guardrails to detect config violations

Apart from this, you can customize the guardrails and implement them using AWS Config rules. For more details on Control Tower implementation, refer to our earlier blog here.

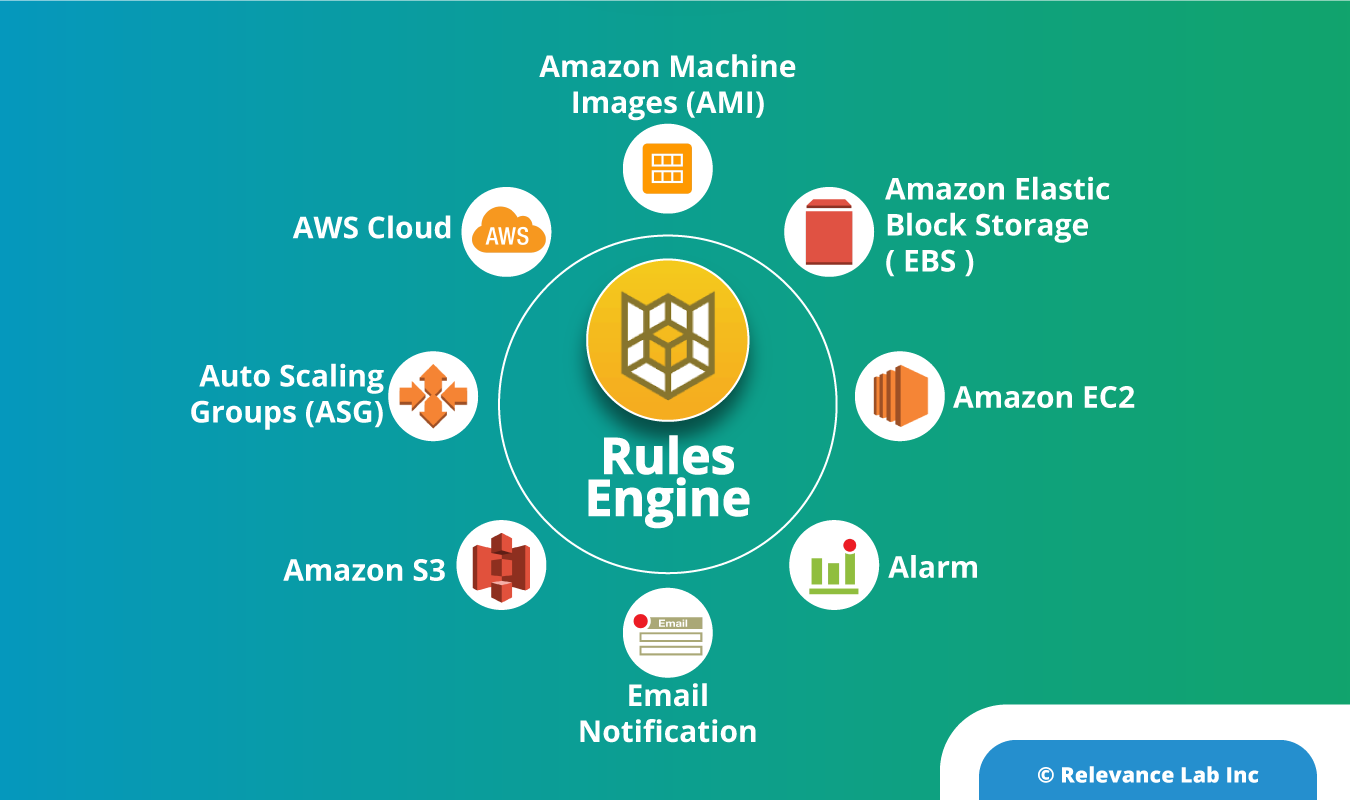

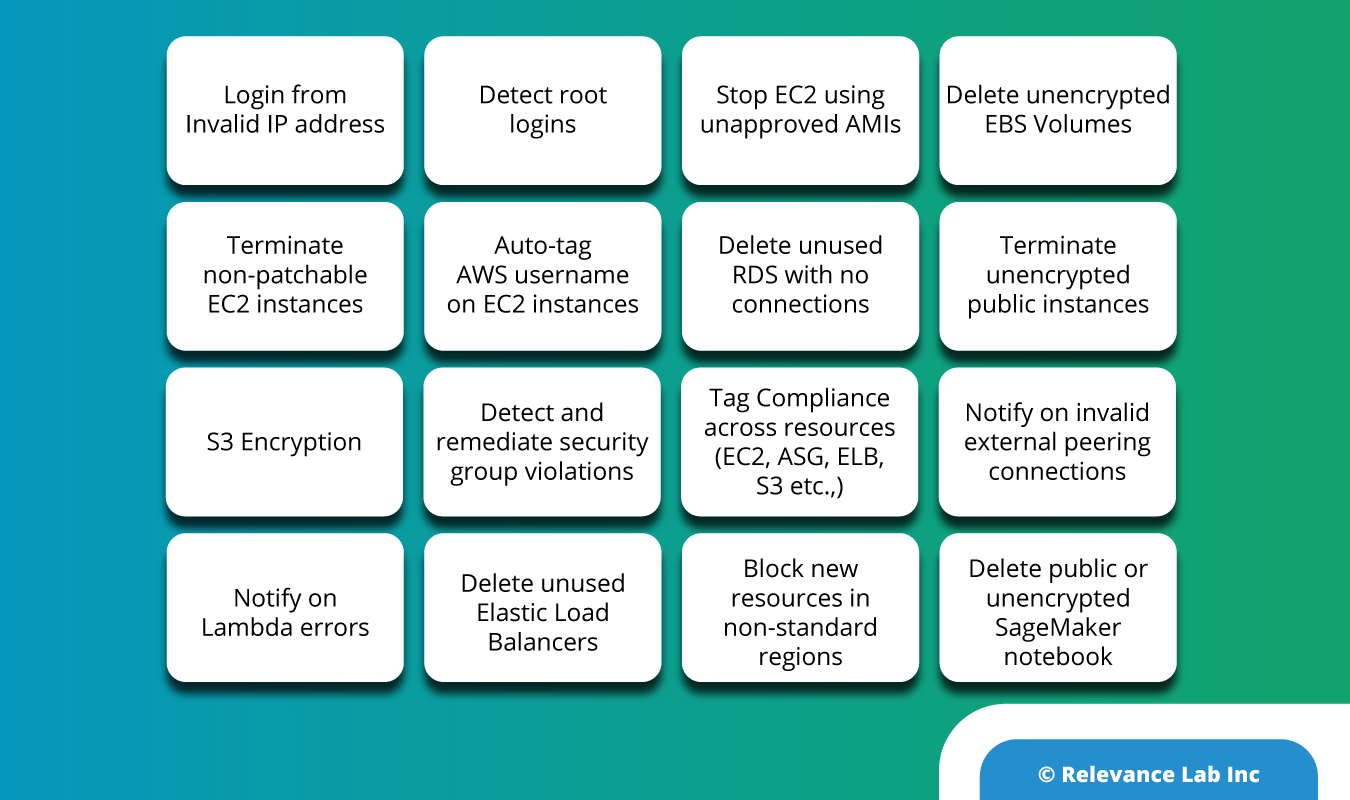

Cloud Custodian: Cloud Custodian is a tool that unifies the dozens of tools and scripts most organizations use for managing their public cloud accounts into one open-source tool. It uses a stateless rules engine for policy definition and enforcement, with metrics, structured outputs and detailed reporting for Cloud Infrastructure. It integrates tightly with serverless runtimes to provide real time remediation/response with low operational overhead.

Organizations can use Custodian to manage their cloud environments from a single tool: ensuring compliance with security and tag policies, garbage-collecting unused resources, and managing cost. Custodian follows a Compliance as Code principle to help you validate, dry-run, and review changes to your policies. Policies are expressed in YAML and include the following:

- The type of resource to run the policy against

- Filters to narrow down the set of resources

- Actions to take on the filtered resources

Cloud Custodian is a rules engine for managing public cloud accounts and resources. It allows users to define policies that enable a well-managed cloud infrastructure that is both secure and cost-optimized. It consolidates many of the ad hoc scripts organizations have into a lightweight and flexible tool, with unified metrics and reporting.
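To make the policy structure concrete, here is a Custodian-style policy written as a Python dict; a Custodian YAML policy has the same keys (`name`, `resource`, `filters`, `actions`), and both the `tag:Owner: absent` filter and the `stop` action exist for EC2 in Custodian. The toy evaluator below is only an illustration over hypothetical resource records: the real `custodian run` command queries AWS APIs and supports a far richer filter language.

```python
# Same shape as one entry under `policies:` in a Custodian YAML file.
POLICY = {
    "name": "ec2-require-owner-tag",
    "resource": "ec2",
    "filters": [{"tag:Owner": "absent"}],
    "actions": ["stop"],
}


def tag_map(resource: dict) -> dict:
    """Convert EC2-style [{'Key': ..., 'Value': ...}] tags into a plain dict."""
    return {t["Key"]: t["Value"] for t in resource.get("Tags", [])}


def matches(resource: dict, flt: dict) -> bool:
    """Evaluate one simple value filter (a tiny subset of Custodian's filter language)."""
    for key, expected in flt.items():
        if key.startswith("tag:"):
            value = tag_map(resource).get(key[len("tag:"):])
        else:
            value = resource.get(key)
        if expected == "absent":
            if value is not None:
                return False
        elif expected == "present":
            if value is None:
                return False
        elif value != expected:
            return False
    return True


def evaluate(policy: dict, resources: list) -> tuple:
    """Return the ids of resources matching all filters, plus the actions to apply."""
    hits = [r for r in resources if all(matches(r, f) for f in policy["filters"])]
    return [r["InstanceId"] for r in hits], policy["actions"]


if __name__ == "__main__":
    inventory = [  # hypothetical records in the shape returned by EC2 DescribeInstances
        {"InstanceId": "i-0aaa", "Tags": [{"Key": "Owner", "Value": "alice"}]},
        {"InstanceId": "i-0bbb", "Tags": []},
    ]
    print(evaluate(POLICY, inventory))
```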

Security Hub: AWS Security Hub gives you a comprehensive view of your security alerts and security posture across your AWS accounts. It’s a single place that aggregates, organizes, and prioritizes your security alerts, or findings, from multiple AWS services, such as Amazon GuardDuty, Amazon Inspector, Amazon Macie, AWS Identity and Access Management (IAM) Access Analyzer, and AWS Firewall Manager, as well as from AWS Partner solutions like Cloud Custodian. You can also act on these findings by investigating them in Amazon Detective, or by using Amazon CloudWatch Events rules to send them to ITSM, chat, Security Information and Event Management (SIEM), Security Orchestration Automation and Response (SOAR), and incident management tools, or to custom remediation playbooks.
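The CloudWatch Events (now Amazon EventBridge) routing described above could look like the sketch below: a rule pattern that matches imported Security Hub findings, and a handler that turns high-severity findings into ticket payloads. The fields read from each finding (`Id`, `AwsAccountId`, `Title`, `Severity.Label`, `Resources`) follow the AWS Security Finding Format; the ticket fields and the HIGH threshold are hypothetical choices for an unspecified ITSM tool.

```python
# EventBridge (CloudWatch Events) rule pattern matching imported Security Hub findings.
EVENT_PATTERN = {
    "source": ["aws.securityhub"],
    "detail-type": ["Security Hub Findings - Imported"],
}

SEVERITY_RANK = {"INFORMATIONAL": 0, "LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def matches_pattern(event: dict) -> bool:
    """Simplified check mirroring EVENT_PATTERN (EventBridge does this matching for you)."""
    return all(event.get(key) in allowed for key, allowed in EVENT_PATTERN.items())


def findings_to_tickets(event: dict, min_severity: str = "HIGH") -> list:
    """Turn findings at or above min_severity into hypothetical ITSM ticket payloads."""
    if not matches_pattern(event):
        return []
    threshold = SEVERITY_RANK[min_severity]
    tickets = []
    for finding in event.get("detail", {}).get("findings", []):
        label = finding.get("Severity", {}).get("Label", "INFORMATIONAL")
        if SEVERITY_RANK.get(label, 0) < threshold:
            continue
        tickets.append({
            "short_description": f"[{label}] {finding.get('Title', 'Security Hub finding')}",
            "account": finding.get("AwsAccountId"),
            "resources": [r.get("Id") for r in finding.get("Resources", [])],
            "correlation_id": finding.get("Id"),  # lets the ITSM de-duplicate repeats
        })
    return tickets
```

In a deployment, `findings_to_tickets` would sit inside a Lambda target of the rule and post each payload to the ITSM tool’s API.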

The table below compares features across AWS Control Tower, Cloud Custodian, and Security Hub; as it shows, these solutions complement each other across common compliance needs.

| No. | AWS Control Tower | Cloud Custodian | Security Hub |
|---|---|---|---|
| 1 | Easy to implement and configure in a few clicks | Lightweight and flexible open-source framework for deploying cloud policies | Gives a comprehensive view of security alerts and security posture across AWS accounts |
| 2 | Helps achieve “Governance at Scale”: account management, security, compliance automation, budget and cost management | Helps achieve real-time compliance and cost management | A single place that aggregates, organizes, and prioritizes your security alerts, or findings, from multiple AWS services |
| 3 | Predefined guardrails based on best practices (establish / enable guardrails) | We define the rules and Cloud Custodian enforces them | Continuously monitors the account using automated security checks based on AWS best practices |
| 4 | Guardrails are enabled at the organization level | Account-specific requirements to include or exclude certain policies can be handled as exemptions | With a few clicks in the AWS Security Hub console, we can connect multiple AWS accounts and consolidate findings across them |
| 5 | Automates compliant account provisioning | Can be included in the account creation workflow to deploy a set of policies to every AWS account as part of bootstrapping | Automates continuous account- and resource-level configuration and security checks using industry standards and best practices |
| 6 | Separate account for centralized logging of all activities across accounts | Produces comprehensive logs whenever a policy is executed, which can be stored in an S3 bucket | Lets you create and customize your own insights, tailored to your specific security and compliance needs |
| 7 | Separate account for audit, designed to give security and compliance teams read and write access to all accounts | Can be integrated with AWS Config, AWS Security Hub, AWS Systems Manager, and AWS X-Ray | Diverse ecosystem of partner integrations |
| 8 | Single-pane dashboard with visibility into all OUs, accounts, and guardrails | Needs integration with Security Hub to view all policies implemented across regions and accounts | Summary dashboard to monitor your security posture and quickly identify security issues and trends across AWS accounts |

Relevance Lab Compliance as a Code Framework

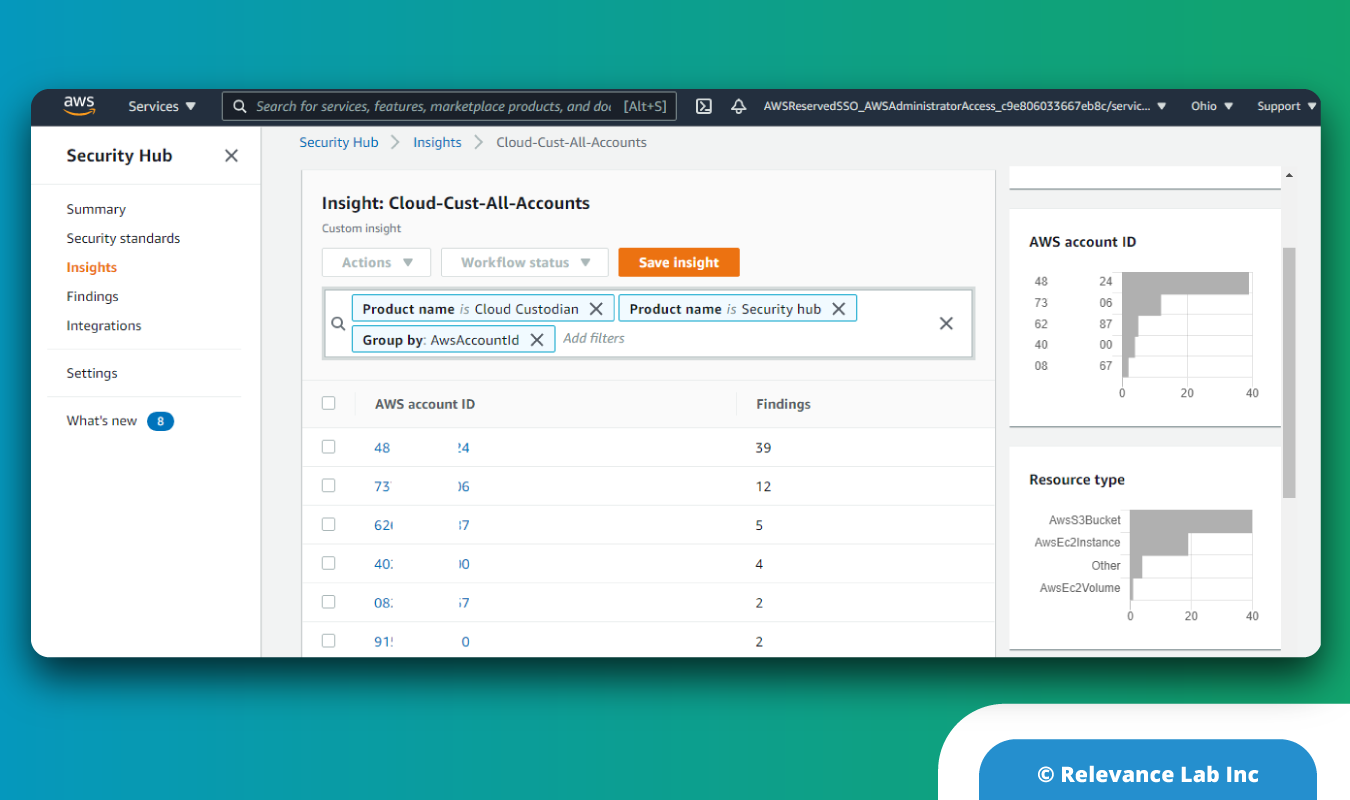

Relevance Lab’s Compliance as a Code framework is an integrated model combining AWS Control Tower (CT), Cloud Custodian, and AWS Security Hub. As shown in the diagram, CT gives organizations predefined multi-account governance based on AWS best practices, and account provisioning is standardized across the hundreds or thousands of accounts within the organization. By enabling AWS Config rules, you can add compliance checks to manage security, cost, and accounts. To implement event- and action-based policies, Cloud Custodian is deployed as a complement to AWS CT, monitoring events, sending notifications, and taking remediation actions. Because these policies run in AWS Lambda, Cloud Custodian enforces Compliance as Code with auto-remediation, enabling organizations to accelerate towards security and compliance at the same time. Real-time visibility into who made what changes, and from where, lets us detect human errors and non-compliance and take suitable remediation. This improves operational efficiency and brings cost optimization.

For example, Custodian can identify all untagged EC2 instances, or EBS volumes that are not attached to an EC2 instance, and notify the account admin that these resources will be terminated in the next 48 to 72 hours if no action is taken. A custom insights dashboard on Security Hub helps admins monitor non-compliance and integrate with an ITSM tool to create tickets and assign them to resolver groups. RL has implemented Compliance as a Code for its own SaaS production platform, RLCatalyst Research Gateway, a custom cloud portal for researchers.
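The notify-then-act flow in this example is a grace-period pattern. A minimal sketch, assuming a 72-hour grace period and a hypothetical “has at least one tag” compliance rule: resources are marked with a due time, and the action runs only if the resource is still non-compliant once that time has passed. Custodian implements the same idea with its `mark-for-op` action and `marked-for-op` filter; this standalone code just shows the logic.

```python
from datetime import datetime, timedelta, timezone

GRACE_PERIOD = timedelta(hours=72)  # assumed: upper end of the 48 to 72 hour window


def is_compliant(resource: dict) -> bool:
    """Hypothetical rule: a resource is compliant once it carries at least one tag."""
    return bool(resource.get("Tags"))


def mark_for_op(resource: dict, now: datetime, op: str = "terminate") -> dict:
    """Record the pending operation and its due time (Custodian records this as a tag)."""
    marked = dict(resource)
    marked["pending_op"] = {"op": op, "due": (now + GRACE_PERIOD).isoformat()}
    return marked


def due_for_action(resource: dict, now: datetime) -> bool:
    """True only if the resource was marked, is still non-compliant, and the grace period is over."""
    pending = resource.get("pending_op")
    if pending is None or is_compliant(resource):
        return False
    return now >= datetime.fromisoformat(pending["due"])


if __name__ == "__main__":
    t0 = datetime.now(timezone.utc)
    instance = mark_for_op({"InstanceId": "i-0example", "Tags": []}, t0)
    print("notify owner; action due at", instance["pending_op"]["due"])
    print("due after 24h?", due_for_action(instance, t0 + timedelta(hours=24)))
    print("due after 73h?", due_for_action(instance, t0 + timedelta(hours=73)))
```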

Common Use Cases

How to get started

Relevance Lab is an AWS consulting partner and helps organizations achieve Compliance as a Code using AWS best practices. While enterprises can try to build some of these solutions themselves, doing so is time-consuming and error-prone and calls for a specialist partner. RL has helped 10+ enterprises with this need and has a reusable framework to meet security and compliance requirements. To start, customers can enroll in a 10-10 program that gives insight into their current cloud compliance. Based on the assessment, Relevance Lab shares a gap analysis report and helps design the appropriate “to-be” model. Our Cloud Governance professional services group also provides implementation and support services with agility and cost-effectiveness.

For more details, please feel free to reach out to marketing@relevancelab.com

COVID-19 increased the momentum of cloud adoption worldwide, as organizations solved new challenges with a frictionless approach built on automation, touchless interactions, and remote workforces. Relevance Lab, in partnership with AWS, responded with niche solutions that help clients adopt AWS the right way.

2021 Blog, AWS Platform, Blog, Featured

The year 2020 undoubtedly brought unprecedented challenges with COVID-19, requiring countries, governments, businesses, and people to respond proactively with new-normal approaches. Some businesses managed to respond to the dynamic macro environment with much more agility, flexibility, and scale. Cloud computing played a dominant role in helping businesses stay connected and deliver critical solutions. Relevance Lab scaled up its partnership with AWS to align with the new focus areas, resulting in a significant increase in the coverage of products, specialized solutions, and customer impact over the past 12 months compared with previous years.

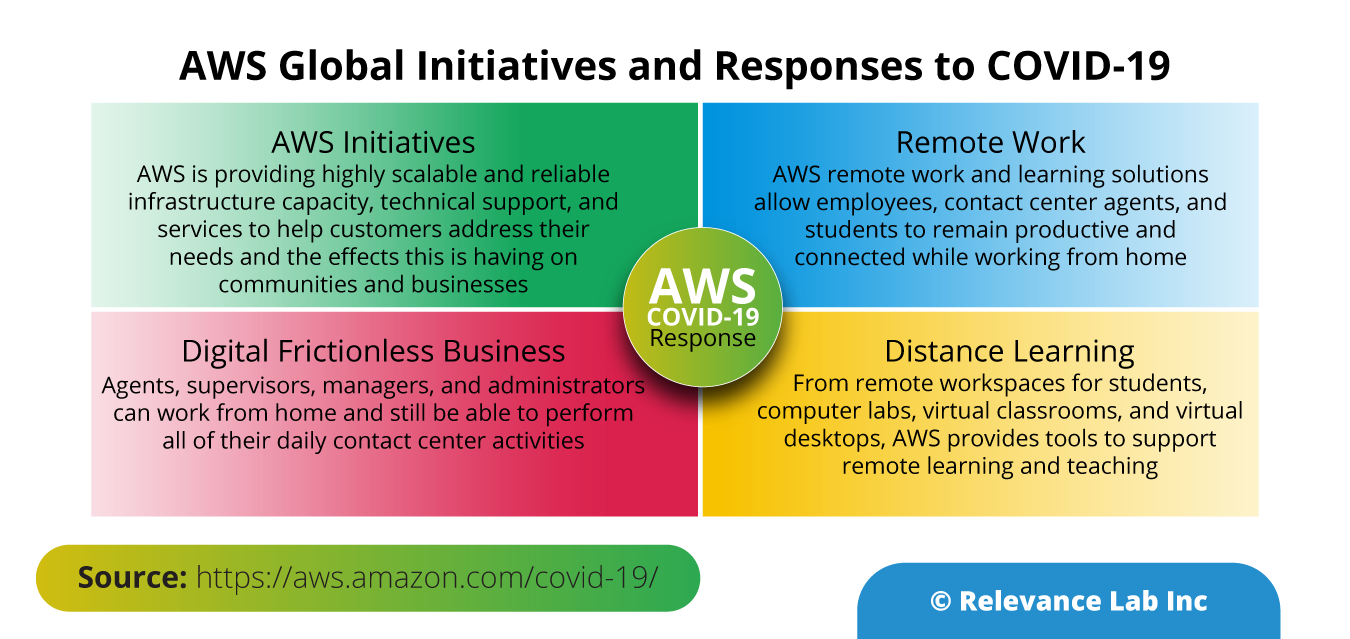

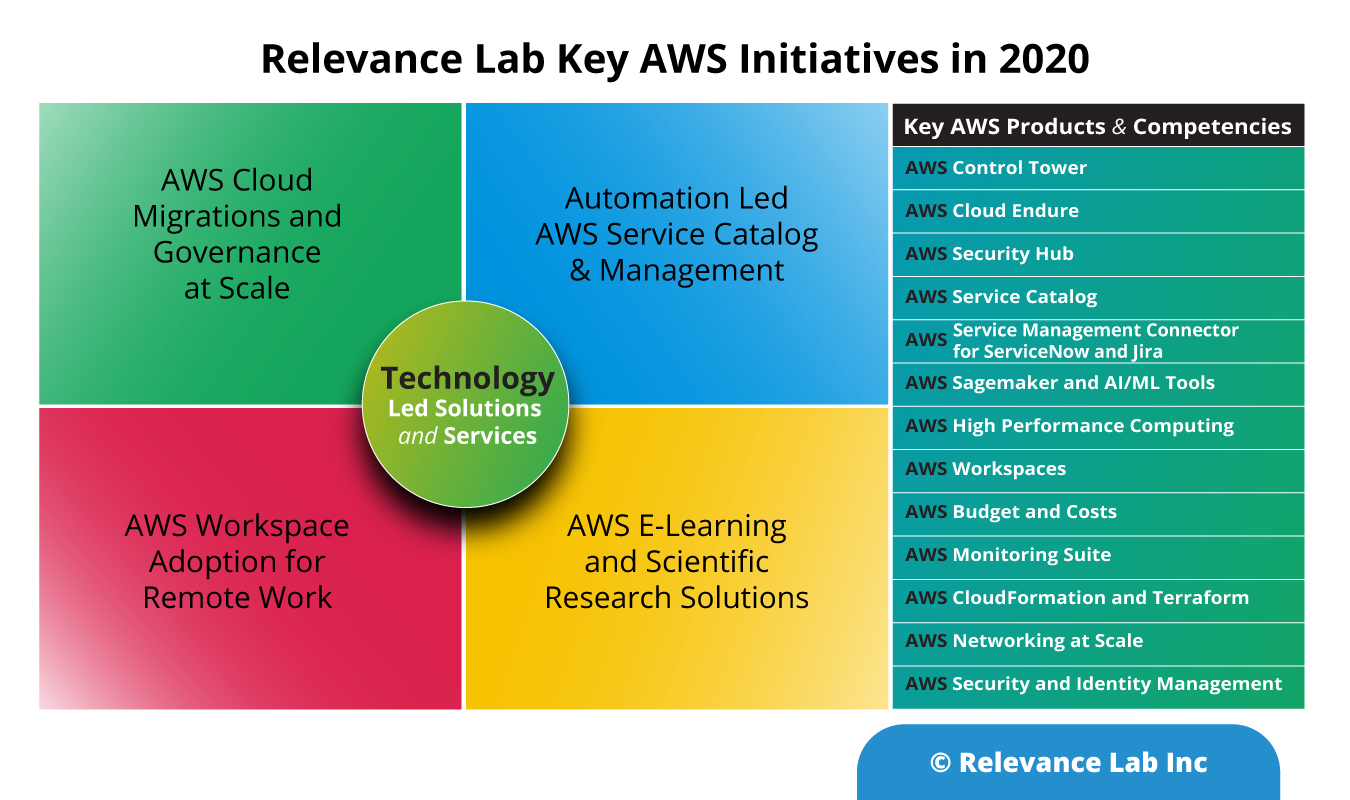

AWS launched a number of new global initiatives in 2020 in response to the business challenges resulting from the COVID-19 pandemic. The following picture summarizes those initiatives.

Relevance Lab’s Focus areas have been very well aligned

Given the macro-environment challenges, Relevance Lab quickly focused on helping customers deal with the emerging challenges, in areas that were highly complementary to the AWS global initiatives and responses listed above.

Relevance Lab aligned its AWS solutions and services across four dominant themes, leveraging RL’s own IP-based platforms and deep cloud competencies. The following picture highlights those key initiatives and the native AWS products and services used in the process.

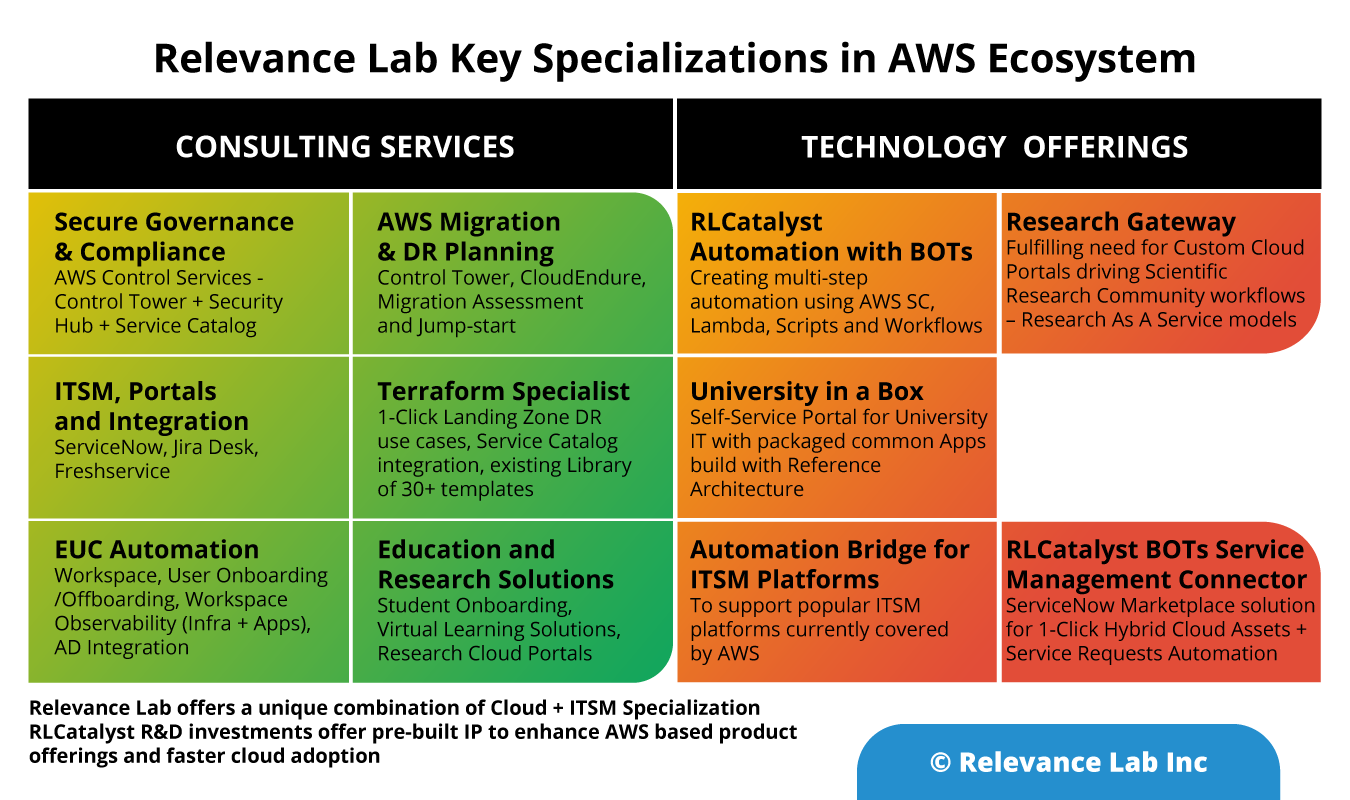

Relevance Lab also made major strides in progressing along the AWS Partnership maturity levels for specialized consulting and key product offerings. The following picture summarizes the major achievements in 2020.

AWS Specializations & Spotlights

Relevance Lab is a specialist cloud managed services company with deep expertise in DevOps, service automation, and ITSM integrations. It also has the RLCatalyst platform, built to support automation across AWS Cloud and ITSM platforms. The RLCatalyst family of solutions helps with self-service cloud portals, IT service monitoring, and automation through BOTs. While maintaining a multi-sector client base, we are also uniquely focused on solutions for Higher Education, Public Sector, and Research clients.

Spotlight-1: AWS Cloud Portals Solution

Relevance Lab has developed a family of cloud portal solutions on top of its RLCatalyst platform. Cloud portal solutions aim to simplify AWS consumption using self-service models, with emphasis on 1-click provisioning and lifecycle management, dashboard views of budget and cost consumption, and modeling of personas, roles, and responsibilities within a sector context.

A unique feature of the above solutions is that they promote a hybrid model of consumption wherein users can bring their own AWS accounts (consumption accounts) under the framework of our cloud portal solution and benefit from being able to consume AWS for their educational and research needs in an easy self-service model.

The solutions can be consumed under either an Enterprise or a SaaS license. In addition, the solutions will be made available on AWS Marketplace soon.

Spotlight-2: AWS Security Governance at Scale Framework

The framework and deployment architecture uses AWS Control Tower as the foundational service and other closely aligned and native AWS products and services such as AWS Service Catalog, AWS Security Hub, AWS Budgets, etc. and addresses subject areas such as multi-account management, cost management, security, compliance & governance.

Relevance Lab can assess, design, and deploy or migrate to a fully secure AWS environment that lends itself to governance at scale. To encourage clients to adopt this journey, we have launched a 10-10 Program for AWS Security Governance that gives clients an upfront blueprint of the entire migration or deployment process and the end-state architecture, so that they can make an informed decision.

Spotlight-3: Automated User Onboarding/Offboarding for Enterprises Use Case

Relevance Lab is a unique partner with deep expertise in AWS and ITSM platforms such as ServiceNow, Freshservice, and Jira Service Desk. The intersection of these platforms yields relevant use cases for the industry.

Relevance Lab has come up with a solution for automated User onboarding & offboarding in an enterprise context. This solution brings together multiple systems in a workflow model to accomplish user onboarding and offboarding tasks for an enterprise. It includes integration across HR systems, AWS Service Catalog and other services, ITSM platforms such as ServiceNow and assisted by Relevance Lab’s RLCatalyst BOTs engine to perform an end-to-end user onboarding/offboarding orchestration in an unassisted manner.
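The onboarding orchestration described above is essentially an ordered workflow across systems. A minimal sketch under stated assumptions: each step is a hypothetical local function standing in for a call to a directory, AWS Service Catalog, or an ITSM tool, and the runner stops at the first failure so it can be retried or escalated. It does not reflect the actual RLCatalyst BOTs engine.

```python
from typing import Callable, Dict, List, Tuple

User = Dict[str, object]
Step = Tuple[str, Callable[[User], None]]


def create_directory_account(user: User) -> None:
    """Hypothetical step: derive a login name (a real step would call the directory)."""
    user["login"] = f"{user['first']}.{user['last']}".lower()


def provision_products(user: User) -> None:
    """Hypothetical step: request role-based products (e.g. via AWS Service Catalog)."""
    user["products"] = ["workspace-for-" + str(user["role"])]


def close_itsm_ticket(user: User) -> None:
    """Hypothetical step: mark the onboarding request as fulfilled in the ITSM tool."""
    user["ticket_state"] = "resolved"


ONBOARDING: List[Step] = [
    ("directory", create_directory_account),
    ("service-catalog", provision_products),
    ("itsm", close_itsm_ticket),
]


def run_workflow(steps: List[Step], user: User) -> List[Tuple[str, str]]:
    """Run steps in order, stopping at the first failure so it can be retried or escalated."""
    log: List[Tuple[str, str]] = []
    for name, action in steps:
        try:
            action(user)
        except Exception as exc:  # record the failure and stop the workflow
            log.append((name, f"failed: {exc!r}"))
            break
        log.append((name, "done"))
    return log


if __name__ == "__main__":
    new_hire = {"first": "Ada", "last": "Lovelace", "role": "researcher"}
    for step, status in run_workflow(ONBOARDING, new_hire):
        print(f"{step}: {status}")
```

Offboarding would use the same runner with a different step list (for example, revoking access before closing accounts).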

Key Customer Use Cases and Success Stories in 2020

Relevance Lab helped customers across verticals, amid growing momentum for cloud adoption in the post-COVID-19 situation, to rework their digital solutions by adopting touchless interactions, remote distributed workforces, and strong security governance for frictionless business.

AWS Best Practices – Blogs, Campaigns, Technical Write-ups

The following is a collection of knowledge articles and best practices published throughout the year related to our AWS centered solutions & services.

AWS Service Management

- AWS & ServiceNow Integration for User Onboarding

- Blog & Demo on AWS & Jira SD Integration

- Blog & Demo on AWS, Freshservice & RLCatalyst Integration

AWS Practice Solution Campaigns

AWS Governance at Scale

- Blog & Demo on AWS Control Tower adoption

- AWS Security Governance for Enterprises “The Right Way”

- Intelligent SOX Automation

- Automated Patch Management

AWS Workspaces

RLCatalyst Product Details

- RLCatalyst on AWS Marketplace

- RLCatalyst supports SAML 2.0 with Okta

- RLCatalyst BOTs Service Management Connector

- How to make your BOTs Intelligent?

- Automation Service Bus – Intelligent and Touchless model

AWS Infrastructure Automation

AWS E-Learning & Research Workbench Solutions

- AWS Scientific Research with RLCatalyst Research Gateway

- AWS ServiceNow Research Workbench

- AWS UniBox offering

Cloud Networking Best Practices

Summary

The momentum of Cloud adoption in 2020 is quite likely to continue and grow in the new year 2021. Relevance Lab is a trusted partner in your cloud adoption journey driven by focus on following key specializations:

- Cloud First Approach for Workload Planning

- Cloud Governance 360 for using AWS Cloud the Right Way

- Automation Led Service Delivery Management with Self Service ITSM and Cloud Portals

- Driving Frictionless Business and AI-Driven outcomes for Digital Transformation and App Modernization

To learn more about our services and solutions listed above or engage us in consultative discussions for your AWS and other IT service needs, feel free to contact us at marketing@relevancelab.com

Analyze and debug the performance of distributed applications using AWS X-Ray. AWS X-Ray provides an end-to-end view of requests as they travel through your application and shows a map of your application’s underlying components.


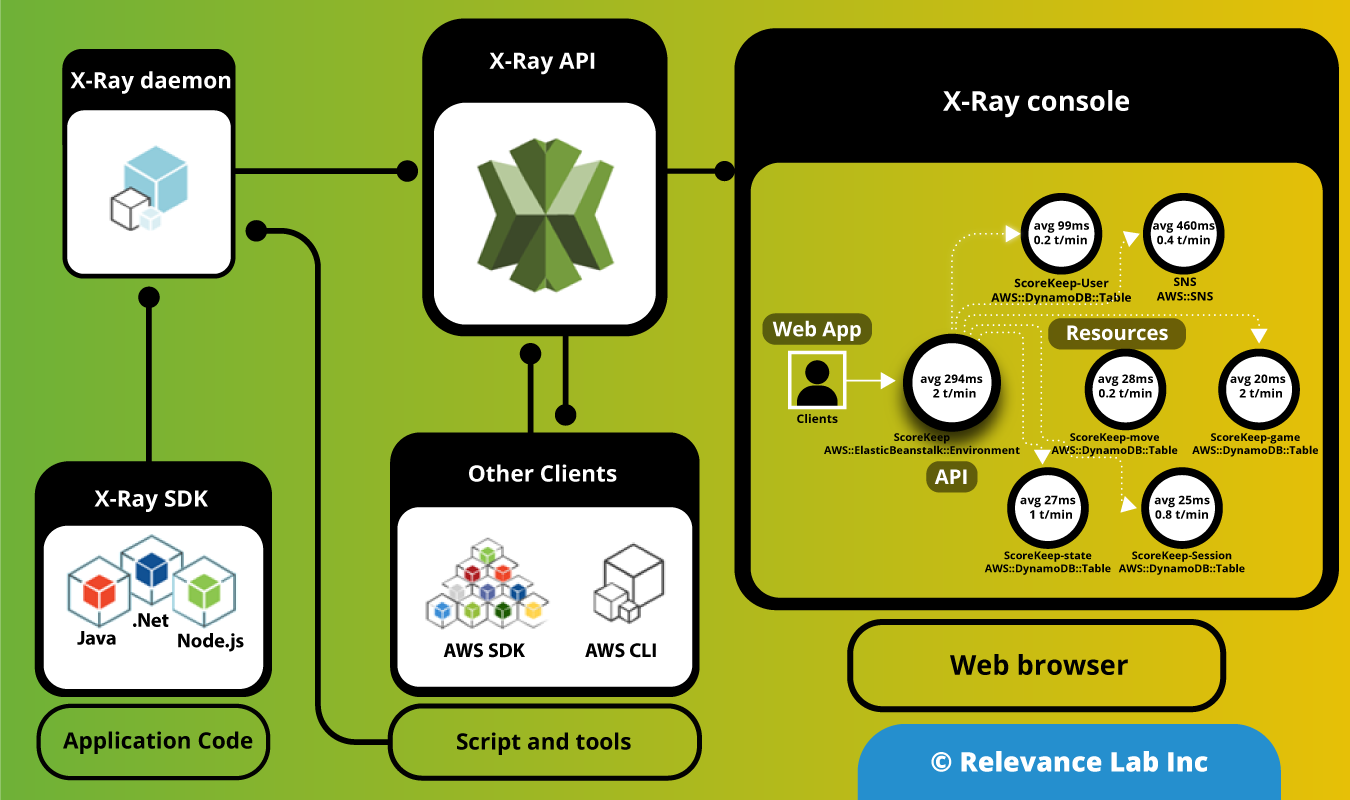

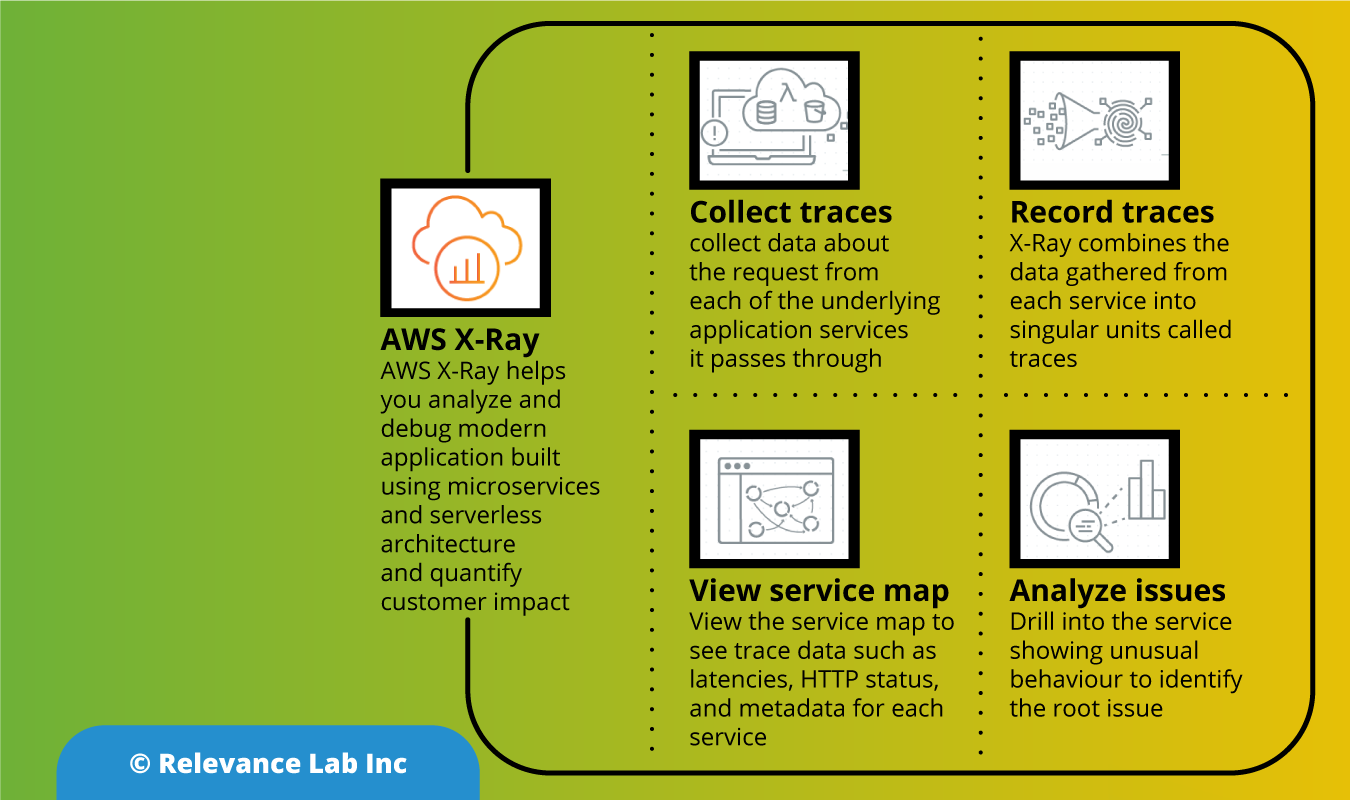

AWS X-Ray is an application performance service that collects data about the requests your application processes and provides tools to view, filter, and gain insights into that data, so you can identify issues and opportunities for optimization. It lets developers create a service map that displays an application’s architecture. For any traced request to your application, you can see detailed information not only about the request and response, but also about calls your application makes to downstream AWS resources, microservices, databases, and HTTP web APIs. It works with both microservice-based and serverless applications.

The X-Ray SDK provides:

- Interceptors to add to your code to trace incoming HTTP requests

- Client handlers to instrument AWS SDK clients that your application uses to call other AWS services

- An HTTP client to use to instrument calls to other internal and external HTTP web services

The SDK also supports instrumenting calls to SQL databases, automatic AWS SDK client instrumentation, and other features.
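Conceptually, each interceptor or client handler wraps a call, times it, and records the outcome as a subsegment. The minimal Python sketch below illustrates that pattern with a plain decorator. The `Recorder` class and its `capture` method are hypothetical stand-ins chosen for illustration, not the X-Ray SDK’s API, and the recorded fields are a simplified subset of a real subsegment.

```python
import functools
import secrets
import time


class Recorder:
    """Hypothetical in-memory recorder standing in for an SDK recorder."""

    def __init__(self):
        self.subsegments = []

    def capture(self, name, namespace="remote"):
        """Decorator that times a downstream call and records it as a subsegment."""
        def decorator(func):
            @functools.wraps(func)
            def wrapper(*args, **kwargs):
                sub = {
                    "name": name,
                    "id": secrets.token_hex(8),  # 64-bit ID as 16 hex digits
                    "namespace": namespace,
                    "start_time": time.time(),
                }
                try:
                    return func(*args, **kwargs)
                except Exception:
                    sub["fault"] = True  # mark the call as failed, then re-raise
                    raise
                finally:
                    sub["end_time"] = time.time()
                    self.subsegments.append(sub)
            return wrapper
        return decorator


recorder = Recorder()


@recorder.capture("inventory-api")
def fetch_stock(sku):
    # Placeholder for a real HTTP or AWS SDK call.
    return {"sku": sku, "qty": 3}
```

Calling `fetch_stock("A-1")` returns the result unchanged while appending one timed record to `recorder.subsegments`. Using a decorator keeps the instrumentation out of the business logic, which is the same design goal the SDK’s interceptors and handlers serve.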

Instead of sending trace data directly to X-Ray, the SDK sends JSON segment documents to a daemon process listening for UDP traffic. The X-Ray daemon buffers segments in a queue and uploads them to X-Ray in batches. The daemon is available for Linux, Windows, and macOS, and is included on AWS Elastic Beanstalk and AWS Lambda platforms.
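To make the daemon hand-off concrete, here is a minimal Python sketch of what gets sent. It assumes the daemon’s default UDP address of 127.0.0.1:2000 and the documented wire format (a JSON header line, a newline, then the segment document), and builds a segment with the required fields: `name`, `id`, `trace_id`, `start_time`, and `end_time`. The helper function names are hypothetical; a real SDK also handles subsegments, sampling, and error reporting for you.

```python
import json
import secrets
import socket
import time

DAEMON_ADDRESS = ("127.0.0.1", 2000)  # assumed default daemon UDP listener
HEADER = {"format": "json", "version": 1}


def new_trace_id(now=None):
    """Trace ID: version 1, 8 hex digits of epoch seconds, 24 random hex digits."""
    epoch = int(now if now is not None else time.time())
    return f"1-{epoch:08x}-{secrets.token_hex(12)}"


def build_segment(name, start, end, trace_id=None):
    """Minimal segment document containing only the required fields."""
    return {
        "name": name,
        "id": secrets.token_hex(8),  # 64-bit segment ID as 16 hex digits
        "trace_id": trace_id or new_trace_id(start),
        "start_time": start,
        "end_time": end,
    }


def encode_for_daemon(segment):
    """Daemon wire format: JSON header, newline, JSON segment document."""
    return (json.dumps(HEADER) + "\n" + json.dumps(segment)).encode("utf-8")


def send_to_daemon(segment, address=DAEMON_ADDRESS):
    """Fire-and-forget UDP send; the daemon batches and uploads segments."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_for_daemon(segment), address)
```

Because the send is a single UDP datagram, the application never waits on the X-Ray API; buffering, batching, and retries are left to the daemon.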

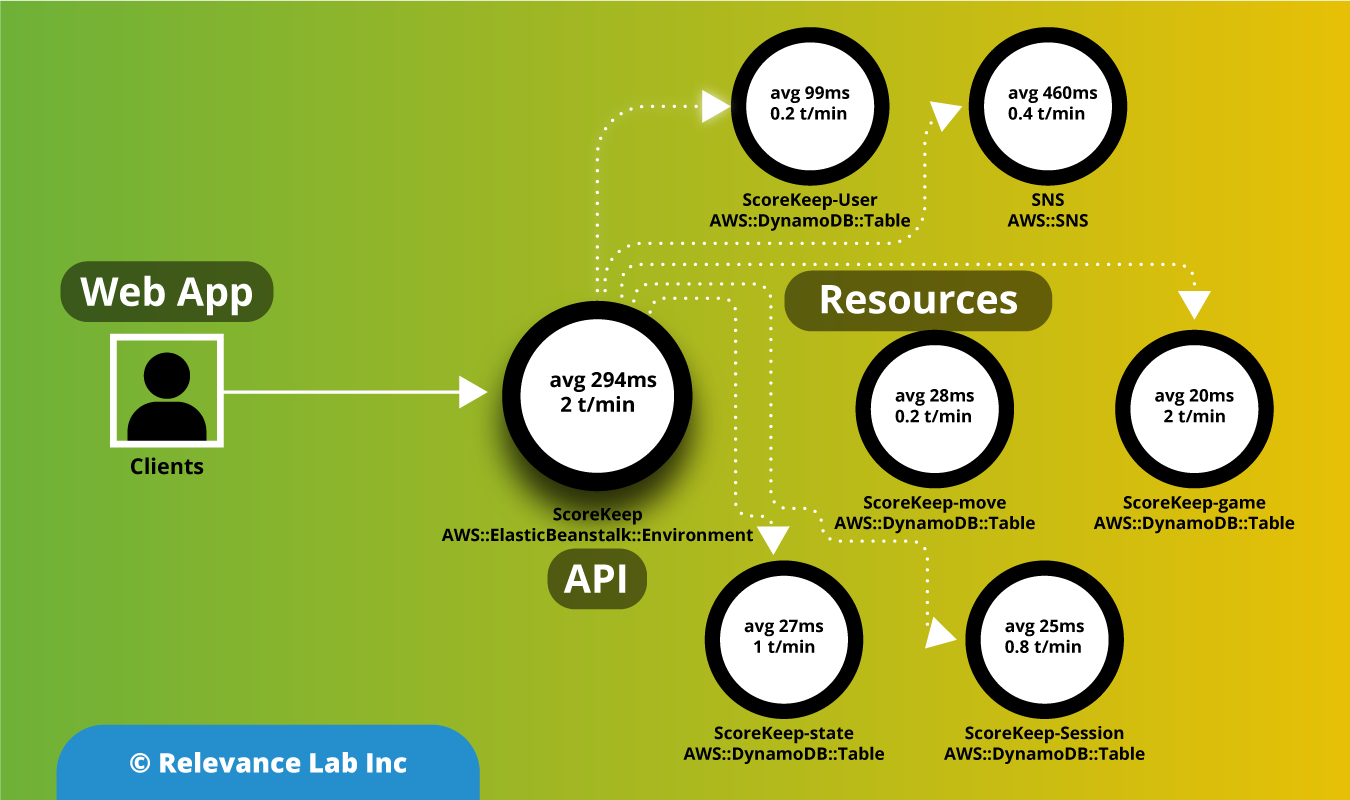

X-Ray uses trace data from the AWS resources that power your cloud applications to generate a detailed service graph. The service graph shows the client, your front-end service, and the backend services that process requests and persist data. Use the service graph to identify bottlenecks, latency spikes, and other issues you need to resolve to improve the performance of your applications.

AWS X-Ray Analytics helps you quickly and easily understand:

- Any latency degradation or increase in error or fault rates

- The latency experienced by customers in the 50th, 90th, and 95th percentiles

- The root cause of the issue at hand

- End users who are impacted, and by how much

- Comparisons of trends based on different criteria, for example, whether a new deployment caused a regression
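Percentile latencies such as p50, p90, and p95 are easier to interpret once you see how they are computed. The Python sketch below uses the nearest-rank method on a list of request latencies. Nearest-rank is one common definition and an assumption here; X-Ray Analytics may aggregate percentiles differently, and the sample values are made up for illustration.

```python
import math


def percentile(latencies_ms, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not latencies_ms:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(latencies_ms)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def latency_report(latencies_ms, percentiles=(50, 90, 95)):
    """Summarize a batch of request latencies at the given percentiles."""
    return {f"p{p}": percentile(latencies_ms, p) for p in percentiles}


samples = [12, 15, 11, 90, 14, 13, 200, 16, 12, 18]  # hypothetical latencies in ms
report = latency_report(samples)  # {"p50": 14, "p90": 90, "p95": 200}
```

The example shows why tail percentiles matter: the median looks healthy at 14 ms, while the slowest 10% of requests take 90 ms or more, which is exactly the kind of degradation an average would hide.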

How AWS X-Ray Works

AWS X-Ray receives data from services as segments. X-Ray groups segments that share a common request into traces, then processes the traces to generate a service map, which provides a visual depiction of the application.
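The segments-to-traces-to-service-map flow can be sketched in a few lines of Python. This is a simplified, hypothetical model: each segment is a dict with `trace_id`, `id`, `name`, and an optional `parent_id`, and we assume `parent_id` points directly at the calling segment (real X-Ray documents typically point at a subsegment of the caller). Grouping by `trace_id` yields traces, and counting caller-to-callee pairs yields the edges of a service map.

```python
from collections import defaultdict


def group_into_traces(segments):
    """Group segments by shared trace_id: one trace per end-to-end request."""
    traces = defaultdict(list)
    for seg in segments:
        traces[seg["trace_id"]].append(seg)
    return dict(traces)


def service_map_edges(segments):
    """Count caller -> callee edges, assuming parent_id is the caller's segment id."""
    edges = defaultdict(int)
    for trace in group_into_traces(segments).values():
        by_id = {seg["id"]: seg for seg in trace}
        for seg in trace:
            parent = by_id.get(seg.get("parent_id"))
            if parent is not None:
                edges[(parent["name"], seg["name"])] += 1
    return dict(edges)


# Hypothetical segments from two requests.
segments = [
    {"trace_id": "t1", "id": "a", "name": "frontend"},
    {"trace_id": "t1", "id": "b", "name": "orders", "parent_id": "a"},
    {"trace_id": "t1", "id": "c", "name": "dynamodb", "parent_id": "b"},
    {"trace_id": "t2", "id": "d", "name": "frontend"},
    {"trace_id": "t2", "id": "e", "name": "orders", "parent_id": "d"},
]
```

Here `service_map_edges(segments)` produces two edges, `frontend -> orders` (seen in both traces) and `orders -> dynamodb` (seen once). A real service map additionally attaches latency, error, and fault statistics to each node and edge.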

AWS X-Ray features

- Simple setup

- End-to-end tracing

- AWS Service and Database Integrations

- Support for Multiple Languages

- Request Sampling

- Service map
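Request sampling keeps tracing overhead and cost predictable. By default, the X-Ray SDKs record the first request each second plus five percent of any additional requests. The Python class below is a per-process sketch of that reservoir-plus-fixed-rate idea; `ReservoirSampler` is a hypothetical name, and the real service can manage sampling rules centrally and share reservoir quota across hosts, which this sketch does not attempt.

```python
import random
import time


class ReservoirSampler:
    """Sketch of a reservoir-plus-fixed-rate sampler: always trace up to
    `reservoir_per_second` requests in each one-second window, then sample
    a `fixed_rate` fraction of the remaining requests."""

    def __init__(self, reservoir_per_second=1, fixed_rate=0.05, rng=None, clock=time.time):
        self.reservoir_per_second = reservoir_per_second
        self.fixed_rate = fixed_rate
        self.rng = rng or random.Random()
        self.clock = clock
        self._current_second = None
        self._used = 0

    def should_sample(self):
        now = int(self.clock())
        if now != self._current_second:
            # A new one-second window starts: refill the reservoir.
            self._current_second = now
            self._used = 0
        if self._used < self.reservoir_per_second:
            self._used += 1
            return True
        # Reservoir exhausted for this second: fall back to the fixed rate.
        return self.rng.random() < self.fixed_rate
```

The reservoir guarantees that even low-traffic services produce some traces every second, while the fixed rate caps how many traces a busy service generates.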

Benefits of Using AWS X-Ray

Review Request Behaviour

AWS X-Ray traces user requests and aggregates the information generated by the individual resources and services that make up your application, giving you an end-to-end view of your application’s behavior and performance.

Discover Application Issues

With AWS X-Ray, you can extract insights into your application’s performance and find root causes. Because X-Ray traces each request, you can follow request paths to diagnose where in your application performance issues occur and what is causing them.

Improve Application Performance

AWS X-Ray’s service map lets you see the connections between resources and services in your application in real time. You can spot where high latencies occur by visualizing node and edge latency distributions for services, and then drill down into the specific services and paths that affect application performance.

Ready to use with AWS

AWS X-Ray works with Amazon EC2, Amazon Elastic Container Service (Amazon ECS), AWS Elastic Beanstalk, and AWS Lambda. You can use AWS X-Ray with applications written in languages such as Node.js, Java, and .NET that run on these services.

Designed for a Variety of Applications

AWS X-Ray works for both simple and complex applications, whether in development or in production. With X-Ray, you can trace requests made to applications that span multiple AWS Regions, AWS accounts, and Availability Zones.
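Following one request across services depends on each hop forwarding its trace context. X-Ray carries this context in the `X-Amzn-Trace-Id` HTTP header, in the form `Root=<trace id>;Parent=<segment id>;Sampled=<0 or 1>`. The two helper functions below are a hypothetical Python sketch for building and parsing that header, not SDK API; in practice the SDK’s instrumented HTTP clients and interceptors add and read it for you.

```python
TRACE_HEADER = "X-Amzn-Trace-Id"


def format_trace_header(root, parent=None, sampled=None):
    """Build the header value a caller forwards to the next service."""
    parts = [f"Root={root}"]
    if parent:
        parts.append(f"Parent={parent}")
    if sampled is not None:
        parts.append(f"Sampled={1 if sampled else 0}")
    return ";".join(parts)


def parse_trace_header(value):
    """Split 'Key=Value;Key=Value' pairs into a dict, ignoring malformed parts."""
    fields = {}
    for part in value.split(";"):
        key, sep, val = part.strip().partition("=")
        if sep:
            fields[key] = val
    return fields


# Service A calls service B: A forwards its trace ID and its own segment ID.
outgoing = {
    TRACE_HEADER: format_trace_header(
        "1-5759e988-bd862e3fe1be46a994272793", parent="53995c3f42cd8ad8", sampled=True
    )
}
```

On the receiving side, service B would parse the header, reuse `Root` as its segment’s `trace_id`, and record `Parent` as its `parent_id`, so both hops land in the same trace even when they run in different accounts or Regions.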

Why AWS X-Ray?

Developers spend a lot of time searching through application logs, service logs, metrics, and traces to understand performance bottlenecks and pinpoint their root causes. Correlating this information to identify its impact on end users brings its own challenges of mining the data and performing analysis. Triage takes even longer in a distributed microservices architecture, where a single call passes through several microservices. To address these challenges, AWS launched AWS X-Ray Analytics.

X-Ray helps you analyze and debug distributed applications, such as those built using a microservices architecture. Using X-Ray, you can understand how your application and its underlying services are performing to identify and troubleshoot the root causes of performance issues and errors. It helps you debug and triage distributed applications wherever those applications are running, whether the architecture is serverless, containers, Amazon EC2, on-premises, or a mixture of all of these.

Relevance Lab is a specialist AWS partner and can help organizations implement a monitoring and observability framework, including AWS X-Ray, to simplify application management and help identify bugs in complex distributed workflows.

For a demo, please click here.

For more details, please feel free to reach out to marketing@relevancelab.com