
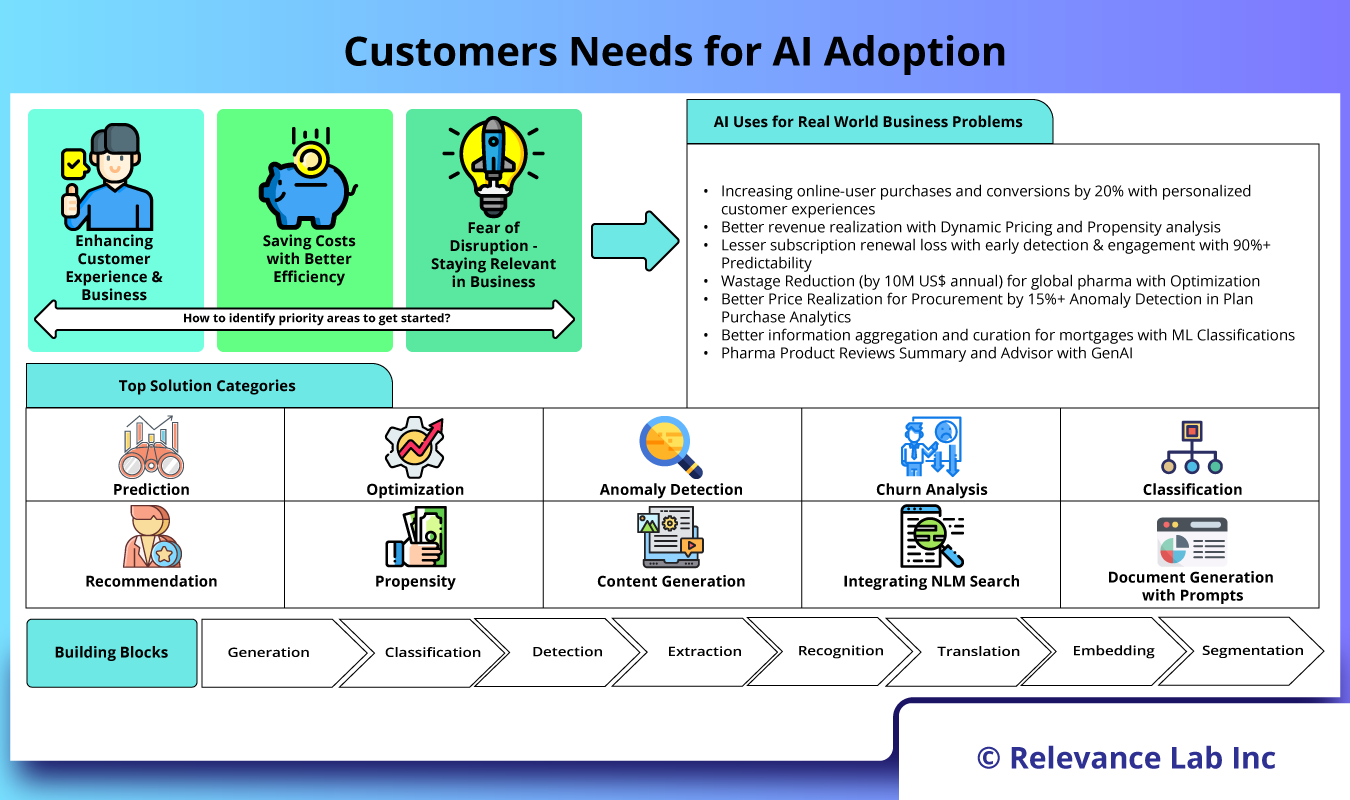

With the rise of artificial intelligence (AI), many enterprises and existing customers are looking for ways to apply the technology to their own development work and use cases. The field is attracting investment rapidly, and adoption tends to be iterative, starting with simple use cases and moving on to more complex business problems. In working with early customers, we have found the following themes to be the first use cases for GenAI adoption in an enterprise context:

- Interactive Chatbots for simple Questions & Answers

- Enhanced Search with Natural Language Processing (NLP) using Document Repositories with data controls

- Summarization of Enterprise Documents and Expert Advisor Tools

While OpenAI provides models for building such solutions, a number of early adopters prefer Microsoft Azure OpenAI Service for its enterprise features.

Microsoft’s Azure OpenAI Service provides REST API access to OpenAI’s language models, including the GPT-4, GPT-35-Turbo, and Embeddings model series. With Azure OpenAI, customers get the security capabilities of Microsoft Azure while running the same models as OpenAI. Azure OpenAI offers private networking, regional availability, responsible AI content filtering, security, and governance.
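As a minimal sketch of what this REST access looks like, the Python snippet below builds (but does not send) a chat completions request. The resource name `my-resource`, the deployment name `my-gpt-35-turbo`, the key placeholder, and the `build_chat_request` helper are illustrative assumptions, and the `api-version` value should be checked against the versions your resource supports.

```python
import json
import urllib.request


def build_chat_request(resource, deployment, api_key, user_message,
                       api_version="2023-05-15"):
    """Build (without sending) a chat completions request for Azure OpenAI.

    `resource` and `deployment` are placeholders for the names chosen when
    the Azure OpenAI resource and model deployment were created.
    """
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = {
        "messages": [
            {"role": "system", "content": "You are a helpful enterprise assistant."},
            {"role": "user", "content": user_message},
        ],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Hypothetical values, for illustration only.
request = build_chat_request(
    "my-resource", "my-gpt-35-turbo", "<your-key>", "What is our leave policy?"
)
# Sending it requires a real resource and key:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```

Note that the URL addresses a deployment that the customer names inside their own Azure resource, which is where the networking and governance controls mentioned above are applied.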

Introduction to GenAI

- What is Generative AI?

- A class of artificial intelligence systems that can create new content, such as images, text, or videos, resembling human-generated data by learning patterns from existing data.

- What is the purpose?

- To create new content or generate responses that are not based on predefined templates or fixed responses.

- How does it work?

- Data is collected through methods such as web scraping or reading documents, directories, or indexes, and is then preprocessed to clean and format it for analysis. AI models, such as machine learning and deep learning algorithms, are trained on this preprocessed data to make predictions or classifications. Having learned patterns from the existing data, the models use that knowledge to produce new, original content.

- How can it be used by enterprises?

- To assist end users (internal or external) in the form of next generation Chatbots.

- To assist stakeholders with automating certain internal content creation processes.

Early Customer Adoption Experience

Customers wanted to experience GenAI for building awareness, validation of early use cases, and “testing the waters” with enterprise-grade security and governance for GenAI technology.

Early Use Cases Identified for Development

The primary focus was content management for enterprise data, specifically the following:

- End User Assistance (Chatbot)

- Product Website Chatbot

- Intranet Chatbot

- Content Creation

- Document Summarization

- Template based Document Generation

- SharePoint

- Optical Character Recognition (OCR)

- Cognitive Search

- Decision-making & Insights

Key Considerations for GenAI Leverage

- Limitations on current Chatbots

- OCR

- Closed chatbot allowing selection of pre-populated options

- Limited scope and intelligence of responses

- Benefits expected from GenAI enhanced Chatbots

- OCR

- Human like responses

- Ability to adapt quickly to new information

- Multi-lingual

- Restricts available data that Chatbot can draw from to verified Enterprise sites

- Potential Concerns

- Can contain biases unintentionally learned by the model

- Potential for errors and hallucinations

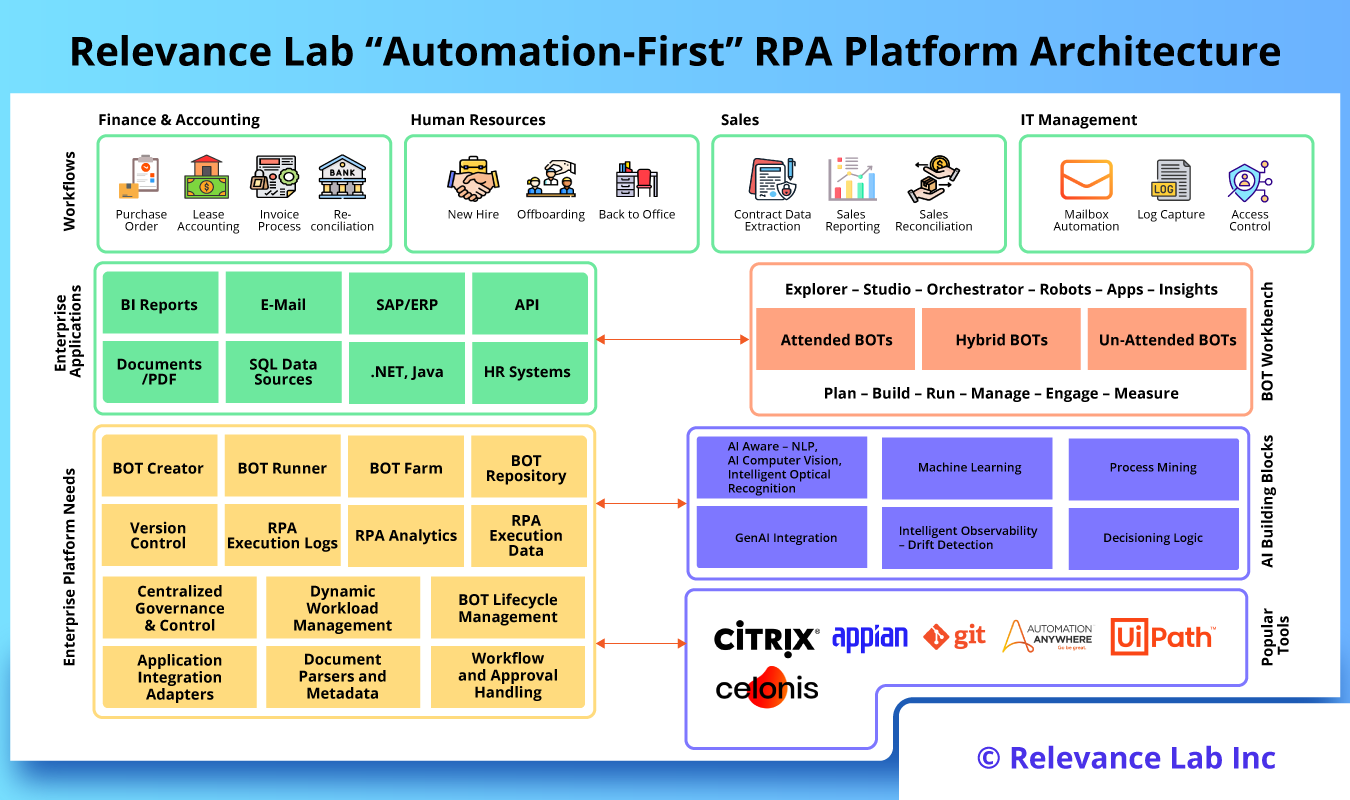

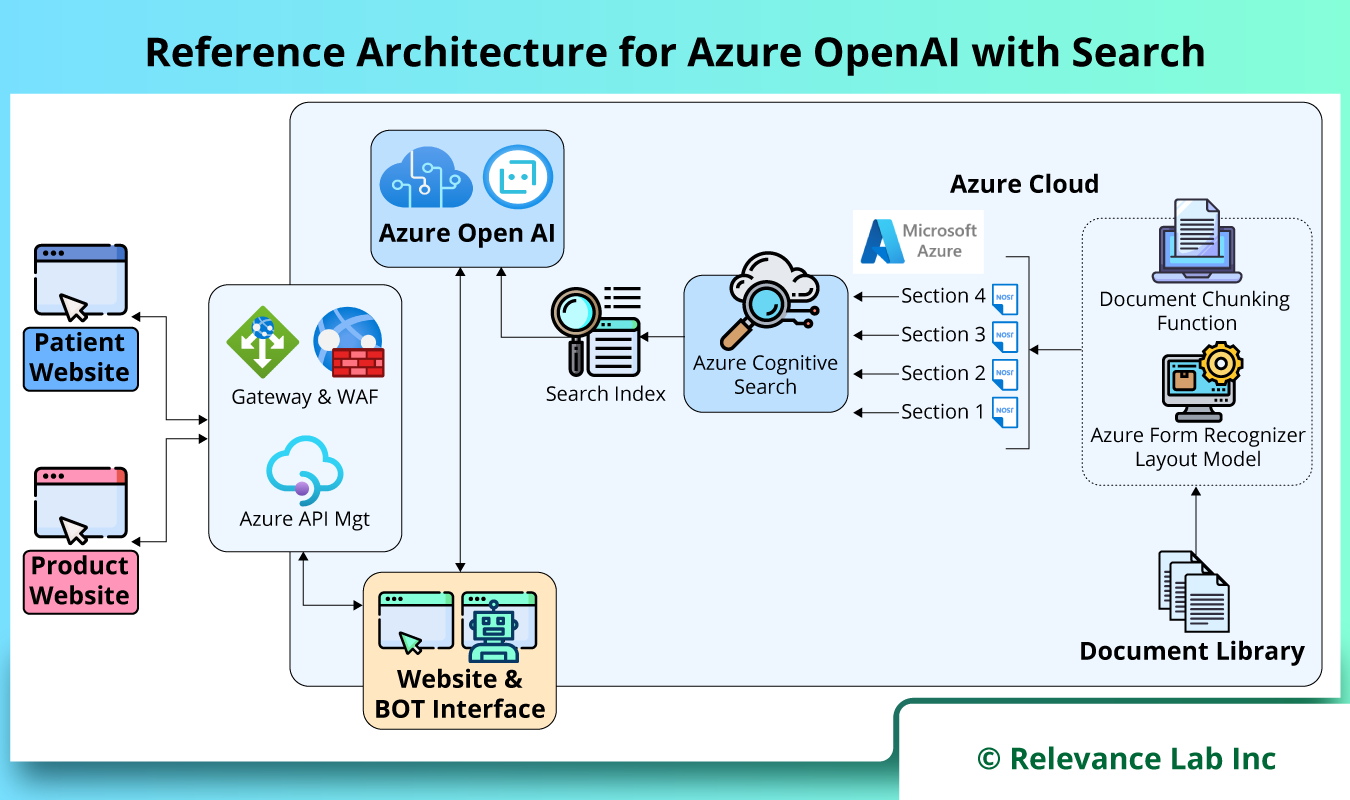

System Architecture

The system architecture using Azure OpenAI takes advantage of several services provided by Azure.

The architecture may include the following components:

Azure OpenAI Service

Azure OpenAI Service is a suite of AI-powered services and tools provided by Microsoft Azure. It offers a wide range of capabilities, including natural language processing, speech recognition, computer vision, and machine learning. With Azure OpenAI Service, developers can integrate powerful AI models and APIs into their applications to build intelligent solutions.

Azure Cognitive Services

Azure Cognitive Services offers a range of AI capabilities that can enhance Chatbot interactions. Services like Language Understanding (LUIS), Speech Services, Search Service, Vision Services and Knowledge Mining can be integrated to enable natural language understanding, speech recognition, and knowledge extraction.

Azure Storage

Azure Storage is a highly scalable and secure cloud storage solution offered by Microsoft Azure. It provides durable and highly available storage for various types of data, including files, blobs, queues, and tables. Azure Storage offers flexible options for storing and retrieving data, with built-in redundancy and encryption features to ensure data protection. It is a fundamental building block for storing and managing data in cloud-based applications.

Form Recognizer

Form Recognizer is a service provided by Azure Cognitive Services that uses machine learning to automatically extract information from structured and unstructured forms and documents. By analyzing documents such as invoices, receipts, or contracts, Form Recognizer can identify key fields and extract relevant data. This makes it easier to process and analyze large volumes of documents. It simplifies data entry and enables organizations to automate document processing workflows.

Service Account

A new service account is required for the team to connect to Azure services programmatically. The service account needs elevated privileges, as required, for the APIs to communicate with Azure services.

Azure API Management

Azure API Management addresses hurdles such as throttling and monitoring. It exposes Azure OpenAI endpoints securely, keeping them protected, fast, and observable. It also supports the discovery, integration, and use of these APIs by both internal and external users.
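To make the throttling idea concrete, here is a small fixed-window rate limiter in Python. It is only an illustration of the concept: in API Management itself, throttling is configured through gateway policies rather than application code, and the class name, limits, and caller IDs below are assumptions made for the example.

```python
import time


class FixedWindowRateLimiter:
    """Allow at most `max_calls` per caller within each time window."""

    def __init__(self, max_calls, window_seconds, clock=time.monotonic):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.windows = {}   # caller_id -> (window_start, call_count)

    def allow(self, caller_id):
        now = self.clock()
        start, count = self.windows.get(caller_id, (now, 0))
        # Start a fresh window once the previous one has expired.
        if now - start >= self.window_seconds:
            start, count = now, 0
        if count >= self.max_calls:
            self.windows[caller_id] = (start, count)
            return False  # a gateway would typically answer HTTP 429 here
        self.windows[caller_id] = (start, count + 1)
        return True


# Hypothetical limit: 2 calls per caller every 60 seconds.
limiter = FixedWindowRateLimiter(max_calls=2, window_seconds=60)
decisions = [limiter.allow("intranet-chatbot") for _ in range(3)]
```

Keeping a separate counter per caller means one busy chatbot cannot exhaust the quota of another consumer of the same endpoint.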

Typical Interaction Steps between Components

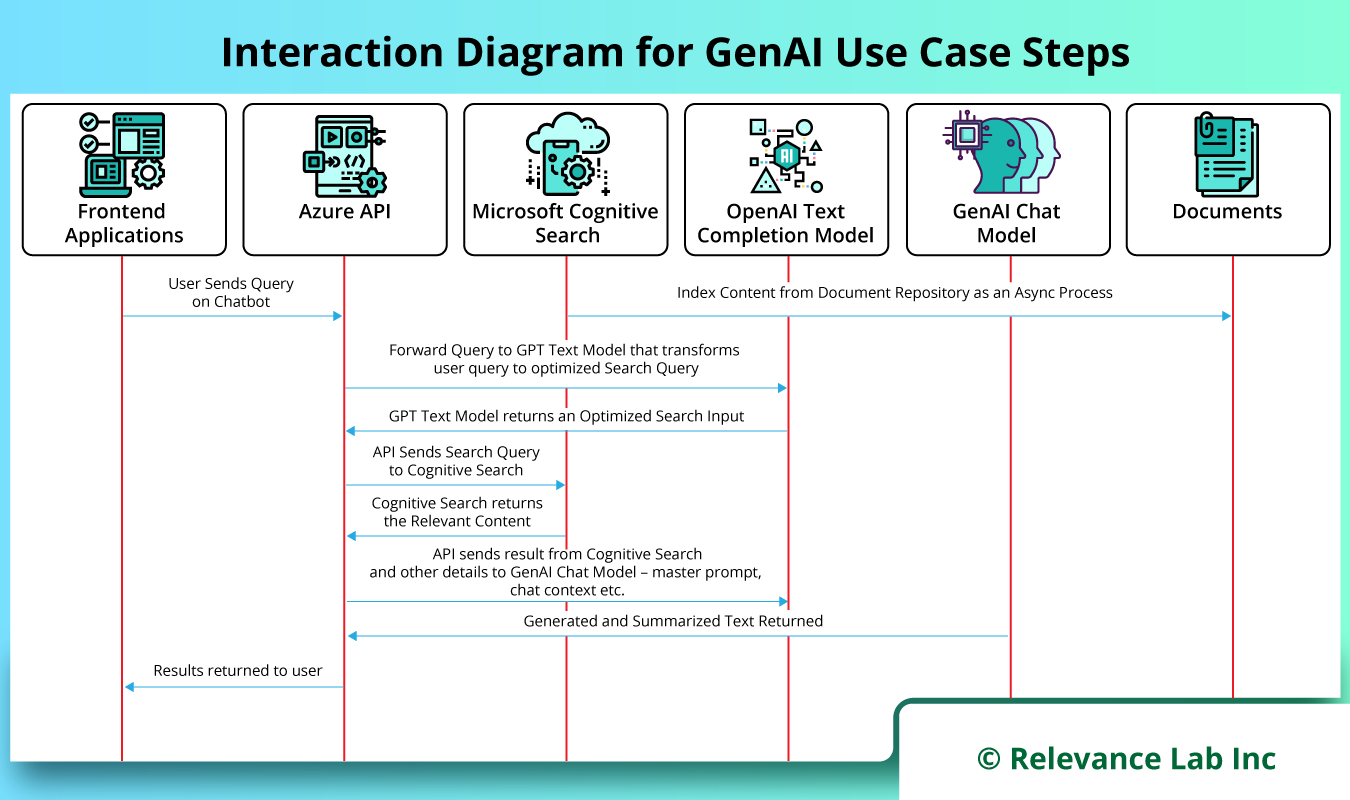

The diagram above shows the typical interaction steps between the components:

- Azure Cognitive Search indexes content from the document repository as an asynchronous process.

- Using the frontend application, the user sends a query to the Chatbot.

- The Azure API orchestration layer forwards the query to the GPT text model, which transforms it into an optimized search input.

- The GPT text model returns the optimized search input to the Azure API orchestration layer.

- The API layer sends the search query to Cognitive Search.

- Cognitive Search returns the relevant content.

- The API layer sends the Cognitive Search results, together with other details such as the prompt, chat context, and history, to GenAI for response generation.

- GenAI returns the generated and summarized content.

- The meaningful results are shared back with the user.

These interactions show that, in this architecture, the documents remain inside the secure Azure network and are managed by the search engine. This ensures that the raw content is not shared with the OpenAI layer, providing controlled governance for data security and privacy.
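The interaction steps listed above can be sketched as a small orchestration function. This is a minimal sketch, not the actual implementation: `answer_query`, `build_prompt`, `ChatSession`, and the `fake_*` stand-ins for the GPT text model and Cognitive Search are all hypothetical names, and the sample documents are invented for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ChatSession:
    history: List[str] = field(default_factory=list)


def build_prompt(user_query: str, passages: List[str]) -> str:
    """Combine the retrieved passages and the question into one grounded prompt."""
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"{sources}\n\nQuestion: {user_query}"
    )


def answer_query(user_query: str, session: ChatSession,
                 rewrite_query: Callable, search: Callable, generate: Callable,
                 top_k: int = 3) -> str:
    # The text model turns the user query into an optimized search input.
    search_input = rewrite_query(user_query, session.history)
    # The API layer queries the search index and keeps the most relevant passages.
    passages = search(search_input)[:top_k]
    # Only the retrieved passages, prompt, and history go to the generation model.
    answer = generate(build_prompt(user_query, passages), session.history)
    session.history += [f"user: {user_query}", f"assistant: {answer}"]
    return answer


# Stand-ins so the flow can run without any Azure services.
DOCS = ["Employees accrue 20 days of annual leave.",
        "Expense reports are due monthly."]


def fake_rewrite(query, history):
    words = [w.strip("?.,").lower() for w in query.split()]
    return " ".join(w for w in words if len(w) > 4)


def fake_search(search_input):
    terms = set(search_input.split())
    return [d for d in DOCS if terms & set(d.lower().rstrip(".").split())]


def fake_generate(prompt, history):
    sources = [line for line in prompt.splitlines() if line.startswith("[")]
    return f"(stub) answered from {len(sources)} source(s)"


session = ChatSession()
reply = answer_query("How much annual leave do I get?", session,
                     fake_rewrite, fake_search, fake_generate)
```

Passing the model and search calls in as functions keeps the orchestration layer independent of any one LLM or search provider, so the stubs can later be swapped for real service clients.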

Summary

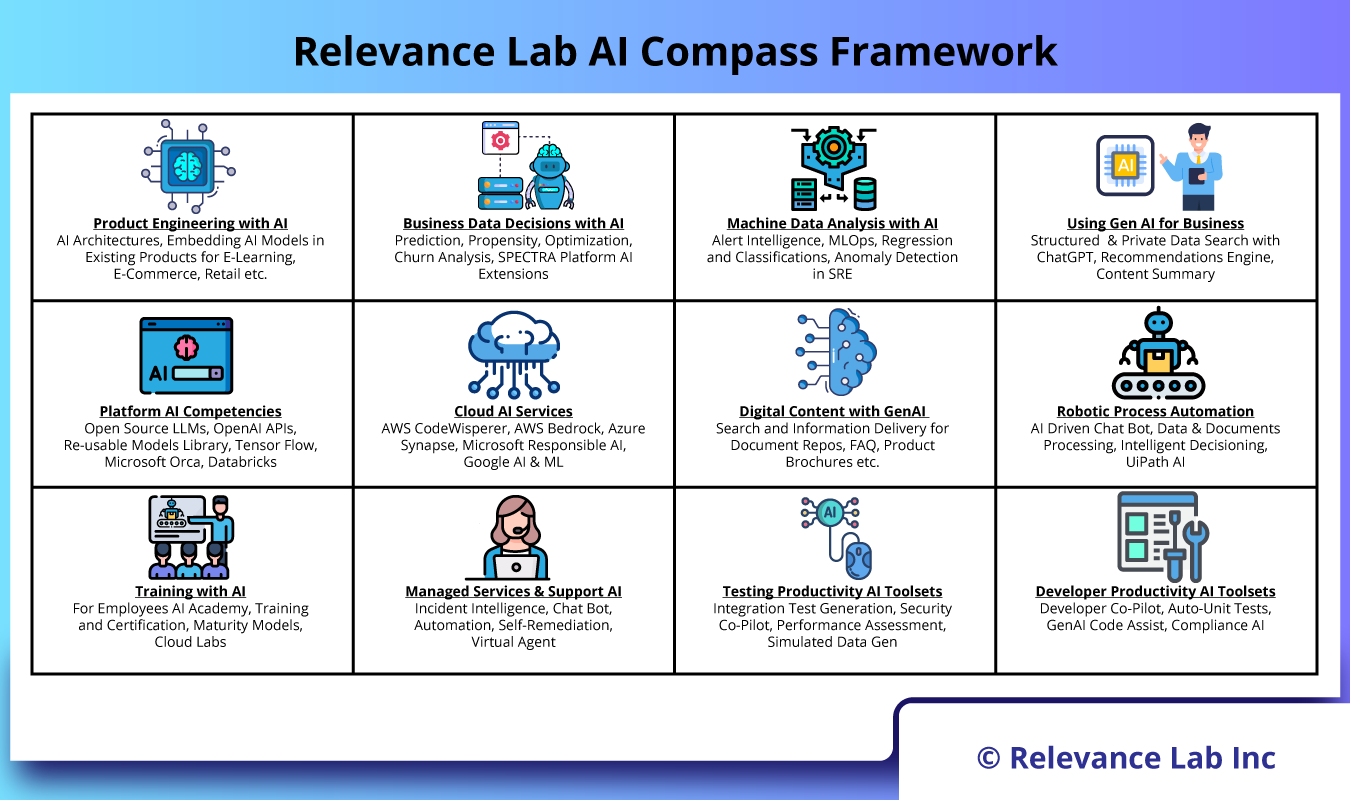

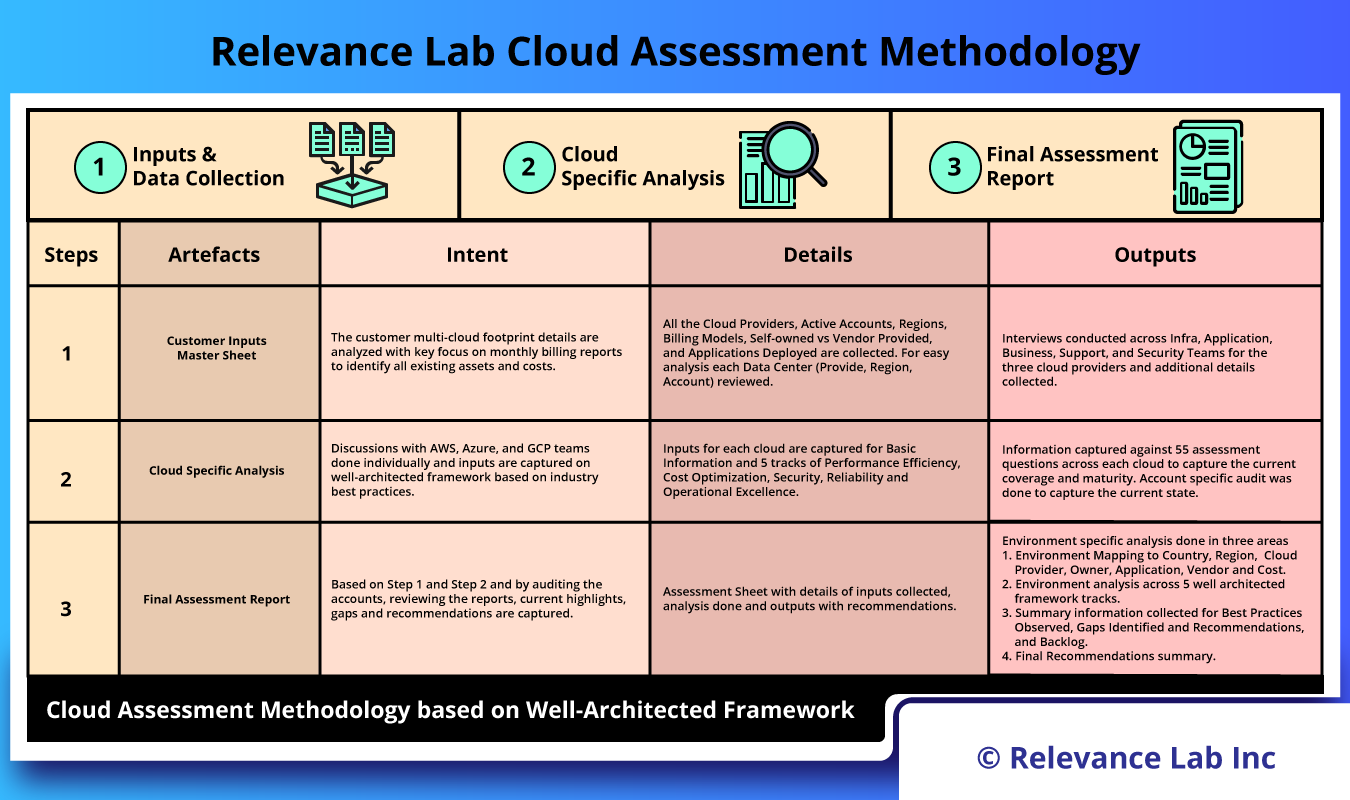

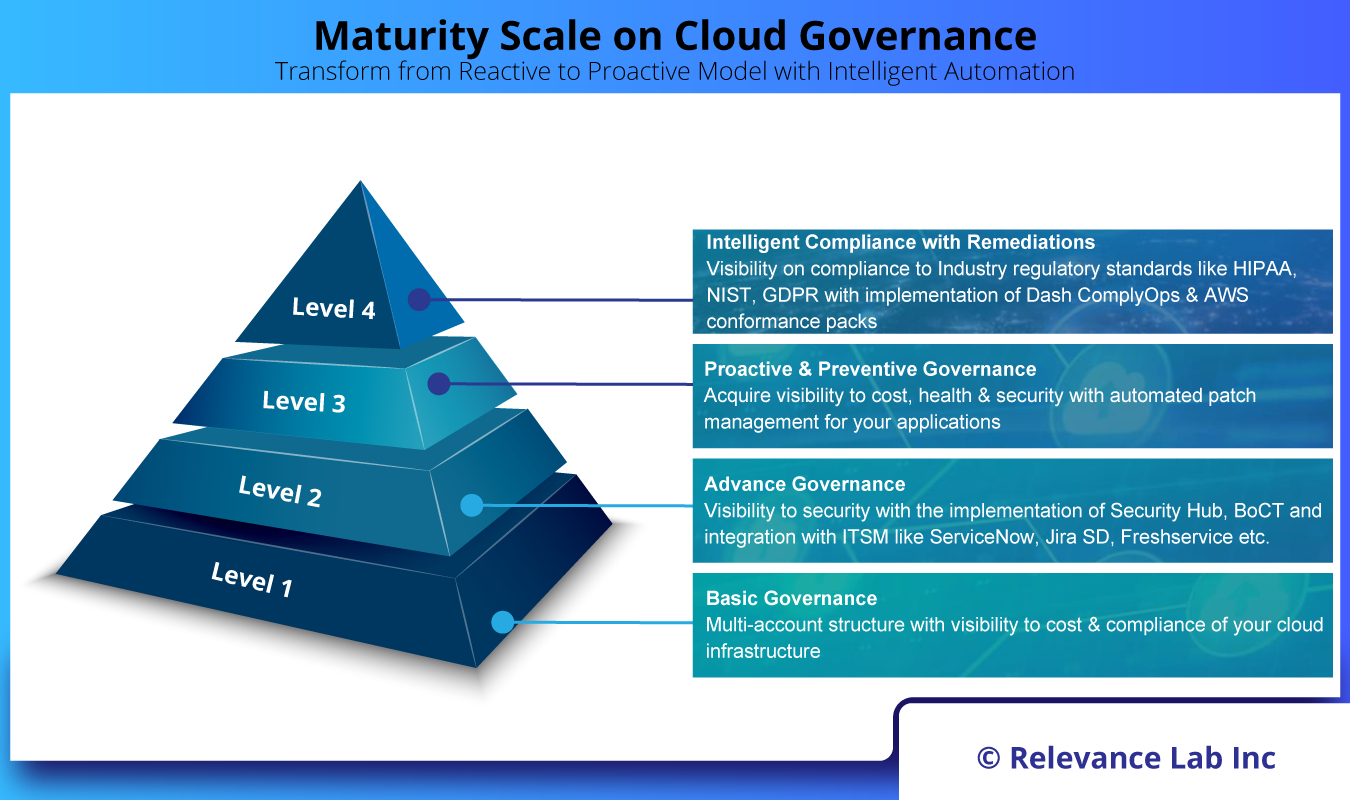

Relevance Lab is working with early customers on GenAI adoption using our AI Compass Framework. Customers’ needs vary from initial concept understanding to deployment with enterprise-grade guardrails and data privacy controls. Relevance Lab has already worked on 20+ GenAI bots across different architectures, leveraging different LLMs and cloud providers with a reusable AI Compass Orchestration solution.

To learn more about how we can help you adopt GenAI solutions “The Right Way”, write to us at marketing@relevancelab.com. For a demonstration of the solution, write to AICompass@relevancelab.com.
