Relevance Lab helps organizations build and adopt AWS best practices.

2020 Blog, Governance360, Blog, Command blog, Featured, RLAws Blogs

For large enterprises and SMBs with multiple AWS accounts, monitoring and managing those accounts is a major challenge: the accounts are managed across multiple teams and, in some organizations, number a few hundred.

AWS Control Tower helps organizations set up, manage, monitor, and govern a secure multi-account environment using AWS best practices.

Benefits of AWS Control Tower

- Automate the setup of multiple AWS environments in a few clicks, following AWS best practices

- Enforce governance and compliance using guardrails

- Centralized logging and policy management

- Simplified workflows for standardized account provisioning

- Perform security audits using AWS Identity and Access Management (IAM)

- Ability to customize the Control Tower landing zone even after initial deployment

Features of AWS Control Tower

a) AWS Control Tower automates the setup of a new landing zone, which includes:

- Creating a multi-account environment using AWS Organizations

- Identity management using AWS Single Sign-On (SSO) default directory

- Federated access to accounts using AWS SSO

- Centralized logging from AWS CloudTrail and AWS Config, stored in Amazon S3

- Enable cross-account security audits using AWS IAM and AWS SSO

b) Account Factory

- Automates the provisioning of new accounts in the organization.

- A configurable account template that helps to standardize the provisioning of new accounts with pre-approved account configurations.

c) Guardrails

- Pre-bundled governance rules for security, operations, and compliance that can be applied to Organizational Units (OUs) or to a specific group of accounts.

- Preventive Guardrails – Prevent policy violations through enforcement. Implemented using AWS CloudFormation and Service Control Policies (SCPs).

- Detective Guardrails – Detect policy violations and raise alerts in the dashboard. Implemented using AWS Config rules.
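Because preventive guardrails are built on service control policies, it may help to see what such a policy looks like. The Python sketch below builds a hypothetical deny-style SCP that stops member accounts from turning off or deleting CloudTrail trails, then checks whether an action would be explicitly denied. The statement ID and the choice of actions are illustrative assumptions, not the exact policy Control Tower deploys, and the matcher looks only at `Action` (real SCP evaluation also considers resources and conditions).

```python
from __future__ import annotations

import json
from fnmatch import fnmatchcase


def build_deny_scp(sid: str, actions: list[str]) -> dict:
    """Build a deny-list SCP document (hypothetical example policy)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": sid,
                "Effect": "Deny",
                "Action": actions,
                "Resource": "*",
            }
        ],
    }


def is_explicitly_denied(policy: dict, action: str) -> bool:
    """True if any Deny statement's Action pattern matches (IAM action names are case-insensitive)."""
    for stmt in policy["Statement"]:
        if stmt["Effect"] != "Deny":
            continue
        patterns = stmt["Action"] if isinstance(stmt["Action"], list) else [stmt["Action"]]
        if any(fnmatchcase(action.lower(), p.lower()) for p in patterns):
            return True
    return False


policy = build_deny_scp(
    "DenyCloudTrailTampering",
    ["cloudtrail:StopLogging", "cloudtrail:DeleteTrail"],
)
print(json.dumps(policy, indent=2))  # the JSON document that would be attached to an OU
print(is_explicitly_denied(policy, "cloudtrail:StopLogging"))
```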

d) Three types of guidance (applied to guardrails)

- Mandatory Guardrails – Always enforced. Enabled by default on landing zone creation.

- Strongly recommended Guardrails – Enforce best practices for well-architected, multi-account environments. Not enabled by default on landing zone creation.

- Elective Guardrails – Track or lock down actions that are commonly restricted in enterprise environments. Not enabled by default on landing zone creation.

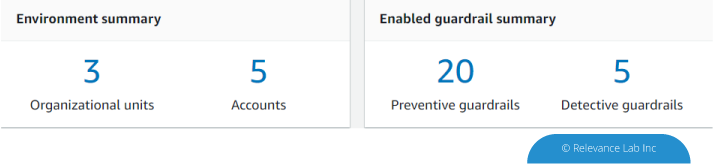

e) Dashboard

- Gives complete visibility of the AWS environment

- Shows the number of OUs (Organizational Units) and accounts provisioned

- Shows the guardrails enabled

- Lists non-compliant resources based on the guardrails enabled

f) Customizations for Control Tower

- Trigger workflows on AWS Control Tower lifecycle events, such as adding a new managed account

- Apply customizations to AWS Control Tower from user-provided configuration changes

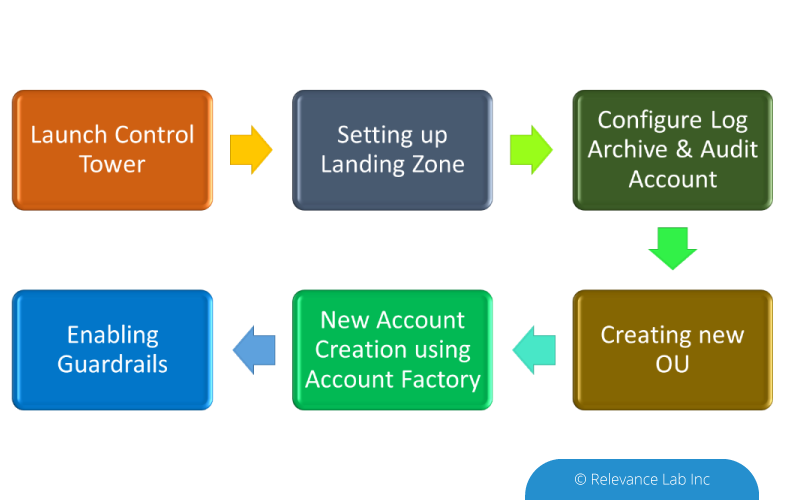

Steps to set up AWS CT

Setting up Control Tower in a new account is simpler than setting it up in an existing one. Once Control Tower is set up, the landing zone should contain the following:

- 2 Organizational Units

- 3 accounts, a master account and isolated accounts for log archive and security audit

- 20 preventive guardrails to enforce policies

- 2 detective guardrails to detect config violations

Steps to customize AWS CT

Control Tower can be customized using AWS CloudFormation templates at the OU and account levels, and service control policies (SCPs) at the OU level. The setup for enabling CT customizations is itself provided as an AWS CloudFormation template, which creates an AWS CodePipeline pipeline, AWS CodeBuild projects, AWS Step Functions, AWS Lambda functions, an Amazon EventBridge rule, an Amazon SQS queue, and an Amazon S3 bucket or AWS CodeCommit repository to hold the custom resource package file.

Once the setup is done, AWS CT can be customized as follows:

- Upload a custom package file to Amazon S3 or AWS CodeCommit repository

- The upload triggers the AWS CodePipeline workflow and the corresponding CI/CD pipelines for SCPs and CloudFormation StackSets that implement the customizations

- Alternatively, when a new account is added, a Control Tower lifecycle event triggers the AWS CodePipeline workflow via Amazon EventBridge, Amazon SQS, and AWS Lambda
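The lifecycle-event path can be sketched as a small Lambda-style handler. This is a minimal sketch, assuming the `CreateManagedAccount` event shape that Control Tower publishes through EventBridge (fields under `detail.serviceEventDetails.createManagedAccountStatus`); the SQS hop is left out for brevity, `EVENT_PATTERN` shows what a matching EventBridge rule might filter on, and `start_pipeline` is a hypothetical hook that, in a real function, might call boto3's `codepipeline.start_pipeline_execution(name=...)`.

```python
from __future__ import annotations

from typing import Callable, Optional

# EventBridge rule pattern for Control Tower account-creation events
# (assumes the documented CreateManagedAccount lifecycle event fields).
EVENT_PATTERN = {
    "source": ["aws.controltower"],
    "detail-type": ["AWS Service Event via CloudTrail"],
    "detail": {"eventName": ["CreateManagedAccount"]},
}


def extract_new_account(event: dict) -> Optional[dict]:
    """Return account and OU details if the event reports a successful account creation."""
    detail = event.get("detail", {})
    if detail.get("eventName") != "CreateManagedAccount":
        return None
    status = detail.get("serviceEventDetails", {}).get("createManagedAccountStatus", {})
    if status.get("state") != "SUCCEEDED":
        return None  # ignore failed or in-progress creations
    return {
        "account_id": status["account"]["accountId"],
        "account_name": status["account"]["accountName"],
        "ou_name": status["organizationalUnit"]["organizationalUnitName"],
    }


def handle_lifecycle_event(event: dict, start_pipeline: Callable[[dict], None]) -> bool:
    """Kick off the customization pipeline for a newly created account.

    start_pipeline is a hypothetical hook supplied by the caller.
    """
    account = extract_new_account(event)
    if account is None:
        return False
    start_pipeline(account)
    return True


# Trimmed example event; IDs and names are made up.
sample_event = {
    "source": "aws.controltower",
    "detail-type": "AWS Service Event via CloudTrail",
    "detail": {
        "eventName": "CreateManagedAccount",
        "serviceEventDetails": {
            "createManagedAccountStatus": {
                "state": "SUCCEEDED",
                "account": {"accountName": "LifeCycle1", "accountId": "111122223333"},
                "organizationalUnit": {
                    "organizationalUnitName": "Custom",
                    "organizationalUnitId": "ou-abcd-12345678",
                },
            }
        },
    },
}

handle_lifecycle_event(sample_event, lambda acct: print("start pipeline for", acct["account_id"]))
```

Returning `False` for anything other than a successful account creation keeps the handler safe to attach to a broader rule.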

The next step is to create a new Organizational Unit, create a new account using Account Factory, and map the account to that OU. Once this is done, you can start setting up your resources; any non-compliance with the enabled guardrails appears in the Noncompliant resources section of the dashboard, as does any deviation from standard AWS best practices.

Conclusion

With many organizations adopting AWS cloud services, AWS Control Tower, with its centralized management and its ability to customize the initially deployed configuration, offers the simplest way to set up and securely govern multiple AWS accounts on an ongoing basis, using established best practices. Provisioning a new AWS account is as simple as clicking a few buttons while agreeing to the organization’s requirements and policies. Relevance Lab can help your organization build out AWS Control Tower and migrate your existing accounts to it.

For a demo of Control Tower in your organization, or for more details, please feel free to reach out to marketing@relevancelab.com

2020 News, News

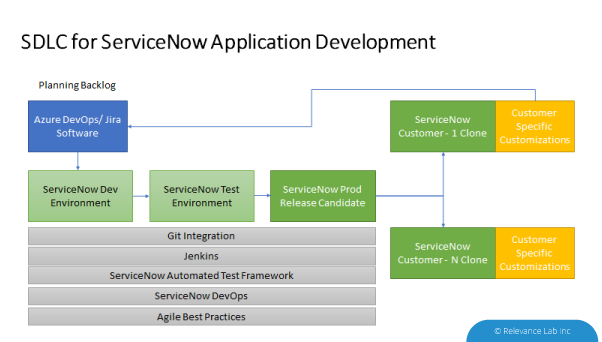

Using Git configuration management integration in application development to achieve higher velocity and quality when releasing value-added features and products.

2020 Blog, Blog, DevOps Blog, Featured, ServiceOne, ServiceNow

ServiceNow offers a fantastic platform for developing applications. Infrastructure, security, application management, scaling, and so on are all handled by ServiceNow, so application developers can concentrate on their core competencies within their application domain. However, companies that develop applications on ServiceNow and distribute them to multiple customers face several challenges. In this article, we look at some of these challenges and their solutions.

A typical ServiceNow customization or application is distributed with several of the following elements:

- Update Sets

- Template changes

- Data Migration

- Role creation

- Script changes

Distribution of an application is typically done via an Update Set, which captures all the delta changes on top of a well-known baseline. This baseline could be the base version of a specific ServiceNow release (like Orlando or Madrid) plus a specific patch level for that release. To understand the intricacies of distributing an application, we first have to understand the difference between a global application and a scoped application.

Typically, only applications developed by ServiceNow are in the global scope. However, before the Application Scoping feature was released, custom applications also resided in the global scope. Other applications can access a global application: they can read its data, make API requests, and change its configuration records.

Scoped applications, which are now the default, are uniquely identified, along with their associated artifacts, by a namespace identifier. No other application can access their data, configuration records, or API unless specifically allowed by the application administrator.

The first challenge, therefore, is global data dependencies: distributing an application through update sets is easy when the application has a private scope, because there are no global data dependencies to deal with.

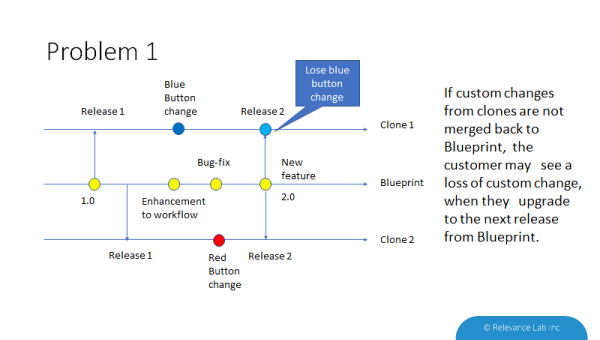

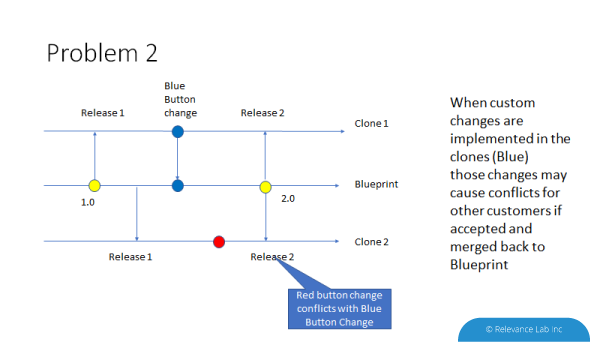

The second challenge concerns customizations made after an application has been distributed. Consider the following scenario:

- An application release has been distributed (let’s call it 1.0).

- Customer-1 needs a customization in the application (say, a blue button added to Form-1). Customer-1 now has 1.0 plus the blue-button change.

- Customer-2 needs a different customization (say, a red button added to Form-1).

- The application developer has also made other changes to the application and plans to release version 2.0.

Problem-1: If application 2.0 is released and Customer-1 upgrades to that release, they lose the blue-button changes. They have to redo the blue-button change and retest.

Problem-2: If the developer accepts the blue-button change into the application and releases 2.0 with it, then when Customer-2 upgrades to 2.0, their red-button change conflicts with the blue-button change.

These two problems can be solved with version control using Git. When the application developers want to accept the blue-button change into the 2.0 release, they can use Git's merge feature to merge the blue-button commit from Customer-1's repository into their own repository.

When Customer-2 needs to upgrade to version 2.0, they can use Git's stash feature to set aside their red-button changes before the upgrade. After the upgrade, they apply the stashed changes to bring the red-button change back into their instance, resolving any conflicts Git reports at that point.
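Why the red-button change can collide with 2.0 is easiest to see with a three-way merge, which compares each side against their common base. The toy Python function below assumes every version has the same number of lines and compares them position by position; Git's real merge also handles insertions and deletions, but the conflict rule is the same idea: a line changed differently on both sides needs manual resolution, whether the change comes back through `git merge` or `git stash pop`. The Form-1 line contents are hypothetical.

```python
from __future__ import annotations


def three_way_merge(base: list[str], ours: list[str], theirs: list[str]) -> tuple[list[str], list[int]]:
    """Toy position-by-position three-way merge.

    Assumes all versions have the same number of lines (no inserts/deletes).
    Returns the merged lines and the indexes of conflicting lines.
    """
    if not (len(base) == len(ours) == len(theirs)):
        raise ValueError("toy merge requires versions of equal length")
    merged: list[str] = []
    conflicts: list[int] = []
    for i, (b, o, t) in enumerate(zip(base, ours, theirs)):
        if o == t:        # unchanged, or changed identically on both sides
            merged.append(o)
        elif o == b:      # only 'theirs' changed this line
            merged.append(t)
        elif t == b:      # only 'ours' changed this line
            merged.append(o)
        else:             # both sides changed the same line differently
            merged.append(f"<<<<<<< {o} ||||||| {b} ======= {t} >>>>>>>")
            conflicts.append(i)
    return merged, conflicts


# Hypothetical contents of Form-1 in each version.
v1 = ["title: Form-1", "button: none", "footer"]
v2 = ["title: Form-1 (2.0)", "button: blue", "footer"]  # 2.0 includes the blue button
customer1 = ["title: Form-1", "button: blue", "footer"]
customer2 = ["title: Form-1", "button: red", "footer"]

print(three_way_merge(v1, customer1, v2))  # Customer-1: clean merge, no conflicts
print(three_way_merge(v1, customer2, v2))  # Customer-2: conflict at index 1
```

Customer-1 upgrades cleanly because 2.0 already contains the identical blue-button line; Customer-2's red button touches the same line, so it must be resolved by hand.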

The ServiceNow source control integration allows application developers to integrate with a Git repository to save and manage multiple versions of an application from a non-production instance.

With DevOps and version-control best practices using Git, it is much easier to deliver software applications to multiple customers while handling the complexity of customized versions. To learn more about ServiceNow application best practices and DevOps, feel free to contact marketing@relevancelab.com

Optimizing the network connectivity model using AWS VPC and related services.

2020 Blog, Blog

The industry has witnessed a remarkable evolution in cloud, virtualization, and networking over the last decade. Looking ahead, enterprises should structure their cloud, including account creation and cloud management, provide for VPC (Virtual Private Cloud) scalability, and optimize the network connectivity model for their clouds.

Relevance Lab focuses on optimizing the network connectivity model using AWS VPC and related services. We are one of AWS's premium partners, with extensive experience in cloud engineering, including account management, AWS VPC and services, VPC peering, and on-premises connectivity. In this blog, we cover connectivity in more depth.

As an initial step, we help our customers optimize account management, including policies, billing, and account structure, and automate account and policy creation with pre-built templates. In doing so, we help them leverage AWS Control Tower and its landing zone.

Distributed Cloud Network and Scalability Challenges

Because AWS cloud infrastructure and applications are distributed across the globe, including North America, South America, Europe, China, Asia Pacific, South Africa, and the Middle East, scalability issues are to be expected. We therefore apply the following best practices when designing the cloud network:

- Allocate VPC address ranges for each region, and make sure they do not overlap with on-premises networks or with other VPCs connected through VPC peering.

- A subnet's size cannot be changed after it is created, so leave ample room for future growth.

- Create an EC2 instance growth chart that considers your organization's, customers', and partners' requirements and their global distribution, and forecast for the next few years.

- Design within the route scalability (route table limits) supported by AWS.
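The first two practices can be checked mechanically before any VPC is created. The sketch below uses Python's standard `ipaddress` module on a hypothetical address plan (the region names and CIDR blocks are assumptions): it reports overlapping ranges and carves subnets out of a VPC, subtracting the five addresses AWS reserves in every subnet.

```python
from __future__ import annotations

import ipaddress

# AWS reserves the first four and the last IP address in every subnet.
AWS_RESERVED_PER_SUBNET = 5


def find_overlaps(plan: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named ranges whose CIDR blocks overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    names = sorted(nets)
    return [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if nets[a].overlaps(nets[b])
    ]


def plan_subnets(vpc_cidr: str, new_prefix: int, count: int) -> list[tuple[str, int]]:
    """Carve `count` equal subnets from a VPC and report usable addresses in each."""
    candidates = list(ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix))
    if count > len(candidates):
        raise ValueError(f"{vpc_cidr} only holds {len(candidates)} /{new_prefix} subnets")
    return [(str(s), s.num_addresses - AWS_RESERVED_PER_SUBNET) for s in candidates[:count]]


# Hypothetical per-region address blocks (VPCs of up to /16 are carved from these).
address_plan = {
    "on-prem": "10.0.0.0/12",
    "us-east-1": "10.16.0.0/12",
    "eu-west-1": "10.32.0.0/12",
    "ap-south-1": "10.40.0.0/13",  # deliberately overlaps eu-west-1
}

print(find_overlaps(address_plan))          # [('ap-south-1', 'eu-west-1')]
print(plan_subnets("10.16.0.0/16", 20, 3))  # three /20 subnets, 4091 usable addresses each
```

Sizing subnets generously (a /20 rather than a /24, for example) is the cheap way to honor the "leave ample room" rule, since the subnet cannot be resized later.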

The AWS backbone provides private connectivity to AWS services: traffic does not leave the backbone, which avoids the need to set up a NAT gateway to reach those services. This brings enhanced security and cost-effectiveness.

Amazon S3 and Amazon DynamoDB are the services supported behind gateway endpoints, which can be provisioned at no additional charge.

VPC peering is the simplest secure way to connect two VPCs, but it is not transitive: one VPC cannot carry traffic for other VPCs. Enterprises therefore need a very high number of peering connections, and that number grows quickly as the VPC count scales. Hence, we suggest the VPC peering connectivity model only if the number of VPCs is limited to 10.
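The 10-VPC guideline follows from simple arithmetic. Because peering is not transitive, a full mesh of n VPCs needs n(n-1)/2 peering connections, whereas a hub-and-spoke design (such as a transit gateway) needs roughly one attachment per VPC. A short Python sketch, with illustrative function names:

```python
def full_mesh_peerings(n_vpcs: int) -> int:
    """Peering is non-transitive, so every pair of VPCs needs its own connection."""
    return n_vpcs * (n_vpcs - 1) // 2


def hub_and_spoke_attachments(n_vpcs: int) -> int:
    """With a central hub, each VPC needs a single attachment."""
    return n_vpcs


for n in (5, 10, 50, 100):
    print(f"{n:>3} VPCs: {full_mesh_peerings(n):>5} peerings vs {hub_and_spoke_attachments(n):>3} hub attachments")
```

At 10 VPCs a full mesh already needs 45 peering connections; at 100 VPCs it needs 4,950, against 100 hub attachments.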

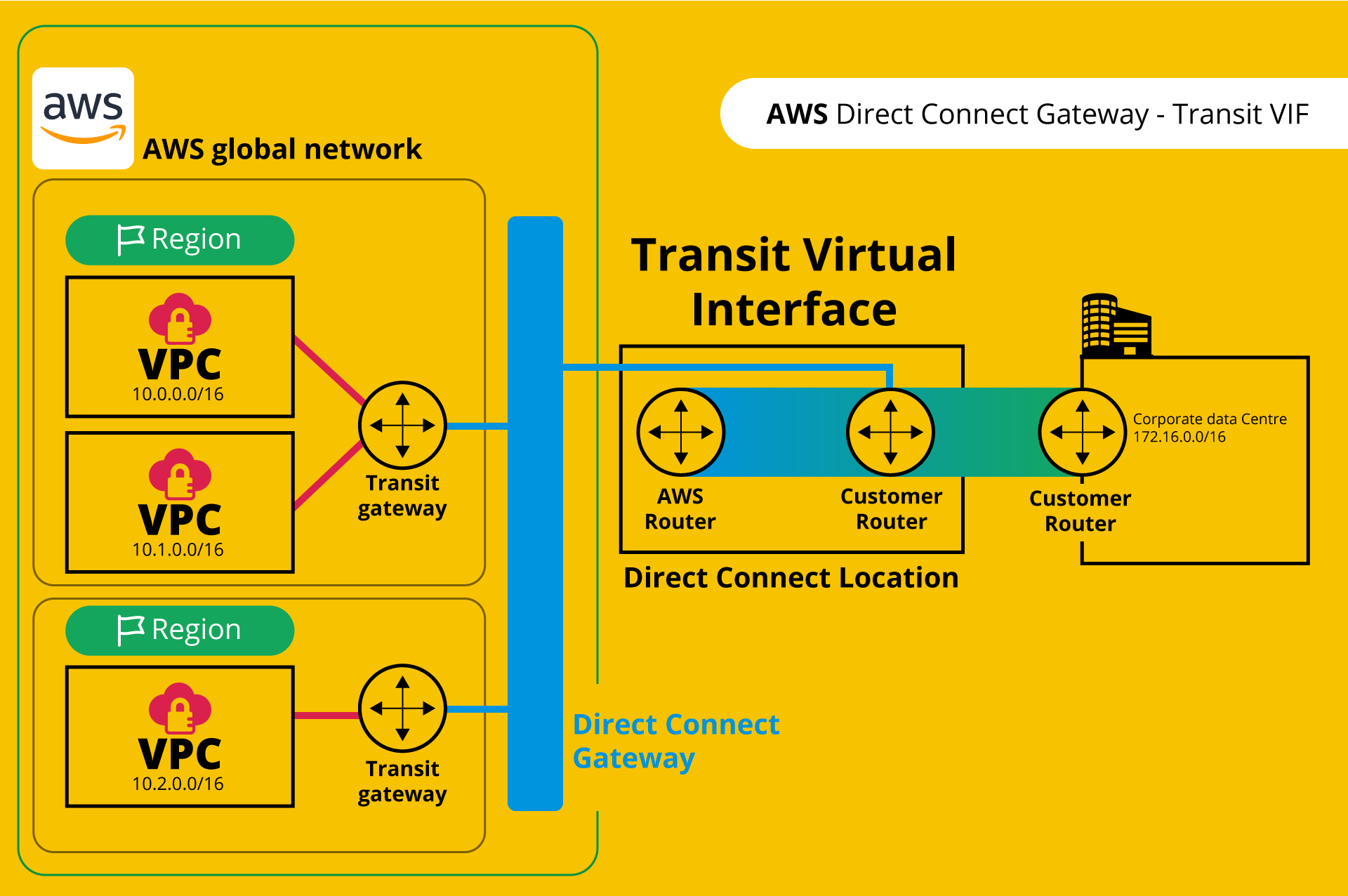

On-premises connectivity models for cloud – Our Approach

The traditional connectivity model, a site-to-site VPN between a VGW (Virtual Private Gateway) and a customer gateway, provides lower bandwidth, is less elastic, and cannot scale.

A Direct Connect gateway may be used to aggregate VPCs across geographies. Relevance Lab recommends introducing a transit gateway (TGW) between the VGW and the Direct Connect gateway. This provides a hub-and-spoke design for connecting VPCs to on-premises data centers or enterprise offices. A TGW can aggregate thousands of VPCs within a region, and it can work across AWS accounts. If VPCs across multiple geographies are to be connected, a TGW can be placed in each region. This option allows you to connect the on-premises data center to up to three TGWs spanning regions, each of which can aggregate thousands of VPCs.

This model connects the VPCs and the customer data center through a colocation facility and provides up to 40 Gbps of bandwidth through link aggregation.
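A transit gateway forwards traffic by looking up the destination in its route table and choosing the most specific (longest-prefix) matching route. The toy Python model below illustrates that lookup for a hub with two VPC attachments and a summary route toward on-premises; the CIDRs and attachment names are hypothetical, and real TGW route tables also support propagated routes and blackhole routes, which are omitted here.

```python
from __future__ import annotations

import ipaddress
from typing import Optional


class TransitRouteTable:
    """Toy transit gateway route table: static routes, longest-prefix match."""

    def __init__(self) -> None:
        self._routes: list[tuple[ipaddress.IPv4Network, str]] = []

    def add_route(self, cidr: str, attachment: str) -> None:
        self._routes.append((ipaddress.IPv4Network(cidr), attachment))

    def lookup(self, destination: str) -> Optional[str]:
        """Return the attachment for the most specific route containing the destination."""
        addr = ipaddress.IPv4Address(destination)
        matches = [(net, att) for net, att in self._routes if addr in net]
        if not matches:
            return None  # no route: traffic would be dropped
        return max(matches, key=lambda m: m[0].prefixlen)[1]


tgw = TransitRouteTable()
tgw.add_route("10.16.0.0/16", "vpc-app-a")        # hypothetical VPC attachment
tgw.add_route("10.17.0.0/16", "vpc-app-b")        # hypothetical VPC attachment
tgw.add_route("10.0.0.0/8", "dx-gateway-onprem")  # summary route to the data center

print(tgw.lookup("10.16.4.20"))  # vpc-app-a (the /16 beats the /8)
print(tgw.lookup("10.3.1.1"))    # dx-gateway-onprem
```

Because every VPC attaches to the same hub, adding a VPC means adding one attachment and one route rather than a new peering connection to every existing VPC.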

Relevance Lab is evaluating the latest features and helping global customers migrate to this distributed cloud model, which provides high scalability and bandwidth along with cost optimization and security.

For more information, feel free to contact marketing@relevancelab.com

Overview of Relevance Lab’s social distancing policies for the post-COVID-19 workplace.

2020 Blog, Blog, Featured

Office Social Distancing Compliance in the New Normal

With the ongoing coronavirus pandemic, businesses around the globe are adjusting their work practices to the new normal. One of the critical requirements is the practice of social distancing with employees being asked to mostly work from home and limit their visits to the office. To comply with newly issued corporate (and even government) guidelines around social distancing, offices around the world are faced with the need to monitor, control, and restrict the number of employees attending office premises on a given day. The thresholds are typically defined as a percentage of maximum office space/floor capacity to ensure sufficient room for social distancing.
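As a worked example of such a threshold, a floor with 200 seats and a 30% limit admits 200 × 30 / 100 = 60 people a day. The Python sketch below, with hypothetical function names, rounds down so the limit is never exceeded and checks whether a new request still fits.

```python
def daily_seat_limit(floor_capacity: int, threshold_pct: int) -> int:
    """Maximum attendees per day; integer floor division rounds down."""
    if floor_capacity < 0 or not 0 <= threshold_pct <= 100:
        raise ValueError("capacity must be >= 0 and threshold between 0 and 100")
    return floor_capacity * threshold_pct // 100


def fits_within_limit(already_approved: int, requested: int, limit: int) -> bool:
    """True if approving `requested` more people keeps the day at or under the limit."""
    return already_approved + requested <= limit


limit = daily_seat_limit(200, 30)
print(limit)                            # 60
print(fits_within_limit(55, 5, limit))  # True
print(fits_within_limit(58, 5, limit))  # False
```

Rounding down matters for odd capacities: 33% of a 120-seat floor is 39.6, which becomes a limit of 39.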

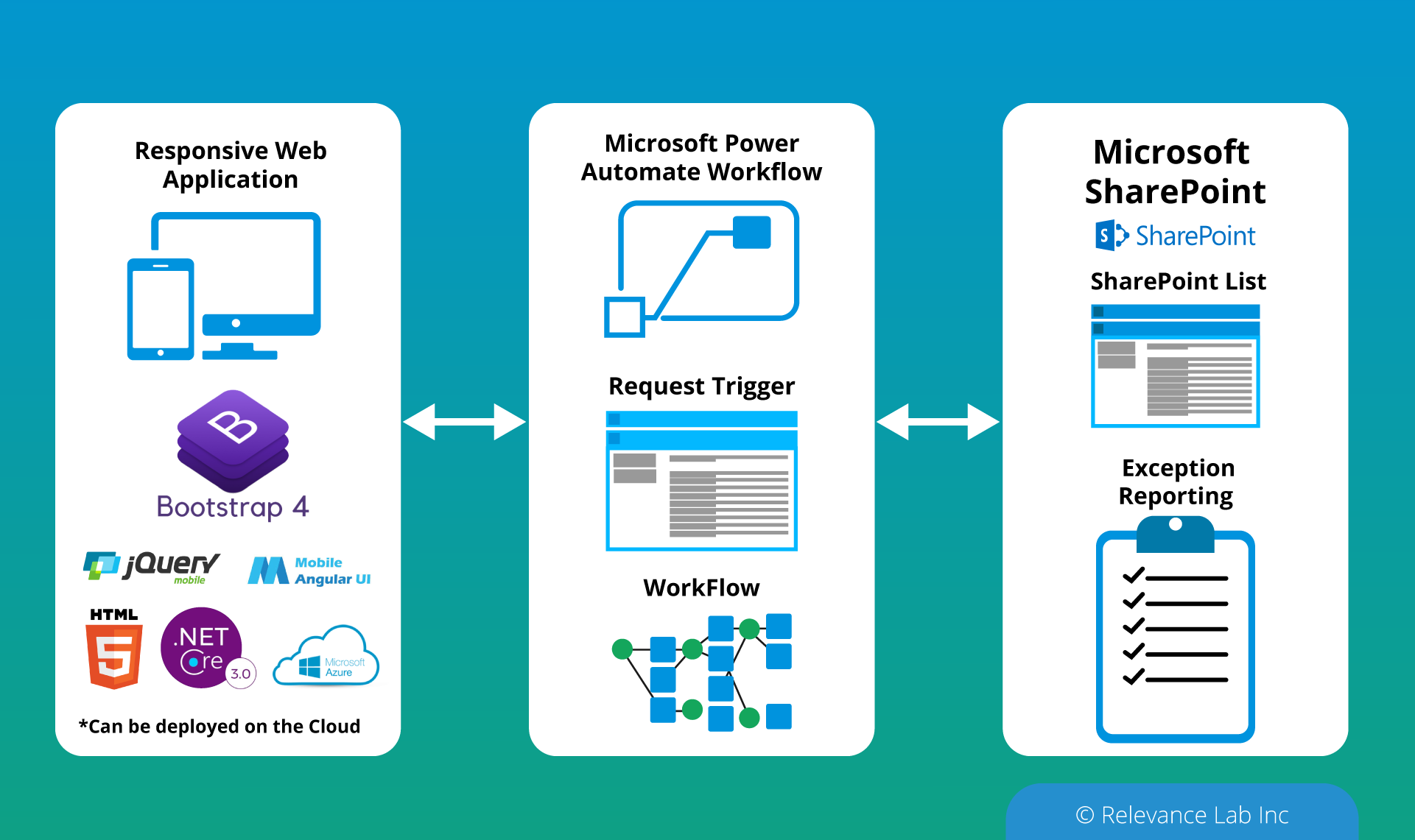

Office Attendance Control System

In response to these client needs, we implemented an Office Attendance Control System. It provides request/approval workflows with multi-level approvals, along with monitoring and reporting dashboards for employee attendance, attendance thresholds, and compliance. Employees can raise requests for themselves or for a group of team members, covering multiple date ranges. The system is implemented as a responsive web application, with email used as the tool to approve or reject requests.

The solution is scalable and cloud-based, and can be deployed rapidly for companies with a local or global presence and offices around the world.
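The multi-level approval flow can be modeled as a small state machine. In the Python sketch below, which is hypothetical and not the system's actual code, a request covers one or more attendees and date ranges, and each approver in a fixed chain must approve in order; a single rejection ends the request. In a deployed system, the `decide` call might be driven by the approve/reject links in the email.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class AttendanceRequest:
    """Hypothetical office-attendance request with an ordered approval chain."""

    requester: str
    attendees: list[str]                  # self, or a group of team members
    date_ranges: list[tuple[date, date]]  # inclusive (start, end) pairs
    approvers: list[str]                  # e.g. manager, then facilities
    approvals: list[str] = field(default_factory=list)
    status: str = "PENDING"

    def __post_init__(self) -> None:
        if not self.approvers:
            raise ValueError("at least one approver is required")

    def requested_days(self) -> list[date]:
        """Expand every inclusive date range into individual days."""
        days = []
        for start, end in self.date_ranges:
            day = start
            while day <= end:
                days.append(day)
                day += timedelta(days=1)
        return days

    def decide(self, approver: str, approve: bool) -> str:
        """Record one approver's decision; approvals must follow the chain order."""
        if self.status != "PENDING":
            raise ValueError(f"request already {self.status.lower()}")
        expected = self.approvers[len(self.approvals)]
        if approver != expected:
            raise ValueError(f"waiting on {expected}, not {approver}")
        if not approve:
            self.status = "REJECTED"
        else:
            self.approvals.append(approver)
            if len(self.approvals) == len(self.approvers):
                self.status = "APPROVED"
        return self.status


req = AttendanceRequest(
    requester="asha",
    attendees=["asha", "ravi"],
    date_ranges=[(date(2020, 6, 1), date(2020, 6, 3)), (date(2020, 6, 8), date(2020, 6, 8))],
    approvers=["manager", "facilities"],
)
print(len(req.requested_days()))       # 4
print(req.decide("manager", True))     # PENDING (still needs facilities)
print(req.decide("facilities", True))  # APPROVED
```

Keeping the chain ordered means each email only needs to reach the next approver once the previous one has approved.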

Sample Architecture

Conclusion

The coronavirus has altered “what is normal” (perhaps permanently, in a few cases), requiring companies and solution providers to implement innovative solutions to function effectively under the new circumstances. Relevance Lab’s implementation of the “Office Attendance Control Solution” helps companies with global offices effectively implement social distancing practices in the office environment.

If you are interested, please reach out to us so that we can help meet your critical business needs.

About Relevance Lab

Relevance Lab is a platform-enabled new-age IT services company driving friction out of IT and Business Operations.

Relevance Lab works with reusable technology assets and proven expertise in DevOps, Cloud, Automation, Service Delivery, and Supply Chain Analytics to help global organizations achieve frictionless business by transforming their traditional infrastructure, applications, and data. In a changing landscape of technologies and consumer preferences, Relevance Lab enables global organizations to:

- Adopt “asset lite” growth models by leveraging cloud (IaaS, PaaS, SaaS)

- Shift CAPEX to OPEX

- Automate to improve efficiency and reduce costs

- Build an end-to-end ecosystem connecting digital products to backend ERP platforms

- Use agile analytics (our Spectra platform) to provide real-time business insights and improve customer experiences

Relevance Lab has invested in a unique IP-based DevOps Automation platform called RL Catalyst. Enterprises can leverage the RL Catalyst Command Center and BOTs Engine, with its library of 100+ existing BOTs, to manage IT operations and ensure business service availability through monitoring, diagnostics, and auto-remediation. Leveraging its critical partnerships with ServiceNow, Chef, HashiCorp, Oracle Cloud, AWS, Azure, GCP, and others, Relevance Lab's unique combination of platforms and services offers a hybrid value proposition to large enterprises and technology companies. Our specialization helps clients adopt cloud computing, digital solutions, and big data analytics to remove friction, increase business velocity, and achieve scale with lower annual budget spend.

Incorporated in 2011 and headquartered in the USA, Relevance Lab has 450+ specialized professionals spread across its offices in India, USA and Canada.

Listed among “Best Companies to Work for in 2019” – Silicon India magazine, May 2019

Listed among “20 most promising DevOps companies in 2019” – CIOReviewIndia magazine, May 2019

Open Source DevOps Vendor Chef Launches Its First Channel Program.