Site Reliability Engineering (SRE) is a critical need for large enterprises with global deployments across multiple clouds, regions, and applications. A platform approach that combines Observability, Service Management, and Actionable Operations speeds up SRE rollout for an enterprise. Relevance Lab offers a mature platform that can save 6-12 months of effort and guarantee success.

2024 Blog, Blog, Featured

With growing complexity across Cloud Infrastructure, Application deployments, and Data pipelines, large enterprises have a critical need for effective Site Reliability Engineering (SRE) solutions. Although this need is common, many companies attempt to solve the problem through multiple siloed efforts. The following are some common problems we have observed:

- A Data Center-oriented approach to SRE adoption that focuses separately on layers such as Storage, Compute, Network, Apps, and Databases. This approach creates silos, with different teams working on separate tracks.

- Significant focus on reactive Observability models, with multiple tools and overlapping monitoring coverage across on-prem and cloud systems. This creates alert fatigue, false alarms, long diagnostic times, and slow recovery cycles.

- Long planning and analysis cycles spent defining how to get started on an “SRE Transformation Program”, involving multiple groups, approaches, and discovery cycles.

- Lack of organizational clarity on who should drive the SRE program across Infra, Apps, DevOps, Data, and Service Delivery groups.

- No defined maturity roadmap, and no clear plan for leveraging Cloud, Automation, and AIOps to roll out SRE programs at scale across the enterprise.

- Federated IT and Business Unit models with shared responsibility across global operations, and the challenge of balancing standardization against self-service flexibility.

- Missing visibility into current problems and faults affecting end users (slow response times, surprise outages, unpredictable performance) and into real-time Business Performance Metrics (SLAs).

- Lack of mature Critical Incident Management and Incident Intelligence.

- Custom, one-off solutions that cannot be turned into a common framework and scaled across different units.

- A need for Machine Learning Observability, including data collection and alerting, and monitoring of data growth, data drift, and consumption.

- Lack of visibility into platform costs across businesses, regions, and projects.

As cloud adoption grows, these issues have been amplified, along with concerns about cost and security, and the absence of mature SRE models is slowing down digital transformation efforts.

To fix these issues in a prescriptive manner, Relevance Lab has worked with some large customers to evolve a “Platform Centric” model for SRE adoption. It leverages common tools and open-source technologies to speed up SRE implementation, saving significant time, cost, and effort. With a rapid deployment model, the rollout can be carried out across a global enterprise using automation-driven templates.

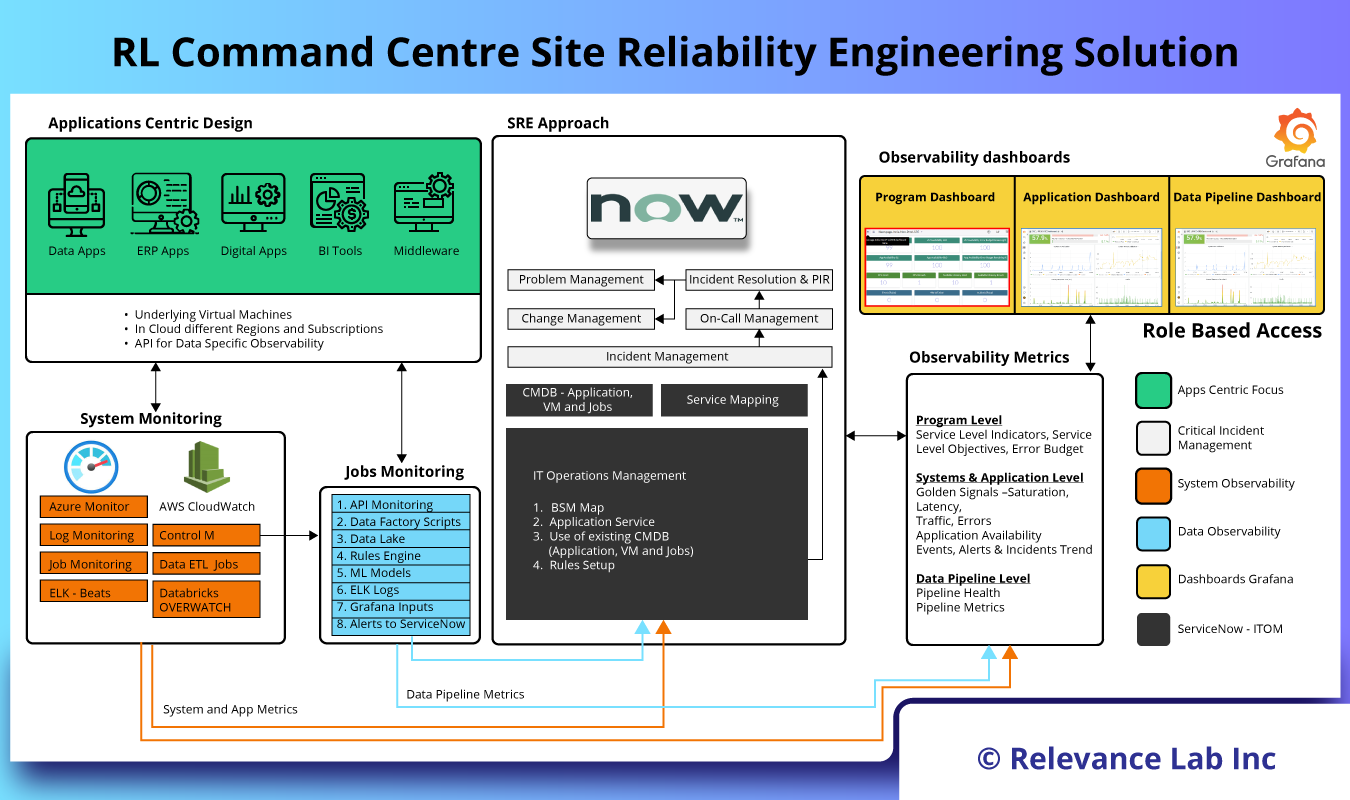

The figure below explains the Command Centre SRE Platform from Relevance Lab.

Building Blocks of the SRE Platform

- Application Centric Design

- The first step toward a mature SRE implementation is an application-centric view aligned to business services. Using platforms like ServiceNow, we can build relationships, or service maps, between infrastructure and application services. This is crucial during an outage because it helps identify the root cause.

- Once all assets are identified, they are segregated by application type, tagged with their business services, and managed centrally.

- Monitoring

- The next step is to enable monitoring sensors for all business-critical systems. How sensors are enabled varies with the type of resource:

- Systems Monitoring: This typically covers infrastructure and network monitoring, and can be enabled using native cloud services such as AWS CloudWatch and Azure Monitor, or third-party tools such as SolarWinds and Zabbix.

- Application and Log Monitoring: Application monitoring covers both performance monitoring and log monitoring. It can likewise be achieved with cloud-native tools such as AWS X-Ray and Azure Application Insights, or third-party tools such as AppDynamics, ELK, and Splunk.

- Job Monitoring: Scheduled jobs are monitored with tools such as New Relic, Dynatrace, and Control-M.

- SRE Approach with Event Management

- Once the monitoring sensors are enabled, they generate a large volume of alerts, much of which is noise, including false alarms and duplicates. Relevance Lab algorithms perform de-duplication, aggregation, and correlation of these alerts, thereby reducing alert fatigue.

- Golden Signals: At this stage, the four golden signals (latency, traffic, errors, and saturation) are defined and configured to detect abnormalities. By integrating these signals with standard Incident Management and Problem Management processes and ITSM platforms, application stability and reliability mature over time.

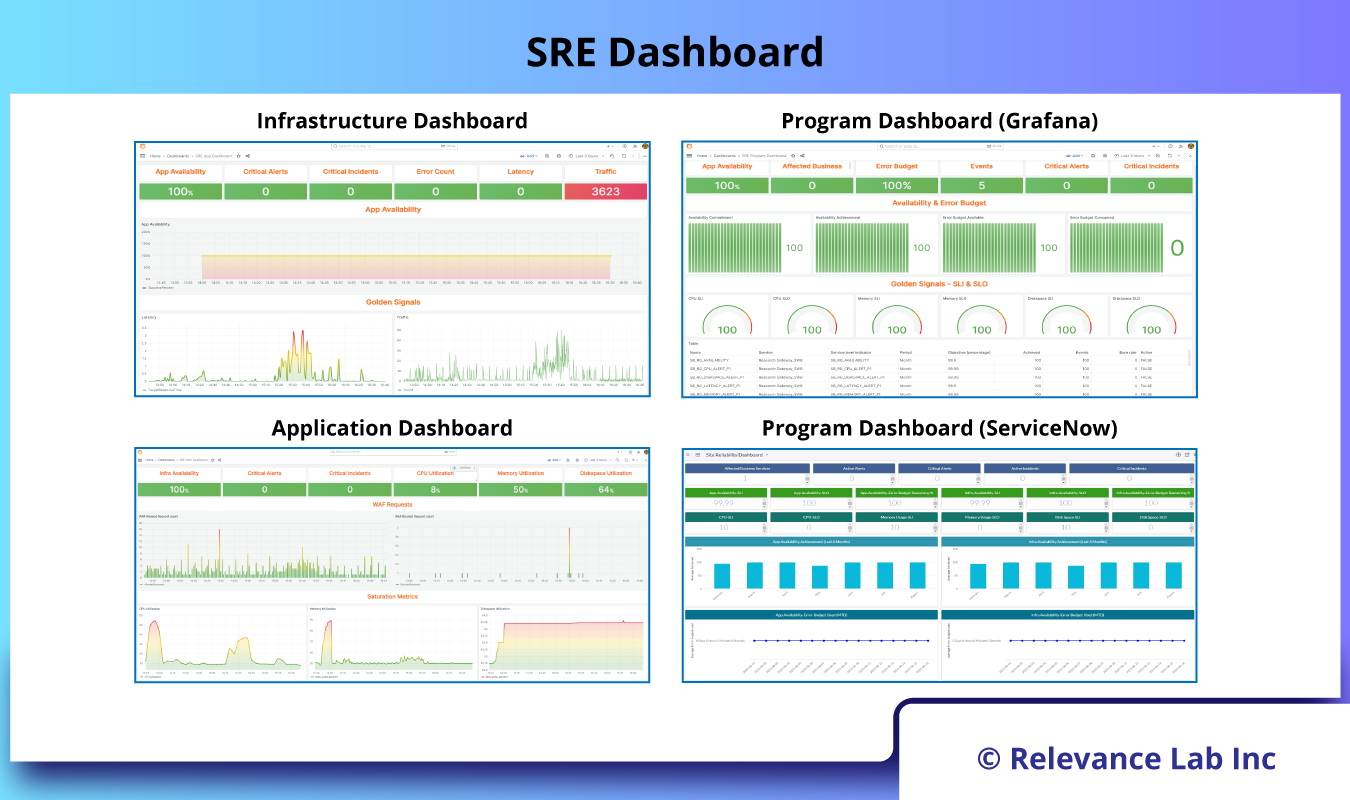

- Observability Dashboards: A single-pane-of-glass view across your environment gives you visibility into your business apps. Relevance Lab’s SRE implementation includes the following dashboards as standard, out of the box:

- Infrastructure Dashboard

- Application Dashboard

- Program Dashboard (Grafana)

- Program Dashboard (ServiceNow)
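To make the Application Centric Design step concrete, here is a minimal Python sketch of why a service map helps during an outage: given a failed infrastructure item, it walks the dependency graph up to every application and business service that relies on it. It is an illustration under stated assumptions, not how ServiceNow stores service maps; the `DEPENDS_ON` dictionary, the CI names, and the `BUSINESS_SERVICES` tags are all hypothetical.

```python
from collections import defaultdict, deque

# Hypothetical service map: each key depends on the items in its list.
DEPENDS_ON = {
    "Order Portal": ["checkout-api", "web-frontend"],
    "checkout-api": ["orders-db", "vm-app-01"],
    "web-frontend": ["vm-web-01"],
    "Reporting": ["orders-db"],
}

# Hypothetical business-service tags applied after asset discovery.
BUSINESS_SERVICES = {"Order Portal", "Reporting"}


def impacted_by(failed_ci: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every node that depends on failed_ci, directly or transitively."""
    # Invert the edges so we can walk from a dependency up to its dependents.
    dependents = defaultdict(list)
    for node, deps in depends_on.items():
        for dep in deps:
            dependents[dep].append(node)

    seen: set[str] = set()
    queue = deque([failed_ci])
    while queue:  # breadth-first walk up the dependency graph
        current = queue.popleft()
        for parent in dependents[current]:
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


def impacted_business_services(failed_ci: str) -> list[str]:
    """Business services affected by a failed configuration item."""
    return sorted(impacted_by(failed_ci, DEPENDS_ON) & BUSINESS_SERVICES)


if __name__ == "__main__":
    print(impacted_business_services("orders-db"))  # ['Order Portal', 'Reporting']
```

Read in the other direction, the same map supports root-cause analysis: when several business services alert at once, the infrastructure items they share are the first candidates to investigate.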
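The Event Management step can be illustrated with a generic Python sketch. Relevance Lab's own algorithms are not described in this post, so this is only a simple stand-in: alerts are de-duplicated by a hypothetical (host, check) fingerprint within a suppression window, the survivors are aggregated per host, and the four golden signals are computed for one time window and compared against limits. The `Alert` fields, the 300-second window, treating HTTP 5xx responses as errors, and the `LIMITS` values are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    host: str
    check: str        # e.g. "cpu_high", "disk_full"
    source: str       # monitoring tool that raised it
    timestamp: float  # seconds since epoch


def deduplicate(alerts: list[Alert], window_s: float = 300.0) -> list[Alert]:
    """Drop repeats of the same (host, check) within window_s of the last kept alert.

    The fingerprint ignores the source, so the same fault reported by two
    overlapping tools collapses into one alert.
    """
    last_kept: dict[tuple[str, str], float] = {}
    kept: list[Alert] = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        key = (alert.host, alert.check)
        prev = last_kept.get(key)
        if prev is None or alert.timestamp - prev > window_s:
            kept.append(alert)
            last_kept[key] = alert.timestamp
    return kept


def aggregate_by_host(alerts: list[Alert]) -> dict[str, list[str]]:
    """Group alerts per host so related checks can be raised as one incident."""
    groups: dict[str, list[str]] = {}
    for alert in alerts:
        groups.setdefault(alert.host, []).append(alert.check)
    return groups


# Hypothetical limits; real values would come from each service's SLOs.
# Traffic has no limit here and is reported for context only.
LIMITS = {"latency_p95_ms": 500.0, "error_rate": 0.01, "saturation": 0.8}


def golden_signals(latencies_ms: list[float], statuses: list[int],
                   window_s: float, cpu_util: float) -> dict[str, float]:
    """Latency (p95), traffic, errors, and saturation for one time window."""
    n = len(latencies_ms)
    ordered = sorted(latencies_ms)
    rank = (95 * n + 99) // 100  # nearest-rank p95 using integer math
    return {
        "latency_p95_ms": ordered[rank - 1] if n else 0.0,
        "traffic_rps": n / window_s,
        "error_rate": sum(s >= 500 for s in statuses) / len(statuses) if statuses else 0.0,
        "saturation": cpu_util,  # e.g. CPU utilisation (0..1) from systems monitoring
    }


def abnormal(signals: dict[str, float], limits: dict[str, float] = LIMITS) -> list[str]:
    """Names of signals above their limit, sorted alphabetically."""
    return sorted(name for name, limit in limits.items() if signals[name] > limit)
```

Grouping by host is the crudest form of correlation; a production correlator would typically also use the service map, so that alerts from a database and the applications depending on it land in a single incident.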

The figure below shows the SRE Dashboard in detail.

How can new customers benefit from our SRE Platform?

In today’s fast-paced and technology-driven world, organizations need robust and efficient IT operations to stay ahead of the competition. Relevance Lab’s SRE solution provides the necessary tools and frameworks to unlock operational excellence, ensuring high availability, scalability, and reliability of critical business systems. With our SRE solution, organizations can focus on innovation and growth, confident in the knowledge that their IT infrastructure is well-managed and optimized for exceptional performance.

Summary

Relevance Lab is a specialist in SRE implementation and helps organizations achieve reliability and stability through SRE execution. While enterprises can try to build some of these solutions themselves, doing so is time-consuming and error-prone, and calls for a specialist partner. We realize that each large enterprise has a different context-culture-constraint model covering organization structures, team skills/maturity, technology, and processes. Hence, the right model for any organization has to be created collaboratively, with Relevance Lab acting as an advisor to Plan, Build, and Run the SRE model.

For more details, please feel free to reach out to marketing@relevancelab.com


Our goal, at Relevance Lab (RL), is to make scientific research in the cloud ridiculously simple for researchers and principal investigators. We address the needs of public sector Research institutions, Healthcare, Higher Education and Academic Medical Centers with Research Gateway. Our solution allows customers to run secure, cost-effective, and scalable research on the public clouds.



Our goal, at Relevance Lab (RL), is to make scientific research in the cloud ridiculously simple for researchers and principal investigators. Cloud is driving major advancements in both the Healthcare and Higher Education sectors. As organizations across these sectors, in both commercial and public segments, rapidly adopt it, research on the cloud is improving day-to-day lives through drug discoveries, healthcare breakthroughs, sustainable innovations, smart and safe cities, and more.

Powering these innovations, the public cloud provides infrastructure with more accessible and useful research-specific products that speed time to insights. Customers get more secure and frictionless collaboration capabilities across large datasets. However, setting up and getting started with complex research workloads can be time-consuming, and researchers often look for simple and efficient ways to run their workloads.

RL addresses this issue with Research Gateway, a self-service cloud portal that allows customers to run secure and scalable research on the public clouds without heavy lifting for setup. In this blog, we explore different use cases in which customers simplify their workloads and accelerate their outcomes with Research Gateway. We also elaborate on specific case studies from the healthcare and higher education sectors for the adoption of the Research Gateway Software as a Service (SaaS) model.

Who Needs Scientific Research in the Cloud?

The entire scientific community is trying to speed up research for better human lives. While scientists want to focus on “science” and not “infrastructure”, it is not always easy to get a collaborative, secure, self-service, cost-effective, and on-demand research environment. Most customers have traditionally used on-premise infrastructure for research, where scaling up is always constrained by limited resources. The following are some common challenges we have heard from our customers:

- We are seeing tremendous growth in research data and cannot manage it with our existing on-premise storage.

- Even after securing grants, our ability to start new research programs is severely limited by the lack of scale in our existing setups.

- We have tried the cloud, but, especially for High Performance Computing (HPC) systems, we are not confident enough about total spend and budget controls to adopt it.

- We have ordered additional servers, but for months, we have been waiting for the hardware to be delivered.

- We can easily try new cloud accounts, but bringing together Large Datasets, Big Compute, Analytics Tools, and Orchestration workflows is a complex effort.

- We have built on-premise systems for research with Slurm, Singularity Containers, Cromwell/Nextflow, and custom pipelines, and do not have the bandwidth to migrate to the cloud with updated tools and architecture.

- We want to give researchers their own ephemeral research tools and environments with budget controls, but do not know how to leverage the cloud.

- We are scaling up online classrooms and training labs for a large set of students but do not know how to build secure and cost-effective self-service environments like on-premise training labs.

- We require a data portal for sharing research data across multiple institutions, with the right governance and controls, on the cloud.

- We need the ability to run Genomics Secondary Analysis at scale for multiple domains, such as bacterial research and precision medicine, with cost-effective per-sample runs and without worrying about tools, infrastructure, software, and ongoing support.

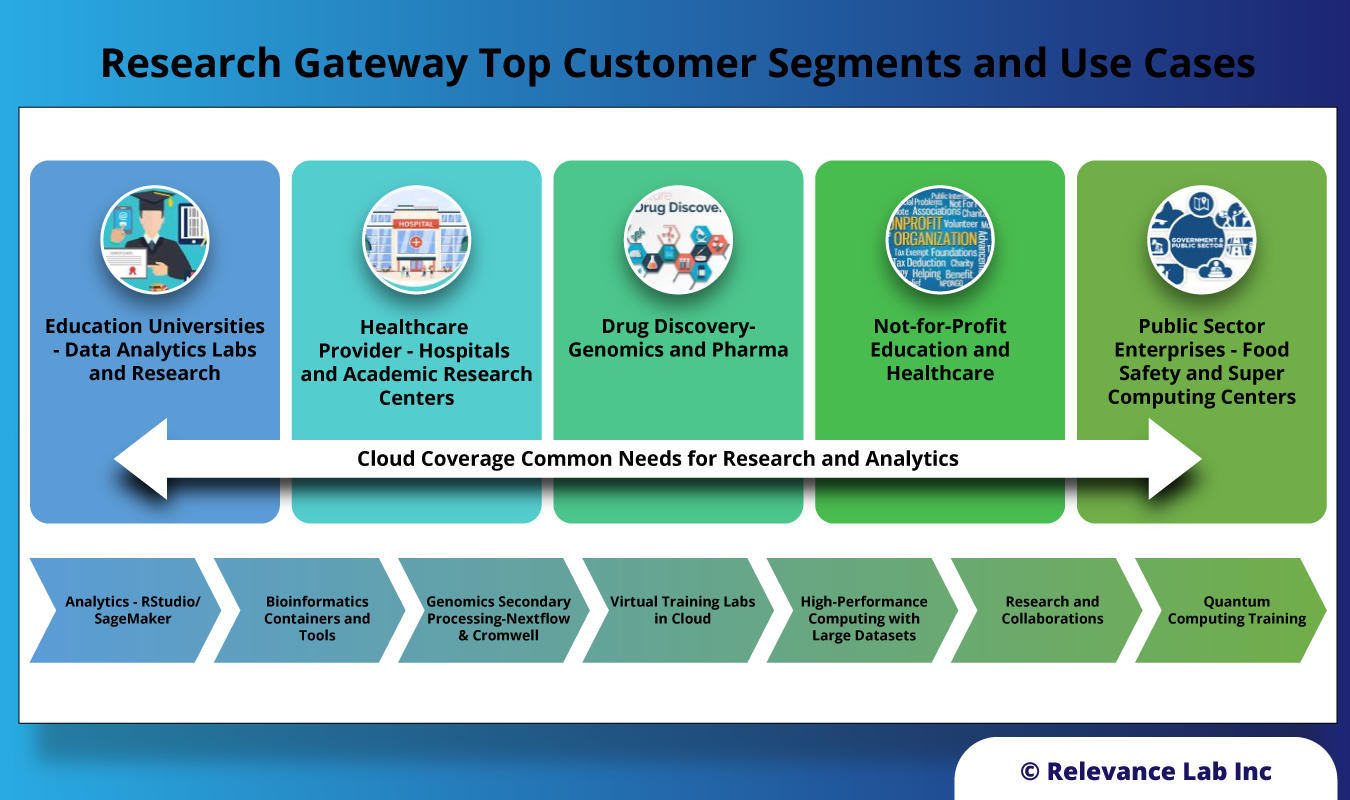

With these common needs in mind, Research Gateway is solving problems for the following key customer segments:

- Education Universities

- Primarily for Data Analytics and Research

- Healthcare Providers

- Hospitals and Academic Medical Centers for Genomics Research

- Drug Discovery Companies

- Especially in Genomics, Precision Medicine, and Vaccine companies

- Not-for-Profit Organizations

- Primarily across health, education, and policy research

- Public Sector Organizations

- Looking into Food Safety, National Supercomputing centers, etc.

The primary solutions these customers are seeking from Research Gateway are listed below:

- Analytics Workbench with tools like RStudio and SageMaker

- Bioinformatics Containers and Tools from the standard catalog, or bring your own tools

- Genomics Secondary Analysis in Cloud with 1-Click models using open source orchestration engines like Nextflow, Cromwell and specialized tools like DRAGEN, Parabricks, and Sentieon

- Virtual Training Labs in Cloud

- High Performance Computing Infrastructure with specialized tools and large datasets

- Research and Collaboration Portal

- Training and Learning Quantum Computing

The figure below shows the customer segments and their top use cases.

How Research Gateway Is Powering Frictionless Outcomes

Research Gateway allows researchers to conduct just-in-time research with 1-click access to research-specific products, provision pipelines in a few steps, and take control of their budget. This accelerates discoveries and enables a modern study environment with projects and virtual classrooms.

Case Study 1: Accelerating Virtual Cloud Labs for the Bioinformatics Department of a Singapore-based Higher Education University

During discussions with the RL team, the university’s bioinformatics department highlighted the following needs:

Classroom Needs: The primary use case was to enable student classrooms and groups for learning Analytics, Genomics Workloads, and Docker-based tools.

Research Needs: The solution is also used by a small group of researchers pursuing higher degrees in the bioinformatics space.

Addressing the Virtual Classroom and Research Needs with Research Gateway

The SaaS model of Research Gateway is used with a hub-and-spoke architecture that allows customers to configure their own AWS accounts for projects to control users, products, and budgets seamlessly.

The primary solution includes:

- Professors set up classrooms and assign students for projects based on semester needs

- Usage of basic tools like RStudio, EC2 with Docker, MySQL, and SageMaker

- A special request, port forwarding to connect a local RStudio IDE to shared data in the cloud, was also successfully implemented

- End-of-day automated reports to students and professors on servers that are “still running”, for cost optimization

- Ability to create multiple projects in a single AWS Account + Region for flexibility

- Ability to assign and enforce student-level budget controls to avoid overspending
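The end-of-day “still running” reports and student-level budget controls in the list above can be sketched in Python as two small checks over a list of workspace records. The `Workspace` record, its fields, and the 80% warning threshold are hypothetical; a real deployment would read instance state and spend from the cloud account rather than from an in-memory list, and this is not Research Gateway's implementation.

```python
from dataclasses import dataclass


@dataclass
class Workspace:
    owner: str         # student or professor
    project: str
    state: str         # "running" or "stopped"
    spend_usd: float   # spend so far in the budget period
    budget_usd: float  # budget assigned to this owner for the project


def still_running_report(workspaces: list[Workspace]) -> dict[str, list[str]]:
    """Owner -> projects with a workspace still running, for the end-of-day email."""
    report: dict[str, list[str]] = {}
    for ws in workspaces:
        if ws.state == "running":
            report.setdefault(ws.owner, []).append(ws.project)
    return report


def budget_action(ws: Workspace, warn_at: float = 0.8) -> str:
    """'stop' at or over budget, 'warn' from warn_at of budget, else 'ok'."""
    used = ws.spend_usd / ws.budget_usd
    if used >= 1.0:
        return "stop"
    if used >= warn_at:
        return "warn"
    return "ok"
```

Acting on a "stop" result (pausing the instance through the cloud provider's API) is deliberately left out, since that call depends on the provider and account setup.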

Case Study 2: Driving Genomics Processing for Cancer Research of an Australian Academic Medical Center

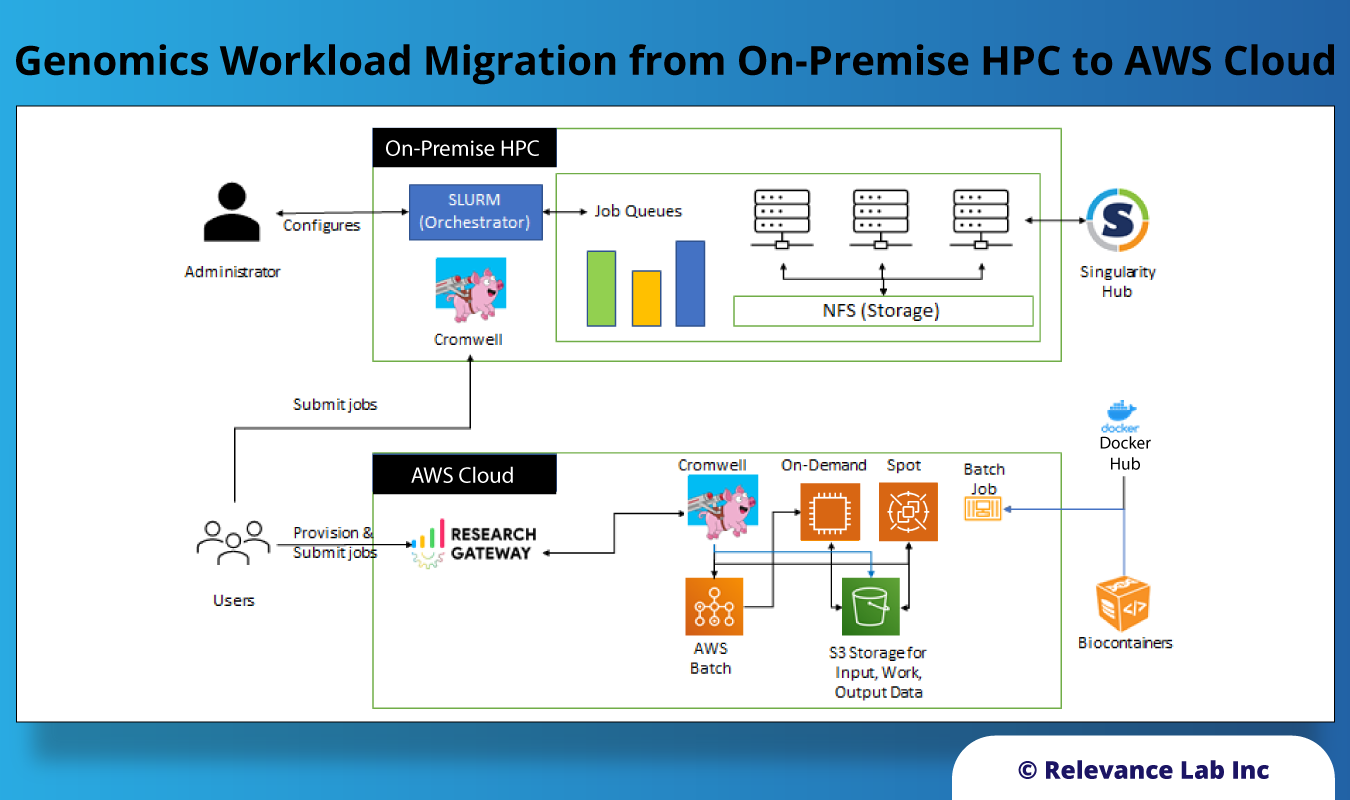

While the existing research infrastructure is on-premise due to security and privacy needs, the team faces serious challenges with growing data and the influx of new genomics samples to be processed at scale. A team of researchers is leading the evaluation of AWS Cloud to solve these scale issues and drive faster research in the cloud with built-in security and governance guardrails.

Addressing Genomic Research Cloud Needs with Research Gateway

RL addressed the hospital’s genomics workload migration needs with the Research Gateway SaaS model, using the hub-and-spoke architecture that gives the customer exclusive access to their data and research infrastructure by bringing their own AWS account. The software is deployed in the Sydney region to comply with in-country data norms required by governance standards. Users can easily configure AWS accounts for genomics workload projects. They also get 1-click access to genomics research products, along with seamless budget tracking and pausing.

The following primary solution patterns were delivered:

- Migration of existing HPC system using Slurm Workload Manager and Singularity Containers

- Using Cromwell for Large Scale Genomic Samples Processing

- Using complex pipelines with a mix of custom and public WDL pipelines like RNA-Seq

- Large Sample and Reference Datasets

- AWS Batch HPC leveraged for cost-effective and scalable computing

- Specific Data and Security needs met with country-level data safeguards & compliance

- Large set of custom tools and packages

The workload currently runs in an on-premise HPC environment, using Slurm as the orchestrator and Singularity containers. The migration involves converting the Singularity containers to Docker containers so that they can be used with AWS Batch. The pipelines are based on Cromwell, a leading workflow orchestrator from the Broad Institute. The following picture shows the existing on-premise system and contrasts it with the target cloud-based system.

Case Study 3: Secure Research Environments for a US-based Academic Medical Centre

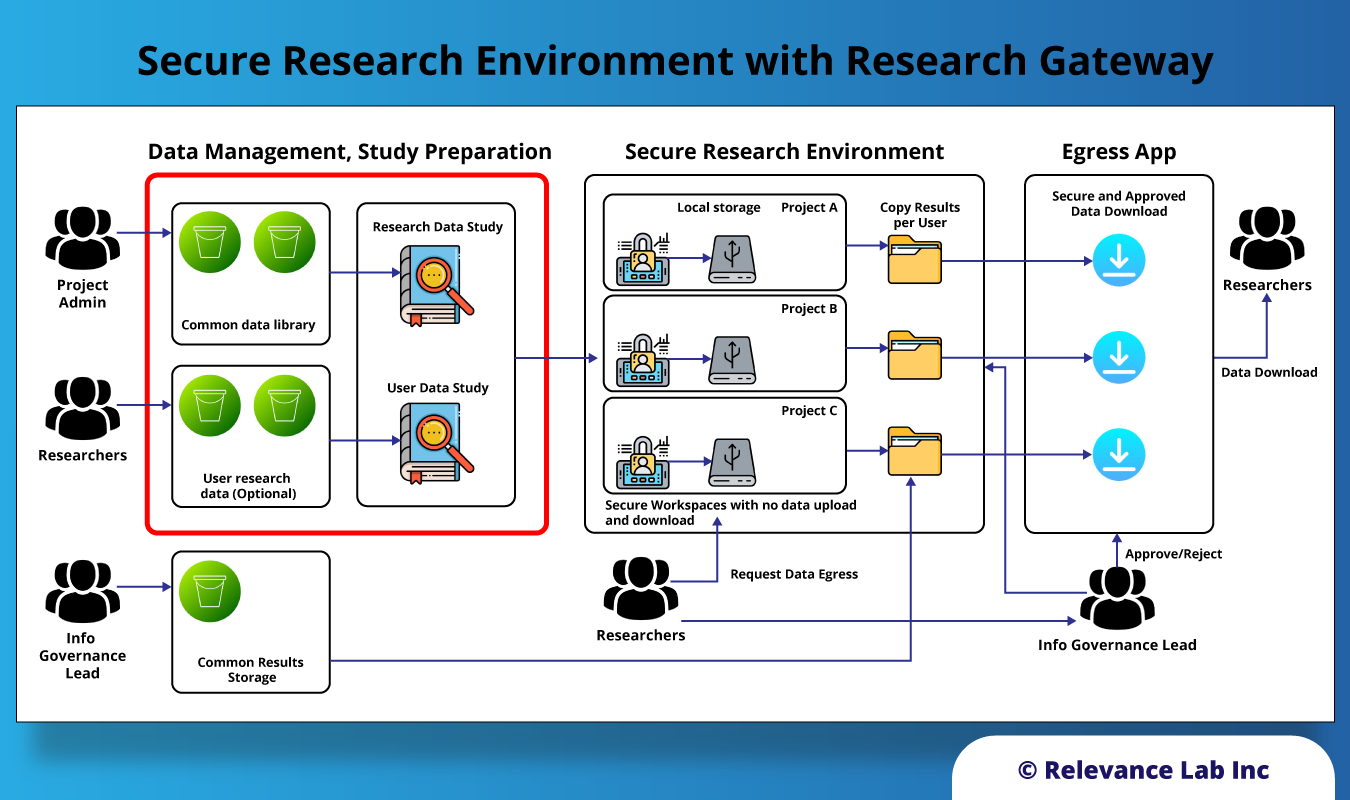

Secure Research Environments (SRE) provide researchers with timely and secure access to sensitive research data, computation systems, and common analytics tools, speeding up scientific research in the cloud. Researchers are given access to approved data, enabling them to collaborate, analyze data, and share results within proper controls and audit trails. Research Gateway provides this secure data platform, with analytical and orchestration tools to support researchers in their work. Their results can then be exported safely, with proper workflows for submission reviews and approvals.

Addressing Secure Research Needs for Sensitive Data with Ingress/Egress Controls

RL addressed the SRE needs of a US-based Academic Medical Centre for HIPAA-compliant research in its Health Sciences group. The solution has the following key building blocks:

- Data Ingress/Egress

- Researcher Workflows & Collaborations with Cost Controls

- Ongoing Researcher Tool Updates

- Software Patching & Security Upgrades

- Healthcare (or other sensitive) Data Compliance

- Security Monitoring, Audit Trail, Budget Controls, User Access & Management

The figure below shows the implementation of the SRE solution with Research Gateway.

Conclusion

Relevance Lab, in partnership with public cloud providers, is driving frictionless outcomes by enabling secure and scalable research with Research Gateway across various use cases. By making it possible to set up and run research workloads seamlessly in just 30 minutes, with self-service access and cost control, the solution enables the creation of internal virtual labs, accelerates complex genomic workloads, and meets the needs of Secure Research Environments with Ingress/Egress controls.

To learn more about virtual Cloud Analytics training labs and launching Genomics Research in less than 30 minutes, explore the solution at https://research.rlcatalyst.com or write to marketing@relevancelab.com


Relevance Lab is proud to announce that we have recently achieved the Advanced Tier status on AWS (Amazon Web Services). This achievement demonstrates our commitment to providing exceptional cloud solutions and services to our clients.

As an AWS Advanced Tier Partner, Relevance Lab has demonstrated a high level of proficiency and expertise in AWS cloud technologies. This recognition acknowledges our ability to deliver innovative and scalable solutions, optimize cloud deployments, and effectively manage cloud environments.

By attaining the Advanced Tier, Relevance Lab is better positioned to meet the evolving needs of our clients and provide them with the highest level of support. We have access to advanced training and resources from AWS, enabling us to stay current with the latest industry trends and best practices.

Our team of certified AWS professionals is equipped to help organizations design, deploy, and manage their cloud infrastructure with the utmost efficiency and security. We can assist with migrating workloads to the AWS cloud, implementing cloud-native architectures, optimizing cost and performance, and ensuring compliance with industry standards.

At Relevance Lab, we understand the critical role that the cloud plays in enabling digital transformation and driving business growth. Achieving the Advanced Tier on AWS reinforces our commitment to helping our clients leverage the full potential of cloud technology.

The attainment of Advanced Tier status highlights Relevance Lab’s commitment to excellence, technical proficiency, customer success, and adherence to industry best practices in cloud computing. This achievement further strengthens the company’s position as a trusted partner for organizations seeking advanced cloud solutions on the AWS platform.

If you have any questions or need further assistance with AWS or any other IT-related matters, please feel free to reach out to our support team at marketing@relevancelab.com

Relevance Lab is proud to announce that we have recently achieved the Advanced Tier Services Partner status on AWS (Amazon Web Services). This achievement demonstrates our commitment to providing exceptional cloud solutions and services to our clients through our unique platform-driven model of ServiceOne, Governance360, AppInsights, and RLCatalyst. With this new maturity, we are now a specialist AWS partner on both the Services and Software tracks, uniquely positioned to help customers adopt Cloud “The Right Way”.


Relevance Lab and AWS HPC teams are empowering researchers to run HPC workloads with Research Gateway. It helps research computing teams easily provision a secure, performant, and scalable high-performance computing (HPC) environment on AWS with cost and governance controls. This solution is now highlighted on the AWS Partner Network Blog as a joint offering.


2023 Blog, Blog, DevOps Blog, Featured

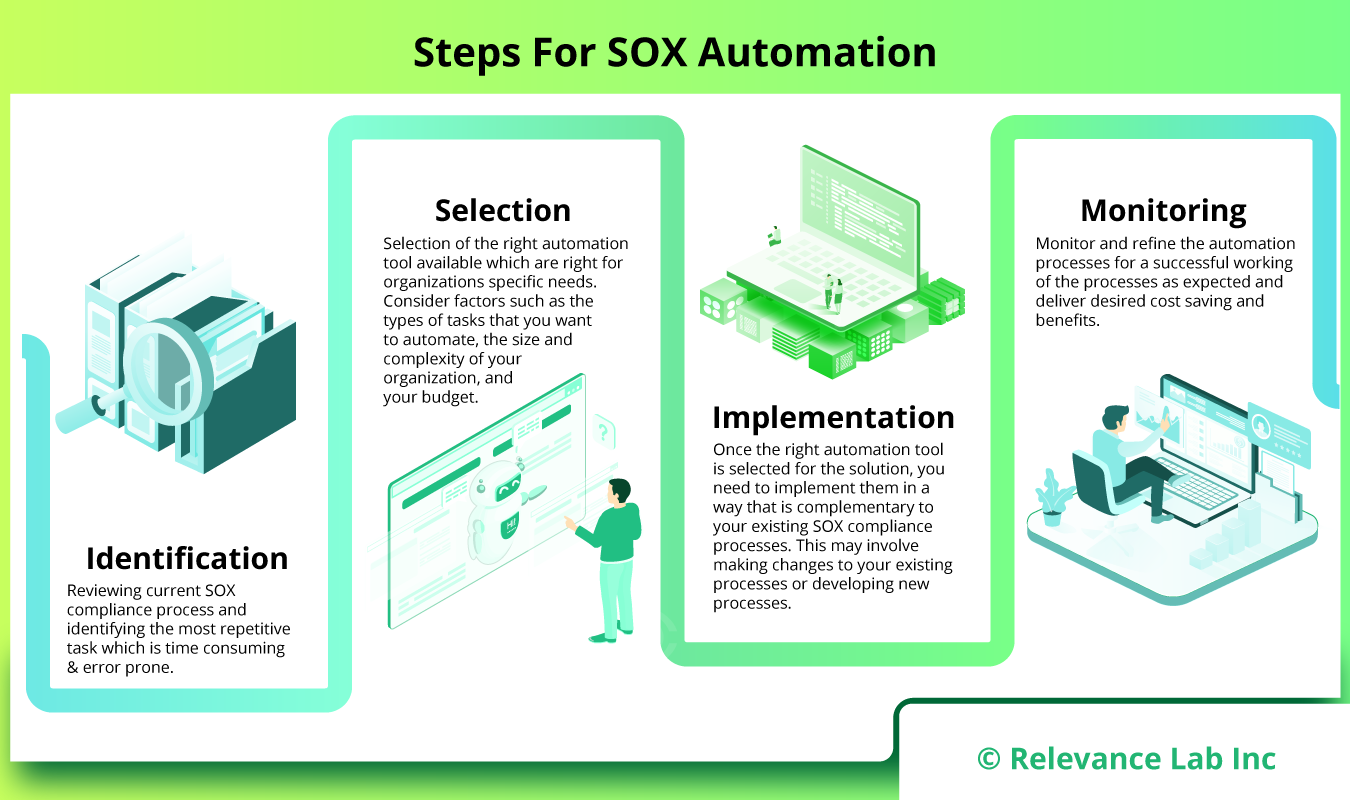

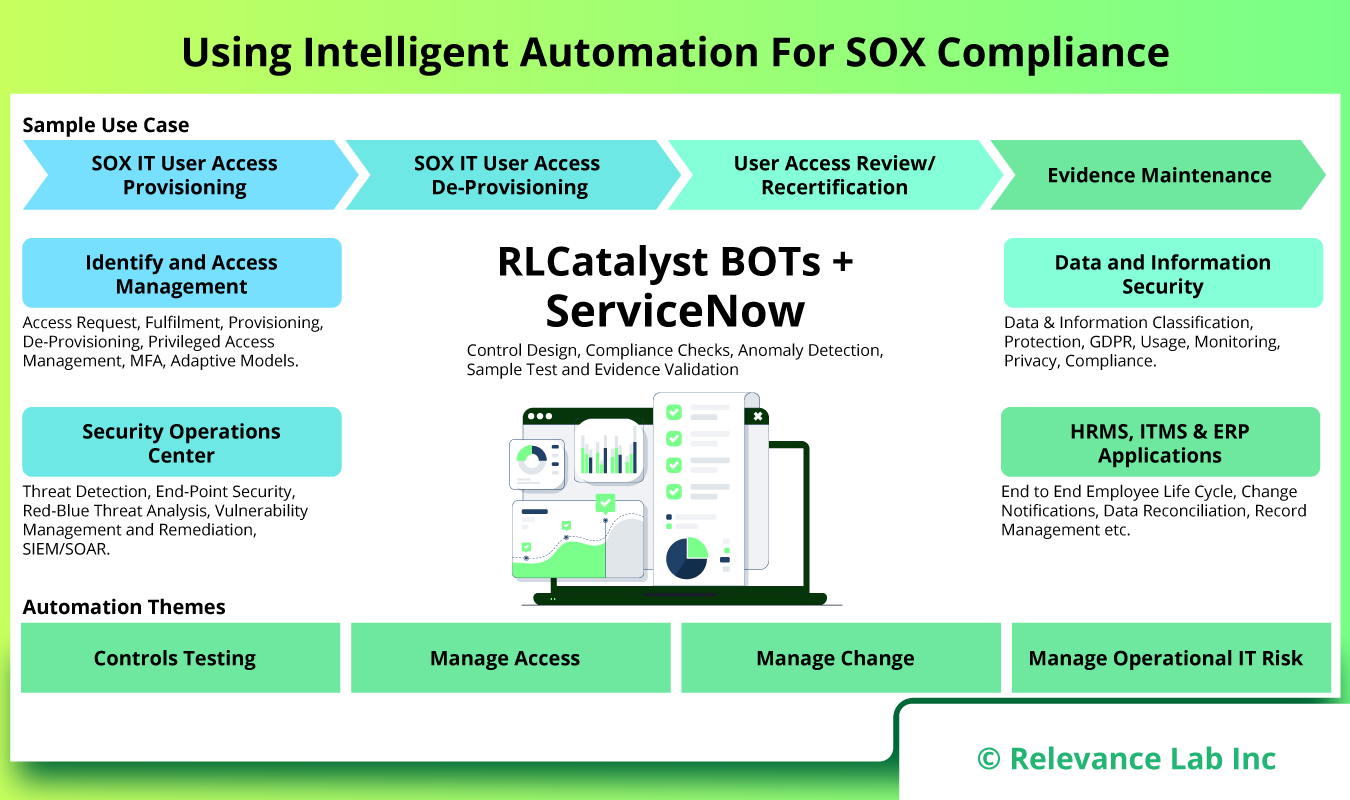

In today’s complex regulatory landscape, organizations across industries are required to comply with various regulations, including the Sarbanes-Oxley Act (SOX). SOX compliance ensures accountability and transparency in financial reporting, protecting investors and the integrity of the financial markets. However, manual compliance processes can be time-consuming, error-prone, and costly.

Relevance Lab’s RLCatalyst and RPA solutions provide a comprehensive suite of automation capabilities that can streamline and simplify the SOX compliance process. Organizations can achieve better quality, velocity, and ROI tracking, while saving significant time and effort.

SOX Compliance Dependencies on User Onboarding & Offboarding

With many employees working from home or remote locations, managing resources and time has become more challenging. In user provisioning specifically, a key risk is unauthorized access to systems by individual users beyond what their roles or responsibilities require.

Most organizations follow a defined user provisioning process, starting with a user access request that includes relevant details such as:

- Username

- User Type

- Application

- Roles

The request then needs line manager approval and application owner approval, after which, based on the policy requirements, IT grants access. Several organizations still follow this process manually, which creates a security risk.

In such situations, automation plays an important role. It reduces manual work, labor costs, and dependency on individual resources, and improves time management. An automation process built with proper design, tools, and security reduces the risk of material misstatement, unauthorized access, and fraudulent activity. ServiceNow has also helped with tracking and archiving the evidence (evidence repository) essential for compliance. Effective compliance results in better business performance.
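The provisioning process described above (request details, line manager approval, application owner approval, then the grant) maps naturally onto a small automated workflow. The Python sketch below is a hypothetical illustration, not an RLCatalyst or ServiceNow API: `AccessRequest`, `ProvisioningBot`, and the approver names are assumptions. It grants access only when both approvals are present and positive, provisions nothing on rejection, and writes every decision to an audit log as compliance evidence.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Approvals required before access may be granted (hypothetical names).
REQUIRED_APPROVERS = ("line_manager", "application_owner")


@dataclass
class AccessRequest:
    username: str
    user_type: str            # e.g. "employee", "contractor"
    application: str
    roles: list[str]
    approvals: dict[str, bool] = field(default_factory=dict)  # approver -> decision


@dataclass
class ProvisioningBot:
    # (username, application) -> roles currently granted
    grants: dict[tuple[str, str], set[str]] = field(default_factory=dict)
    audit_log: list[str] = field(default_factory=list)

    def _log(self, event: str, req: AccessRequest, detail: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
        self.audit_log.append(f"{stamp} {event} {req.username}@{req.application} {detail}")

    def process(self, req: AccessRequest) -> str:
        """Grant access only when every required approver has approved."""
        missing = [a for a in REQUIRED_APPROVERS if a not in req.approvals]
        if missing:
            self._log("PENDING", req, "awaiting " + ", ".join(missing))
            return "pending"
        if not all(req.approvals[a] for a in REQUIRED_APPROVERS):
            # Rejected: nothing is provisioned, but the decision is still logged.
            self._log("REJECTED", req, "no access granted")
            return "rejected"
        self.grants.setdefault((req.username, req.application), set()).update(req.roles)
        self._log("GRANTED", req, ",".join(sorted(req.roles)))
        return "granted"
```

Keeping the audit log append-only and writing an entry for pending and rejected requests, not just grants, is what lets the log double as SOX evidence.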

RPA Solutions for SOX Compliance

Robotic process automation (RPA) is quickly becoming a requirement in every industry looking to eliminate repetitive, manual work through automation and behavior mimicry. This reduces the company’s use of resources, saves money and time, and improves the accuracy and quality of the work being done. Many businesses are not yet taking advantage of RPA in the IT compliance process due to barriers such as lack of knowledge, the absence of a standardized methodology, or a habit of carrying out these operations conventionally.

The key areas to focus on are:

- Standardization of Process: Even though every organization is different and uses different technologies and processes, there are opportunities to standardize SOX compliance techniques, frameworks, controls, and processes. Around 30% of the environment in a typical organization may be deemed high-risk, while the remaining 70% is medium- to low-risk. To improve the efficiency of the compliance process, a large portion of the paperwork, testing, and reporting for that 70% can be standardized. This makes it possible to concentrate more resources on high-risk areas.

- Automation & Analytics: Opportunities to add robotic process automation (RPA), continuous control monitoring, analytics, and other technology grow as compliance processes become more mainstream. These prospective SOX automation technologies not only have the potential to increase productivity and save costs, but they also offer a new viewpoint on the compliance process by allowing businesses to gain insights from the data.

How Can Automation Reduce Compliance Costs?

- Shortening the duration and effort needed to complete SOX compliance requirements: Many of the time-consuming and repetitive SOX compliance procedures, including data collection, reconciliation, and reporting, can be automated. This can free up your team to focus on more strategic and value-added activities.

- Enhancing the precision and completeness of data related to SOX compliance: Automation can aid in enhancing the precision and thoroughness of SOX compliance data by lowering the possibility of human error. Automation can also aid in ensuring that information regarding SOX compliance is gathered and examined in a timely and consistent manner.

- Recognizing and addressing SOX compliance concerns faster: By giving you access to real-time information about your organization’s controls and procedures, automation can help you detect and address SOX compliance concerns more rapidly. By doing this, you can prevent expensive and disruptive compliance failures.

Automating SOX Compliance using RLCatalyst:

Relevance Lab’s RLCatalyst platform provides a comprehensive suite of automation capabilities that can streamline and simplify the SOX compliance process. By leveraging RLCatalyst, organizations can achieve better quality, velocity, and ROI tracking, while saving significant time and effort.

- Continuous Monitoring: RLCatalyst enables continuous monitoring of controls, ensuring that any deviations or non-compliance issues are identified in real-time. This proactive approach helps organizations stay ahead of potential compliance risks and take immediate corrective actions.

- Documentation and Evidence Management: RLCatalyst’s automation capabilities facilitate the seamless documentation and management of evidence required for SOX compliance. This includes capturing screenshots, logs, and other relevant data, ensuring a clear audit trail for compliance purposes.

- Workflow Automation: RLCatalyst’s workflow automation capabilities enable organizations to automate and streamline the entire compliance process, from control testing to remediation. This eliminates manual errors and ensures consistent adherence to compliance requirements.

- Reporting and Analytics: RLCatalyst provides powerful reporting and analytics features that enable organizations to gain valuable insights into their compliance status. Customizable dashboards, real-time analytics, and automated reporting help stakeholders make data-driven decisions and meet compliance obligations more effectively.

Example – User Access Management

| Risk | Control | Manual | Automation |
|---|---|---|---|
| Unauthorized users are granted access to applicable logical access layers. Key financial data/programs are intentionally or unintentionally modified. | New and modified user access to the software is approved by authorized approvers as per the company IT policy. All access is appropriately provisioned. | Access to the system is provided manually by the IT team based on the approval given as per the IT policy and the roles and responsibilities requested. The SOD (Segregation of Duties) check is performed manually by Process Owners/Application Owners as per the IT policy. | Access to the system is provided automatically by an auto-provisioning script designed as per the company IT policy. The BOT checks for SOD role conflicts and provides the information to Process Owners/Application Owners as per the policy. If the approver rejects the request, the BOT grants the user no access to the system, and audit logs are maintained for compliance purposes. |
| Unauthorized users are granted privileged rights. Key financial data/programs are intentionally or unintentionally modified. | Privileged access to the software, including administrator and superuser accounts, is appropriately restricted. | Access to the system is provided manually by the IT team based on the given approval as per the IT policy. Process Owners/Application Owners manually validate and approve restricted access to the system as per the company IT policy. | Access to the system is provided automatically by an auto-provisioning script designed as per the company IT policy. If the approver rejects the request, the BOT grants the user no access to the system, and audit logs are maintained for compliance purposes. The BOT can limit the number and duration of access grants based on its configuration. |
| Unauthorized users are granted access to applicable logical access layers. Key financial data/programs are intentionally or unintentionally modified. | Access requests to the application are properly reviewed and authorized by management. | User access reports must be extracted manually for access review, using tools or with the help of IT. Review comments must be sent to IT for de-provisioning of access. | The BOT can help the reviewer extract the system-generated user report. The BOT can compare the active user listing with the HR termination listing to identify terminated users. The BOT can be configured to de-provision access for users identified as unauthorized in the review report. |
| Unauthorized users retain access to applicable logical access layers if it is not removed in a timely manner. | Terminated users’ application access rights are removed on a timely basis. | System access is deactivated manually by the IT team based on the approval provided as per the IT policy. | System access can be deactivated by an auto-provisioning script designed as per the company IT policy. The BOT can be configured to check the user’s termination date and deactivate system access if SSO is enabled, or to deactivate user access based on approval. |
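The first two rows of the table have the BOT check for SOD role conflicts and restrict privileged access by count and time. A minimal Python sketch of those checks follows, assuming a hypothetical rule set of conflicting role pairs, a hypothetical cap on admin accounts per application, and a hypothetical maximum duration for a privileged grant; real rules come from each company's IT policy.

```python
from datetime import datetime, timedelta

# Hypothetical SOD rules: role pairs one person must not hold together.
SOD_RULES = [
    frozenset({"create_vendor", "approve_payment"}),
    frozenset({"post_journal", "approve_journal"}),
]
MAX_ADMINS = 3                           # hypothetical cap per application
MAX_ADMIN_DURATION = timedelta(hours=8)  # hypothetical time limit per grant


def sod_conflicts(current: set[str], requested: set[str]) -> list[tuple[str, ...]]:
    """Conflicting role pairs the user would hold if the request were granted."""
    combined = current | requested
    return sorted(tuple(sorted(rule)) for rule in SOD_RULES if rule <= combined)


def can_grant_admin(current_admins: set[str], username: str) -> bool:
    """Allow a privileged grant only while the application is under its cap."""
    return username in current_admins or len(current_admins) < MAX_ADMINS


def admin_grant_expired(granted_at: datetime, now: datetime) -> bool:
    """True once a privileged grant has reached its maximum duration."""
    return now - granted_at >= MAX_ADMIN_DURATION
```

In line with the table, a non-empty `sod_conflicts` result would be routed to the Process Owner or Application Owner for a decision rather than silently rejected.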

The table compares the manual and automated approaches in detail. Automation can deliver 40-50% gains in cost, reliability, and efficiency.
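The access review and termination rows of the table describe the BOT comparing the active user listing with the HR termination listing and de-provisioning the matches. A minimal Python sketch of that reconciliation follows, assuming usernames are identical in both listings (in practice they often need mapping through an employee ID); the function names are hypothetical.

```python
from datetime import date


def terminated_but_active(active_users: set[str], terminations: dict[str, date],
                          today: date) -> list[str]:
    """Active accounts whose HR termination date is on or before today."""
    return sorted(u for u in active_users
                  if u in terminations and terminations[u] <= today)


def deprovision(active_users: set[str], users: list[str], audit_log: list[str]) -> None:
    """Remove access for the given users and record each action for auditors."""
    for user in users:
        active_users.discard(user)
        audit_log.append(f"DEPROVISIONED {user}")
```

Users with a future termination date are left alone until that date, which matches the "timely removal" control without cutting access early.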

Conclusion

SOX compliance is a critical aspect of ensuring the integrity and transparency of financial reporting. By leveraging automation using RLCatalyst and RPA solutions from Relevance Lab, organizations can streamline their SOX compliance processes, reduce manual effort, and mitigate compliance risks. The combination of RLCatalyst’s automation capabilities and RPA solutions provides a comprehensive approach to achieving SOX compliance more efficiently and cost-effectively. This blog was created with the assistance of our own GenAI Bot.

For more details or enquiries, please write to marketing@relevancelab.com


SOX Compliance is an essential part of the governance process in large enterprises. By leveraging new technology, processes, and tools, enterprises can now achieve 40-50% Intelligent Automation of SOX Compliance activities.

As enterprises start adopting GenAI concepts for internal use cases, the primary concern is using “AI The Right Way”, which depends on adopting the right architecture with proper guardrails. Working with early adopters, Relevance Lab has leveraged the AI Compass Framework to build such solutions using Azure OpenAI and Responsible AI best practices.