
In today’s complex regulatory landscape, organizations across industries are required to comply with various regulations, including the Sarbanes-Oxley Act (SOX). SOX compliance ensures accountability and transparency in financial reporting, protecting investors and the integrity of the financial markets. However, manual compliance processes can be time-consuming, error-prone, and costly.

Relevance Lab’s RLCatalyst and RPA solutions provide a comprehensive suite of automation capabilities that can streamline and simplify the SOX compliance process. Organizations can achieve better quality, velocity, and ROI tracking, while saving significant time and effort.

SOX Compliance Dependencies on User Onboarding & Offboarding

With many employees now working from home or from remote locations, managing resources and time has become more challenging. For user provisioning in particular, the key risk is unauthorized access: individual users may hold system access that does not match their roles or responsibilities, and such access must be identified.

Most organizations follow a defined process for user provisioning. A user access request is sent with relevant details, including:

- Username

- User Type

- Application

- Roles

The request then goes through the approvals required by policy:

- Line manager approval

- Application owner approval

Finally, IT grants the access. Many organizations still follow this process manually, which creates a security risk.
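
The request fields and approval steps above can be sketched in a few lines of Python. This is a minimal illustration, not any product's actual implementation: the `AccessRequest` class, the `can_grant` function, and the approver keys `line_manager` and `application_owner` are hypothetical names chosen for this example, and the assumption is that policy requires both approvals before access is granted.

```python
from dataclasses import dataclass, field

# Assumption: policy requires both of these approvals before IT grants access.
REQUIRED_APPROVALS = ("line_manager", "application_owner")


@dataclass
class AccessRequest:
    username: str
    user_type: str
    application: str
    roles: list[str]
    # Maps approver -> True (approved) or False (rejected); a missing key means pending.
    approvals: dict[str, bool] = field(default_factory=dict)


def can_grant(request: AccessRequest) -> bool:
    """Return True only when every required approver has explicitly approved."""
    return all(request.approvals.get(approver) is True for approver in REQUIRED_APPROVALS)


request = AccessRequest("jdoe", "employee", "ERP", ["AP_CLERK"])
request.approvals["line_manager"] = True
print(can_grant(request))  # False: application owner approval is still pending

request.approvals["application_owner"] = True
print(can_grant(request))  # True: both required approvals are recorded
```

Treating a missing approval the same as a rejection keeps the default safe: access is never granted on an incomplete request.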

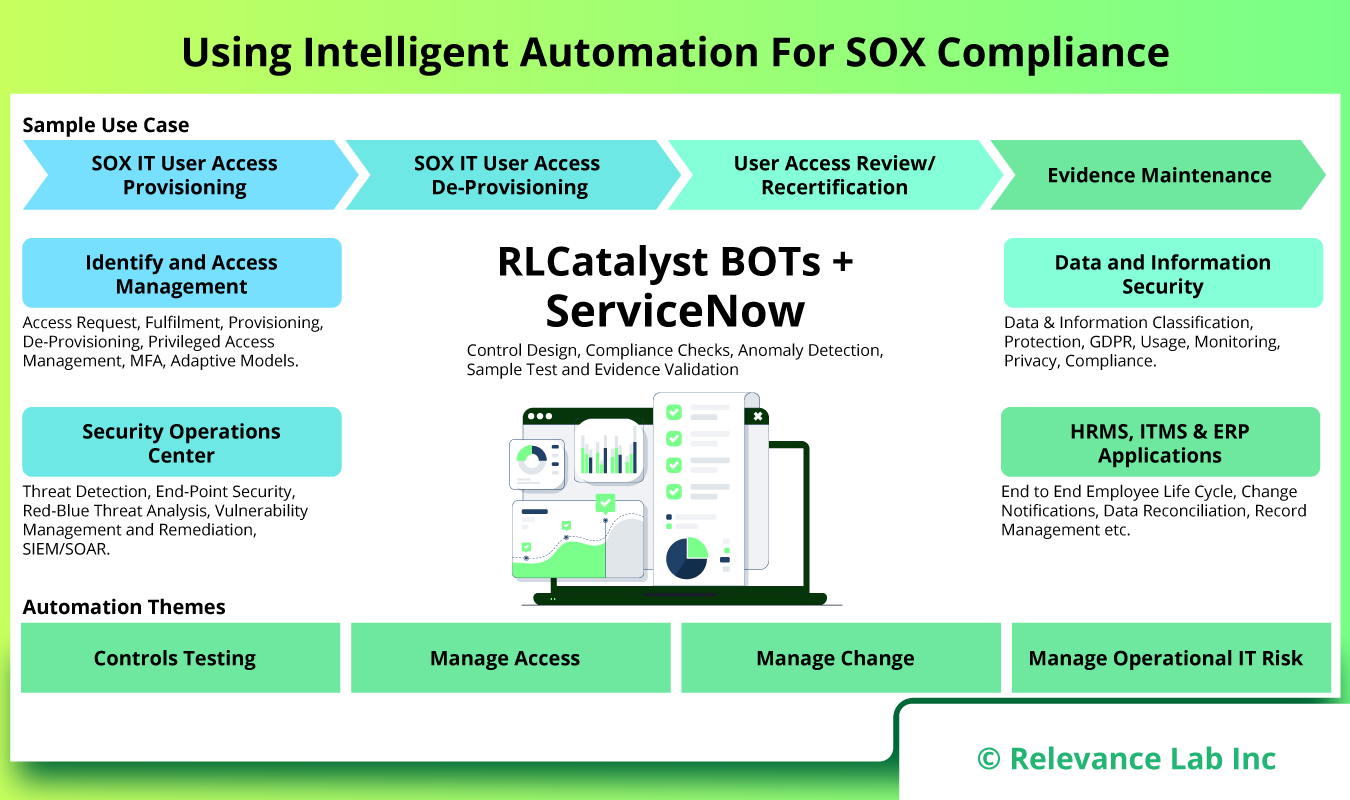

In such a situation, automation plays an important role. It reduces manual work, labor cost, and dependence on individual resources, and it improves time management. An automated process built with proper design, tools, and security reduces the risk of material misstatement, unauthorized access, and fraudulent activity. Using ServiceNow also helps with tracking and archiving evidence (an evidence repository), which is essential for compliance. Effective compliance results in better business performance.

RPA Solutions for SOX Compliance

Robotic process automation (RPA) is quickly becoming a requirement in every industry looking to eliminate repetitive, manual work through automation and behavior mimicry. It reduces a company’s use of resources, saves money and time, and improves the accuracy and quality of the work being done. Many businesses are not yet taking advantage of RPA in the IT compliance process because of barriers such as lack of knowledge, the absence of a standardized methodology, or a habit of carrying out these operations in the conventional manner.

Below are the areas which we need to focus on:

- Standardization of Process: Every organization is different and uses different technology and processes, but there are still opportunities to standardize SOX compliance techniques, frameworks, controls, and processes. Around 30% of the environment in a typical organization may be deemed high-risk, whereas the remaining 70% is medium- to low-risk. To improve the efficiency of the compliance process, a large portion of the paperwork, testing, and reporting related to that 70% can be standardized. This makes it possible to concentrate more resources on high-risk areas.

- Automation & Analytics: Opportunities to add robotic process automation (RPA), continuous control monitoring, analytics, and other technology grow as compliance processes become more mainstream. These prospective SOX automation technologies not only have the potential to increase productivity and save costs, but they also offer a new viewpoint on the compliance process by allowing businesses to gain insights from the data.

How Can Automation Reduce Compliance Costs?

- Shortening the duration and effort needed to complete SOX compliance requirements: Many of the time-consuming and repetitive SOX compliance procedures, including data collection, reconciliation, and reporting, can be automated. This can free up your team to focus on more strategic and value-added activities.

- Enhancing the precision and completeness of data related to SOX compliance: By lowering the possibility of human error, automation improves the accuracy and completeness of SOX compliance data. It also helps ensure that this information is gathered and examined in a timely and consistent manner.

- Recognizing and addressing SOX compliance concerns faster: By giving you access to real-time information about your organization’s controls and procedures, automation can help you detect and address SOX compliance concerns more rapidly. By doing this, you can prevent expensive and disruptive compliance failures.

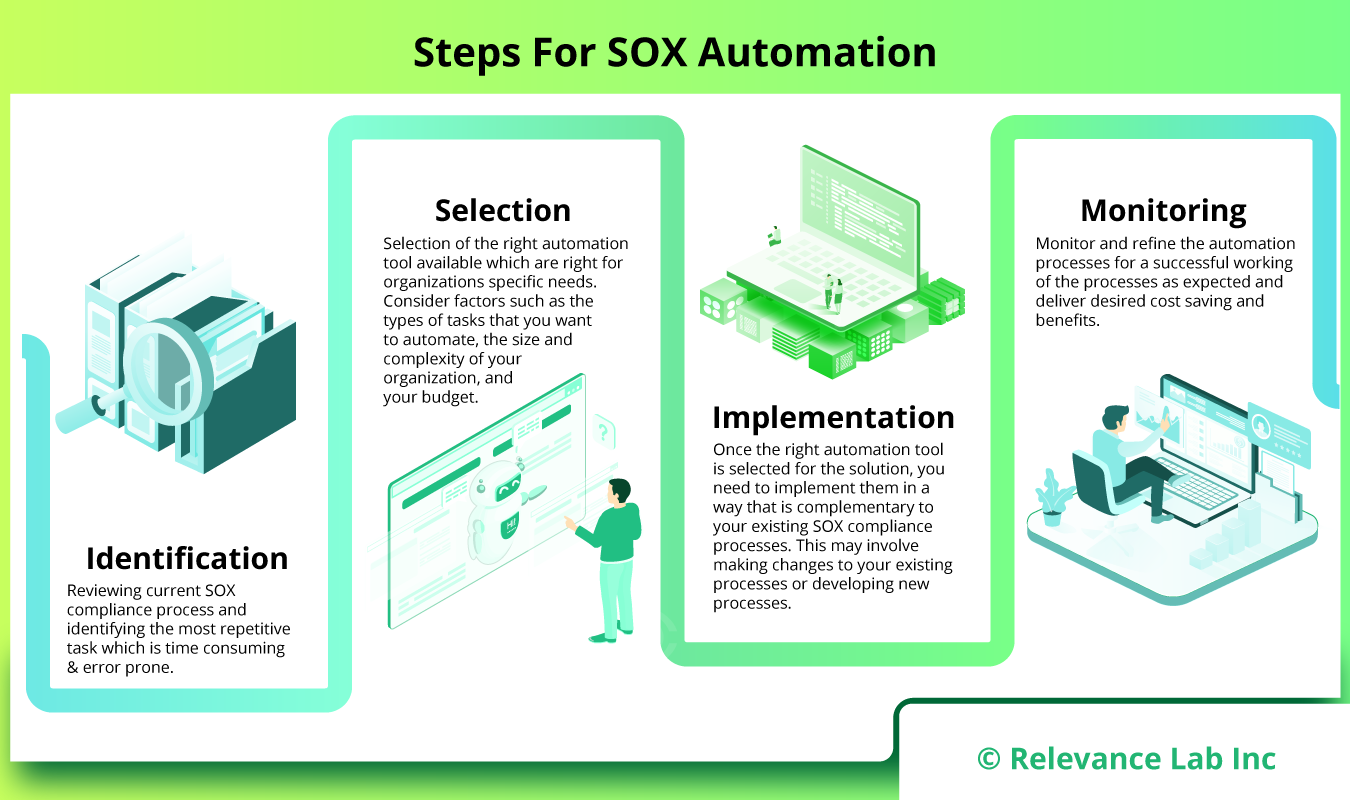

Automating SOX Compliance using RLCatalyst:

Relevance Lab’s RLCatalyst platform provides a comprehensive suite of automation capabilities that can streamline and simplify the SOX compliance process. By leveraging RLCatalyst, organizations can achieve better quality, velocity, and ROI tracking, while saving significant time and effort.

- Continuous Monitoring: RLCatalyst enables continuous monitoring of controls, ensuring that any deviations or non-compliance issues are identified in real-time. This proactive approach helps organizations stay ahead of potential compliance risks and take immediate corrective actions.

- Documentation and Evidence Management: RLCatalyst’s automation capabilities facilitate the seamless documentation and management of evidence required for SOX compliance. This includes capturing screenshots, logs, and other relevant data, ensuring a clear audit trail for compliance purposes.

- Workflow Automation: RLCatalyst’s workflow automation capabilities enable organizations to automate and streamline the entire compliance process, from control testing to remediation. This eliminates manual errors and ensures consistent adherence to compliance requirements.

- Reporting and Analytics: RLCatalyst provides powerful reporting and analytics features that enable organizations to gain valuable insights into their compliance status. Customizable dashboards, real-time analytics, and automated reporting help stakeholders make data-driven decisions and meet compliance obligations more effectively.
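
To make the ideas of continuous monitoring and evidence management more concrete, here is a generic Python sketch. It does not use or represent the RLCatalyst API; the `evaluate_control` function, the control ID `UAM-02`, and the rule "no more than two administrator accounts" are all hypothetical, chosen only to show how a control check can produce a timestamped evidence record.

```python
import json
from datetime import datetime, timezone


def evaluate_control(control_id, check, observed):
    """Run one control check and return an evidence record for the audit trail."""
    return {
        "control_id": control_id,
        "checked_at": datetime.now(timezone.utc).isoformat(),
        "observed": observed,
        "compliant": bool(check(observed)),
    }


# Hypothetical control: no more than two administrator accounts on the application.
def admin_count_ok(observed):
    return observed["admin_accounts"] <= 2


# Each scheduled run appends a record, whether or not the control passed.
evidence_log = [
    evaluate_control("UAM-02", admin_count_ok, {"admin_accounts": 2}),
    evaluate_control("UAM-02", admin_count_ok, {"admin_accounts": 5}),
]

# Deviations are the records a reviewer would need to act on.
deviations = [record for record in evidence_log if not record["compliant"]]
print(json.dumps(deviations, indent=2))
```

Recording passing checks as well as failures matters for audits: the evidence log then shows that the control was operating throughout the period, not only when it failed.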

Example – User Access Management

| Risk | Control | Manual | Automation |
| --- | --- | --- | --- |
| Unauthorized users are granted access to applicable logical access layers. Key financial data/programs are intentionally or unintentionally modified. | New and modified user access to the software is approved by an authorized approver as per the company IT policy. All access is appropriately provisioned. | Access to the system is provided manually by the IT team based on the approval given as per the IT policy and the roles and responsibilities requested. The SoD (Segregation of Duties) check is performed manually by Process Owners/Application Owners as per the IT policy. | Access to the system is provided automatically by an auto-provisioning script designed as per the company IT policy. The bot checks for SoD role conflicts and provides the information to the Process Owners/Application Owners as per the policy. If the approver rejects the request, the bot provides no access to the user in the system, and audit logs are maintained for compliance purposes. |
| Unauthorized users are granted privileged rights. Key financial data/programs are intentionally or unintentionally modified. | Privileged access to the software, including administrator and superuser accounts, is appropriately restricted. | Access to the system is provided manually by the IT team based on the approval given as per the IT policy. Process Owners/Application Owners manually validate and approve restricted access to the system as per the company IT policy. | Access to the system is provided automatically by an auto-provisioning script designed as per the company IT policy. If the approver rejects the request, the bot provides no access to the user in the system, and audit logs are maintained for compliance purposes. The bot can limit the count and duration of access to the system based on its configuration. |
| Unauthorized users are granted access to applicable logical access layers. Key financial data/programs are intentionally or unintentionally modified. | Access requests to the application are properly reviewed and authorized by management. | User access reports need to be extracted manually for access review, using tools or with the help of IT. Review comments need to be provided to IT for de-provisioning of access. | The bot can help the reviewer extract the system-generated user access report. The bot can compare the active user listing with the HR termination listing to identify terminated users. The bot can be configured to de-provision the access of users identified as unauthorized in the review report. |
| Unauthorized users are granted access to applicable logical access layers if not removed in a timely manner. | Terminated application user access rights are removed on a timely basis. | System access is deactivated manually by the IT team based on the approval provided as per the IT policy. | System access can be deactivated by an auto-provisioning script designed as per the company IT policy. The bot can be configured to check the termination date of the user and deactivate system access if SSO is enabled. The bot can be configured to deactivate user access to the system based on approval. |

The table provides a detailed comparison of the manual and automated approaches. Automation can deliver gains of 40-50% in cost, reliability, and efficiency.
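
Two of the automated checks in the user access management example, the SoD role conflict check and the comparison of active users against the HR termination listing, can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the actual bot logic: the conflicting role pairs, usernames, and the function names `sod_conflicts` and `users_to_deprovision` are hypothetical.

```python
from datetime import date

# Hypothetical pairs of roles that one user must not hold together.
SOD_CONFLICTS = {
    frozenset({"CREATE_VENDOR", "APPROVE_PAYMENT"}),
    frozenset({"POST_JOURNAL", "APPROVE_JOURNAL"}),
}


def sod_conflicts(existing_roles, requested_roles):
    """Return the conflicting role pairs that granting the request would create."""
    combined = set(existing_roles) | set(requested_roles)
    return sorted(tuple(sorted(pair)) for pair in SOD_CONFLICTS if pair <= combined)


def users_to_deprovision(active_users, hr_terminations, today):
    """Return active users whose HR termination date is today or earlier."""
    return sorted(
        user for user in active_users
        if user in hr_terminations and hr_terminations[user] <= today
    )


print(sod_conflicts(["CREATE_VENDOR"], ["APPROVE_PAYMENT"]))
# [('APPROVE_PAYMENT', 'CREATE_VENDOR')]

active = {"asmith", "jdoe", "mlee"}
terminated = {"jdoe": date(2023, 6, 30), "mlee": date(2023, 12, 31)}
print(users_to_deprovision(active, terminated, today=date(2023, 7, 15)))
# ['jdoe']
```

In line with the table, the conflict list would be passed to the Process Owner or Application Owner for a decision rather than acted on automatically, while the de-provisioning list could feed a deactivation step once approval is recorded.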

Conclusion

SOX compliance is a critical aspect of ensuring the integrity and transparency of financial reporting. By leveraging automation using RLCatalyst and RPA solutions from Relevance Lab, organizations can streamline their SOX compliance processes, reduce manual effort, and mitigate compliance risks. The combination of RLCatalyst’s automation capabilities and RPA solutions provides a comprehensive approach to achieving SOX compliance more efficiently and cost-effectively. This blog was created with assistance from our own GenAI Bot.

For more details or enquiries, please write to marketing@relevancelab.com
