
As an organization, your success depends on how you manage the customer experience, whether the customer is internal or external. The level of support and the turnaround time for incident resolution play a pivotal role in whether the customer is delighted or extremely dissatisfied. Most technology firms have a defined Incident Management System that helps them track, monitor and resolve incidents as best they can.

Typically, L1 and L2 are the primary and secondary lines of support. They receive requests and complaints about technical difficulties through channels such as phone, web, email or chat. Organizations need a scalable, reliable and agile system that can manage incidents without loss of time, so that normal business operations are not impacted. Even though we’re in the age of Artificial Intelligence and other technological innovations, many organizations continue to rely on manual interventions, which are a drain on time, effort and effectiveness.

Automation of Incident Management – perhaps the only way forward

Here are six good reasons to automate Incident Management:

a) High Productivity, Low Costs – when automation reduces manual intervention in repeatable tasks, it frees up staff to focus on more strategic and value-added business tasks. It also brings costs down, as fewer valuable man-hours are spent on tasks that are now automated.

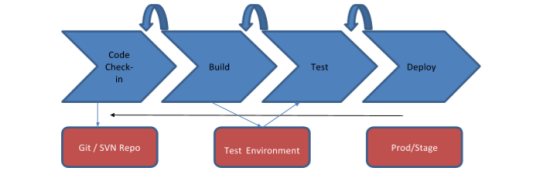

b) Faster Resolution – the entire process is streamlined: incidents are captured through self-service portals or other reporting mechanisms such as email, phone and chat. Automation allows for better prioritization and routing to the appropriate resolution group, resulting in faster incident resolution.

c) Minimize Revenue Losses – if service disruptions are not looked into immediately, businesses stand to lose revenue and damage their reputation. Service delivery automation helps minimize the negative impact of these disruptions and raises customer satisfaction by restoring services quickly, without affecting business stability.

d) Better Collaboration – automation leads to better collaboration between business functions. With a bi-directional communication flow, detection, diagnosis, repair and recovery are achieved faster. Incident management becomes easier and quicker, and performance improves, because tasks are clearly defined and increased communication leads to more collaboration.

e) Effective Planning & Prioritization – incidents can be prioritized based on the severity of the issue and can be automatically assigned or escalated, with complete information, to the appropriate task force. This helps in better scheduling and planning of overall incident management, leading to more efficient and effective handling of issues.

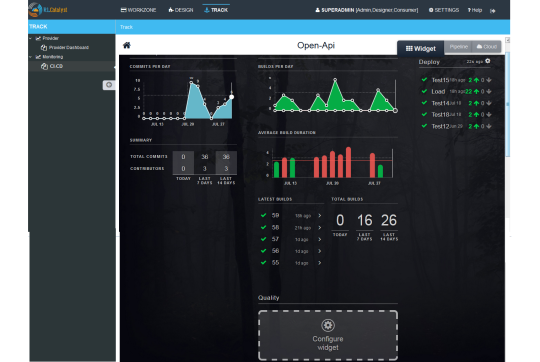

f) Incident Analysis for Overall Improvement of Infrastructure – with an analytics dashboard that you can call up periodically, you can assess problem areas based on frequency, time, function, etc. This can lead to better-planned expenditure on future infrastructure investments.
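To make the ideas of prioritization, routing and incident analysis a little more concrete, here is a minimal Python sketch. It is not taken from any particular product: the severity levels, category names, resolution groups and the `triage` and `frequent_categories` helpers are all hypothetical, chosen only for illustration. It assumes each incident arrives with a category and a severity already attached.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical severity levels and resolution groups, for illustration only.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}
ROUTING = {
    "network": "network-ops",
    "database": "dba-team",
    "access": "service-desk",
}
DEFAULT_GROUP = "l1-support"  # fallback when no routing rule matches


@dataclass
class Incident:
    ticket_id: str
    category: str
    severity: str
    channel: str  # e.g. "phone", "web", "email", "chat"


def triage(incidents):
    """Return (incident, group, escalate) tuples, most severe first.

    sorted() is stable, so incidents of equal severity keep arrival order.
    """
    ordered = sorted(incidents, key=lambda inc: SEVERITY_RANK[inc.severity])
    result = []
    for inc in ordered:
        group = ROUTING.get(inc.category, DEFAULT_GROUP)
        escalate = inc.severity == "critical"  # assumed escalation rule
        result.append((inc, group, escalate))
    return result


def frequent_categories(incidents, top=3):
    """Count incidents per category, the kind of figure a dashboard might show."""
    return Counter(inc.category for inc in incidents).most_common(top)


if __name__ == "__main__":
    queue = [
        Incident("T1", "access", "low", "email"),
        Incident("T2", "network", "critical", "phone"),
        Incident("T3", "access", "medium", "chat"),
        Incident("T4", "printer", "high", "web"),
    ]
    for inc, group, escalate in triage(queue):
        print(inc.ticket_id, inc.severity, group, "ESCALATE" if escalate else "")
    print(frequent_categories(queue, top=1))
```

In a real deployment the routing table and escalation rule would come from the organization's own service catalog and SLAs rather than hard-coded dictionaries; the point here is only that once severity and category are captured consistently, sorting, assignment and frequency reporting become simple, repeatable steps.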

Service delivery automation is the practical and sensible approach for organizations that want to reduce human error, cut the valuable man-hours spent on repetitive tasks and significantly improve the quality of delivery.

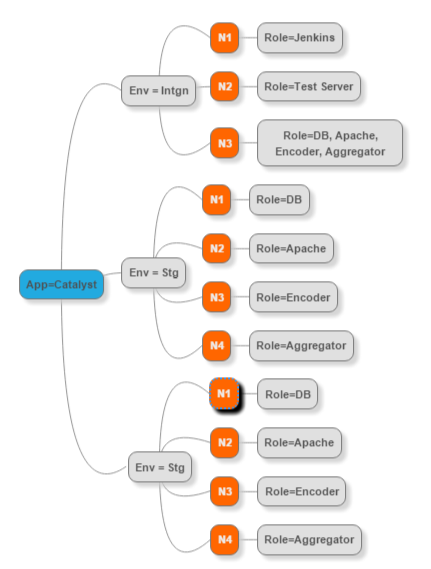

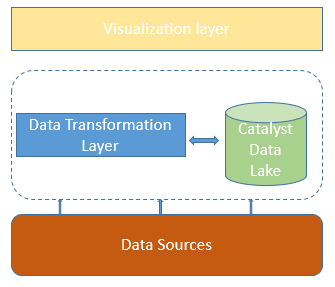

RLCatalyst is integrated with ServiceNow and helps automate ticket resolution through its powerful BOTs framework. With RLCatalyst you can automate the provisioning of your infrastructure, keep a catalog of common software stacks, choose what to use based on what’s available and improve the overall quality of service delivery. RLCatalyst is intelligent software that can study discernible patterns and identify tickets frequently logged by users, thus enabling faster incident resolution.

RLCatalyst comes with a library of over 200 BOTs that you can customize based on your needs and requirements. With RLCatalyst you get the dual benefit of DevOps automation and service delivery automation, taking your organization to the next level of efficiency and productivity.