2018 Blogs, Blog

Globally, organizations have embraced cloud computing and its delivery models for their numerous advantages. Gartner predicted that the public cloud market would grow 21.4 percent in 2018, from $153.5 billion in 2017. Cloud computing services let organizations consume specific services through the delivery models most appropriate for them. They help increase business velocity and reduce capital expenditure by converting it into operating expenditure.

Capex to Opex Structure

Capital expenditure refers to the money spent on purchasing hardware and on building and managing in-house IT infrastructure. With cloud computing, organizations can access storage and network infrastructure from a provider's data center without maintaining any in-house infrastructure. Cloud service providers also supply the required hardware and provision resources according to business requirements, so resources can be consumed as and when they are needed. Cloud computing also offers flexibility and scalability in line with business demand.

All these factors help organizations move from a fixed cost structure based on capital expenditure to a variable cost structure based on operating expenditure.
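To make the fixed-versus-variable distinction concrete, here is a minimal sketch with made-up numbers. The function names, the prices and the usage figures are all hypothetical, and spreading the hardware purchase evenly over its useful life (straight-line amortization) is our own simplifying assumption, not something the article specifies.

```python
def fixed_monthly_cost(capex: float, lifetime_months: int, monthly_upkeep: float) -> float:
    """Capex model (assumed straight-line): an up-front purchase spread evenly
    over its useful life, plus in-house running costs. Independent of usage."""
    return capex / lifetime_months + monthly_upkeep

def variable_monthly_cost(units_used: float, price_per_unit: float) -> float:
    """Opex model: pay-as-you-go, so the bill tracks actual consumption."""
    return units_used * price_per_unit

# Hypothetical usage (e.g. compute-hours) over three months and hypothetical prices.
monthly_usage = [200, 800, 350]
fixed = [fixed_monthly_cost(36_000, 36, 500) for _ in monthly_usage]
variable = [variable_monthly_cost(units, 2.0) for units in monthly_usage]
print(fixed)     # [1500.0, 1500.0, 1500.0]
print(variable)  # [400.0, 1600.0, 700.0]
```

The fixed line stays flat whatever the demand, while the variable line rises and falls with consumption, which is the shift from a fixed to a variable cost structure described above.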

Cost of Assets and IT Service Management

After moving to a variable cost structure, organizations must examine the components of that cost structure: the cost of assets and the cost of service, that is, the cost of IT service management. The cost of assets drops considerably once the infrastructure or assets move to the cloud. The cost of service, however, remains significant because it depends on day-to-day IT operations and represents the day-after-cloud scenario. The benefits of cloud computing can only be fully realized if the cost of IT service management is also brought down.

Growing ITSM or ITOps Market and High Stakes

While IT service management (ITSM) has taken a new avatar as IT operations management (ITOM), incident management remains the critical IT support process in every organization. The incident response market is expanding rapidly as more enterprises move to the cloud every year. According to MarketsandMarkets, the incident response market is expected to grow from $13.38 billion in 2018 to $33.76 billion by 2023. The key factors driving this market are heavy financial losses after incidents, a rise in security breaches targeting enterprises, and compliance requirements such as the EU's General Data Protection Regulation (GDPR).

Service outages or service degradation can disrupt key business operations. A survey conducted by ITIC indicates that 33 percent of enterprises reported that one hour of downtime could cost them between $1 million and more than $5 million.

Cost per IT Ticket: The Least Common Denominator of Cost of ITSM

Because organizations have high stakes in keeping business services running smoothly, IT ops teams carry the additional responsibility of responding to incidents faster without compromising quality of service. Two key metrics for any incident management process are 1) cost per IT ticket and 2) mean time to resolution (MTTR). Cost per ticket drives overall operating expenditure, while MTTR drives customer satisfaction: the higher the MTTR, the longer tickets take to resolve and the lower customer satisfaction becomes.
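MTTR is the average time between a ticket being opened and being resolved. The sketch below computes it from hypothetical (opened, resolved) timestamp pairs; the function name and the ticket data are illustrative assumptions, not taken from any particular ITSM tool.

```python
from datetime import datetime, timedelta

def mean_time_to_resolution(tickets: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (resolved - opened) across resolved tickets."""
    if not tickets:
        raise ValueError("MTTR needs at least one resolved ticket")
    total = sum((resolved - opened for opened, resolved in tickets), timedelta())
    return total / len(tickets)

# Hypothetical resolved tickets: (opened_at, resolved_at).
tickets = [
    (datetime(2018, 6, 1, 9, 0), datetime(2018, 6, 1, 10, 0)),    # 1 hour
    (datetime(2018, 6, 1, 9, 30), datetime(2018, 6, 1, 12, 30)),  # 3 hours
    (datetime(2018, 6, 2, 14, 0), datetime(2018, 6, 2, 16, 0)),   # 2 hours
]
print(mean_time_to_resolution(tickets))  # 2:00:00
```

In practice the timestamps would come from the service desk's ticket records; only resolved tickets belong in the calculation, since open tickets have no resolution time yet.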

Cost per ticket is the total monthly operating expenditure of the IT ops team (IT service desk) divided by its monthly ticket volume. According to an HDI study, the average cost per service desk ticket in North America is $15.56. Cost per ticket rises as a ticket is escalated to higher support levels: for an L3 ticket, the average cost in North America is about $80 to $100 or more.

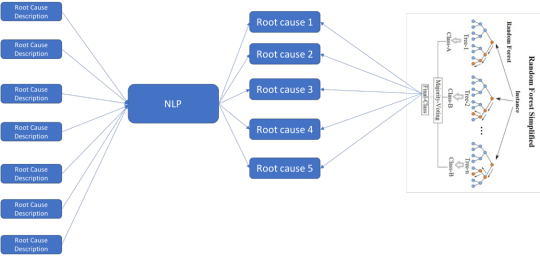
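The cost-per-ticket definition above is a single division. The sketch below applies it per support level and for the desk as a whole; the per-level opex and ticket counts are hypothetical, illustrative numbers only and are not taken from the HDI study.

```python
def cost_per_ticket(monthly_opex: float, monthly_ticket_volume: int) -> float:
    """Total monthly operating expenditure of the service desk / monthly ticket volume."""
    if monthly_ticket_volume <= 0:
        raise ValueError("monthly ticket volume must be positive")
    return monthly_opex / monthly_ticket_volume

# Hypothetical monthly figures per support level: (opex in USD, tickets closed at that level).
levels = {
    "L1": (45_000.0, 3_000),
    "L2": (24_000.0, 800),
    "L3": (18_000.0, 200),
}

# Prints: L1 15.0 / L2 30.0 / L3 90.0 (one per line)
for level, (opex, volume) in levels.items():
    print(level, cost_per_ticket(opex, volume))

# Desk-wide figure: total opex across levels over total ticket volume.
total_opex = sum(opex for opex, _ in levels.values())
total_volume = sum(volume for _, volume in levels.values())
print(cost_per_ticket(total_opex, total_volume))  # 21.75
```

Attributing opex cleanly to each level is a simplification we assume here; an escalated ticket also consumes L1 and L2 effort on its way up, so a fuller model would add the cost of every level a ticket passes through.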

Severity vs. Volume of IT Tickets

From our experience managing cloud IT ops for our clients, we understand that organizations normally look at the volume and severity of IT tickets and target the High Severity and High Volume quadrants to reduce ticket costs. However, we strongly feel that organizations should start their journey with the low-hanging fruit, such as Low Severity tickets, which are repeatable in nature and can be automated using bots.
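One way to find that low-hanging fruit is to place each ticket category in a severity/volume quadrant and pick out the Low Severity, High Volume ones. This is a minimal sketch under stated assumptions: the severity labels treated as "high", the volume threshold, the function names and the ticket log are all hypothetical choices for illustration.

```python
from collections import Counter

# Illustrative assumptions: which severity labels count as "high" and
# how many tickets per period count as "high volume".
HIGH_SEVERITIES = {"critical", "high"}
HIGH_VOLUME_THRESHOLD = 3

def classify(tickets: list[tuple[str, str]]) -> dict[str, tuple[str, str]]:
    """Map each ticket category to its (severity, volume) quadrant."""
    volume = Counter(category for category, _ in tickets)
    high_severity: dict[str, bool] = {}
    for category, severity in tickets:
        # A category is high severity if any of its tickets is.
        high_severity[category] = high_severity.get(category, False) or severity in HIGH_SEVERITIES
    return {
        category: (
            "High Severity" if high_severity[category] else "Low Severity",
            "High Volume" if count >= HIGH_VOLUME_THRESHOLD else "Low Volume",
        )
        for category, count in volume.items()
    }

def automation_candidates(tickets: list[tuple[str, str]]) -> list[str]:
    """Low Severity, High Volume categories: repeatable work that bots could take over."""
    return sorted(
        category
        for category, quadrant in classify(tickets).items()
        if quadrant == ("Low Severity", "High Volume")
    )

# Hypothetical ticket log for one period: (category, severity).
tickets = (
    [("password reset", "low")] * 4
    + [("disk space alert", "medium"), ("disk space alert", "low"), ("disk space alert", "medium")]
    + [("database outage", "critical"), ("vpn access", "low")]
)
print(automation_candidates(tickets))  # ['disk space alert', 'password reset']
```

Classifying a whole category as high severity when any of its tickets is high severity is a deliberately conservative choice, so that a category with occasional critical incidents is not handed to a bot.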

In the next blog, we will elaborate on this approach, which can help organizations measure and reduce the cost of IT tickets.

About the Author: Neeraj Deuskar is the Director and Global Head of Marketing for Relevance Lab (www.relevancelab.com), a DevOps and automation specialist company that makes cloud adoption easy for global enterprises. In his current role, Neeraj formulates and implements the global marketing strategy, with key responsibility for brand and pipeline impact. Previously, Neeraj managed global marketing teams for various IT product and services organizations and handled responsibilities including strategy formulation, product and solutions marketing, demand generation, digital marketing, influencer marketing, thought leadership and branding. Neeraj holds a B.E. in Production Engineering and an MBA in Marketing, both from the University of Mumbai, India.

(This blog was originally published on DevOps.com and can be read here: https://devops.com/cost-per-it-ticket-think-beyond-opex-in-your-cloud-journey/ )