
Software architecture provides a high-level overview of what a software system looks like. At the very minimum, it shows the various logical pieces of the overall solution and the interactions between those pieces. (See the C4 Model for architecture diagramming.) The software architecture is like a map of the terrain for anybody who must deal with the system. Contrary to what many might think, software architecture is important even for non-engineering functions like sales, as many customers like to review the architecture to see how well it fits within their enterprise and whether adopting it could introduce issues in the future.

Goals of the Architecture

It is important to determine the goals for the system when deciding on the architecture. This should include both short-term and long-term goals.

Some of our important goals for RLCatalyst Research Gateway are:

1. Ease of Use

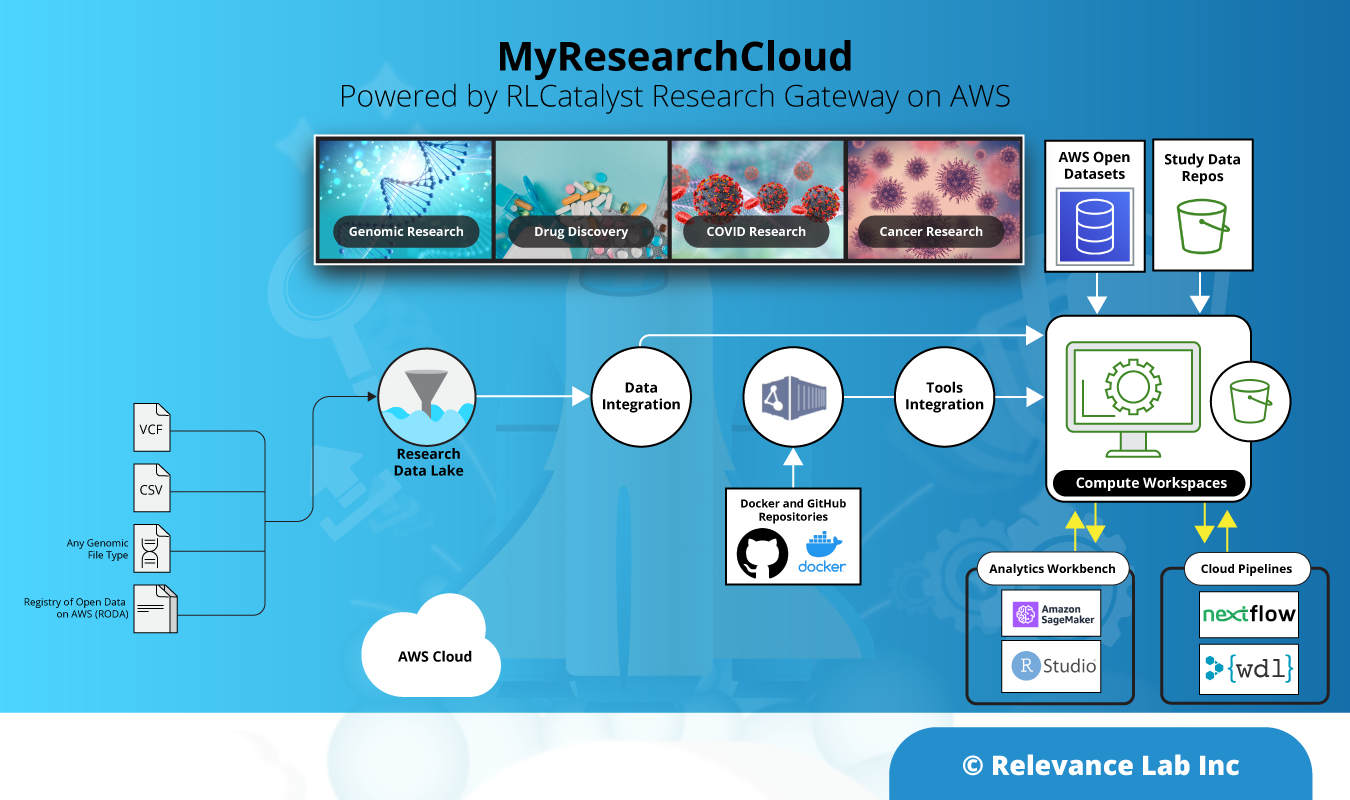

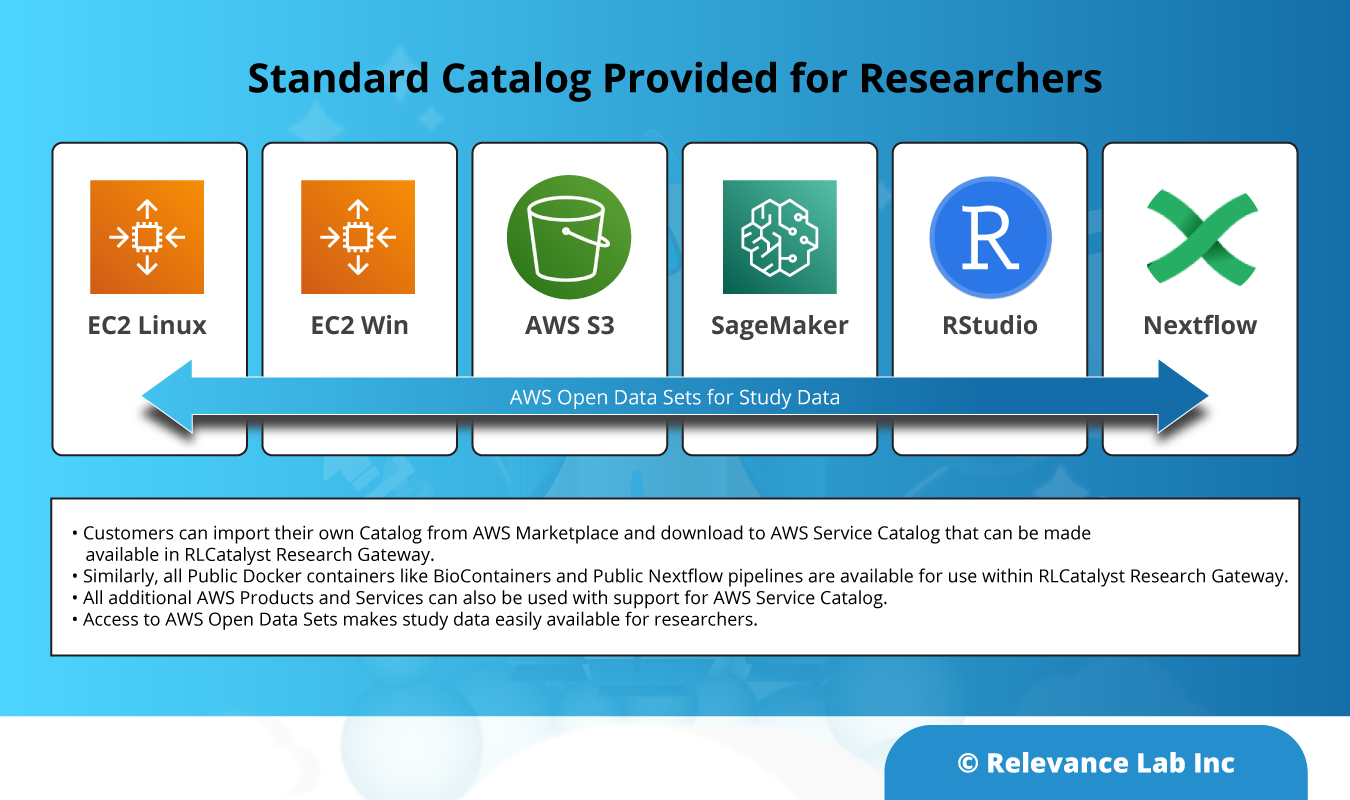

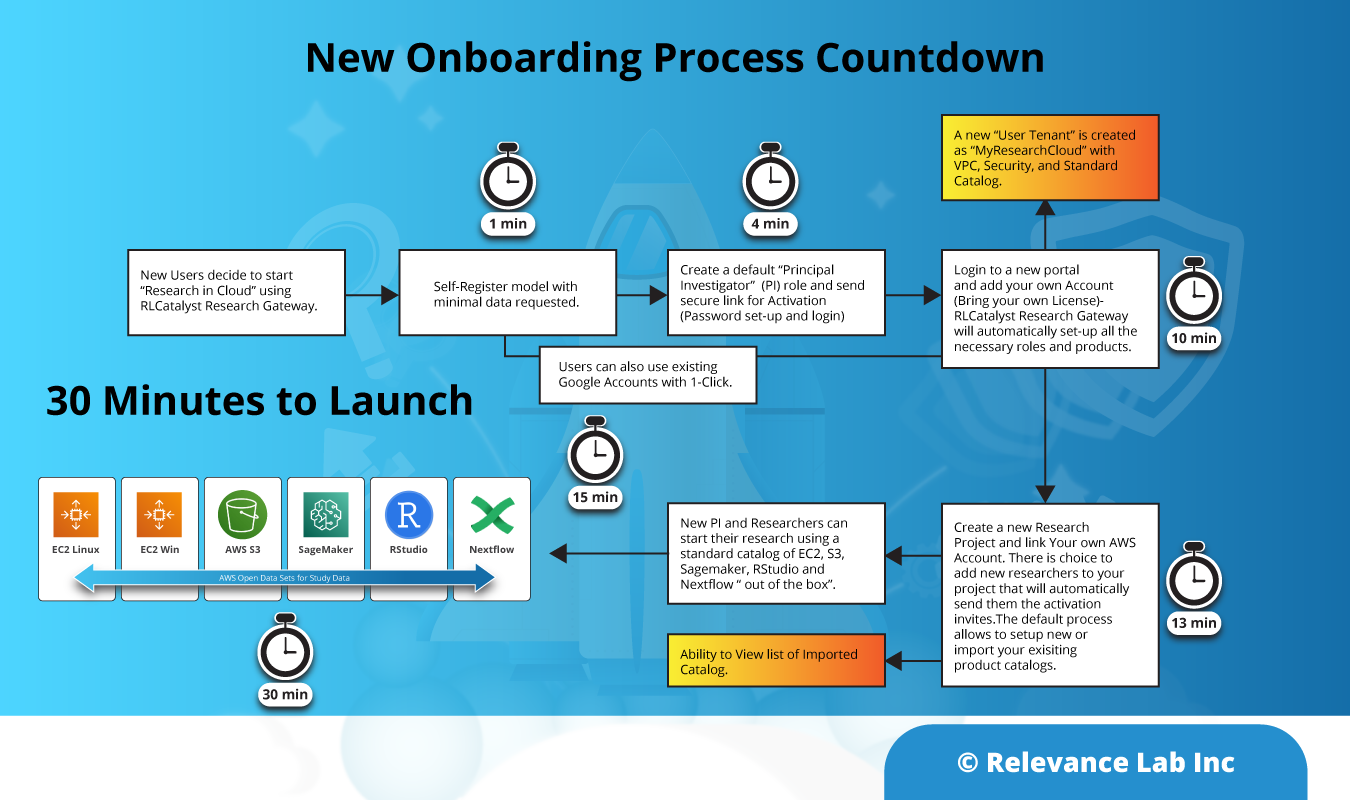

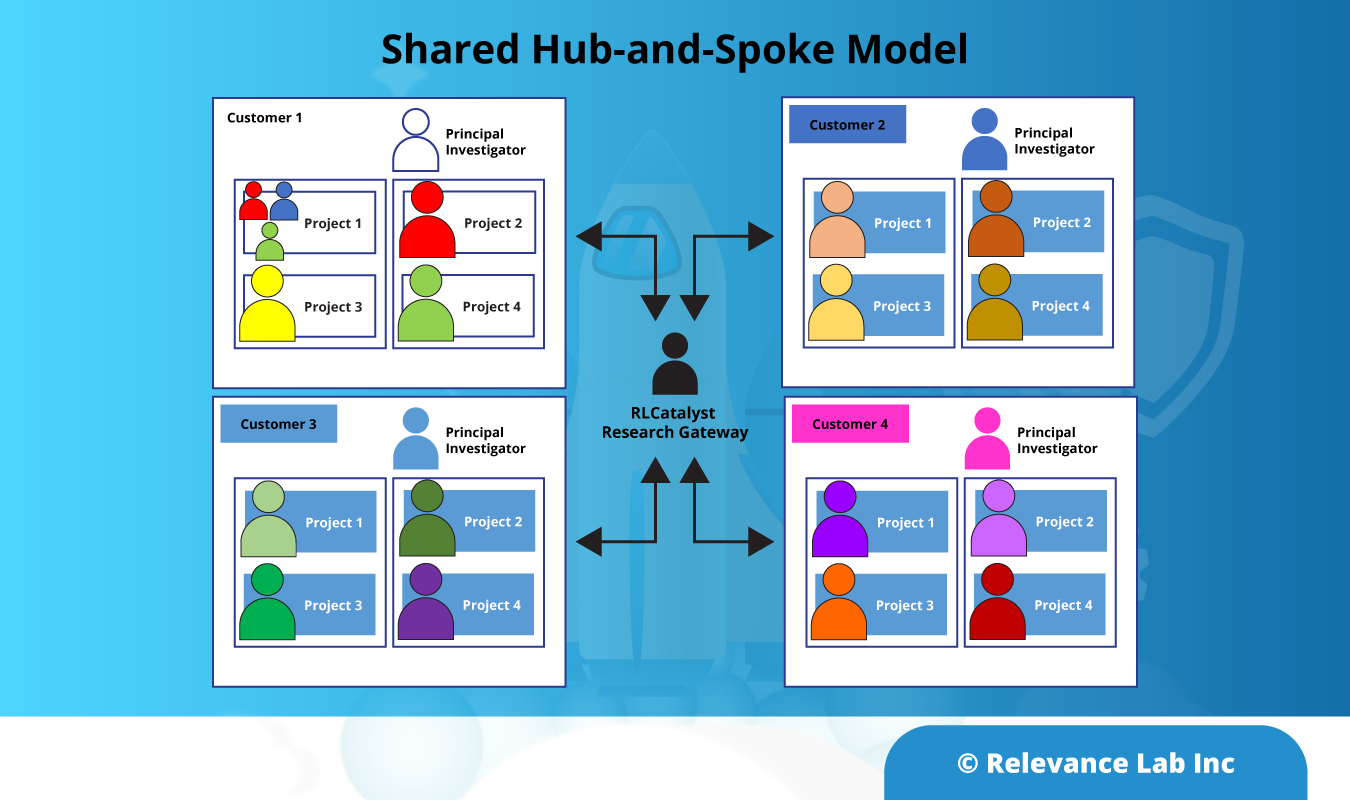

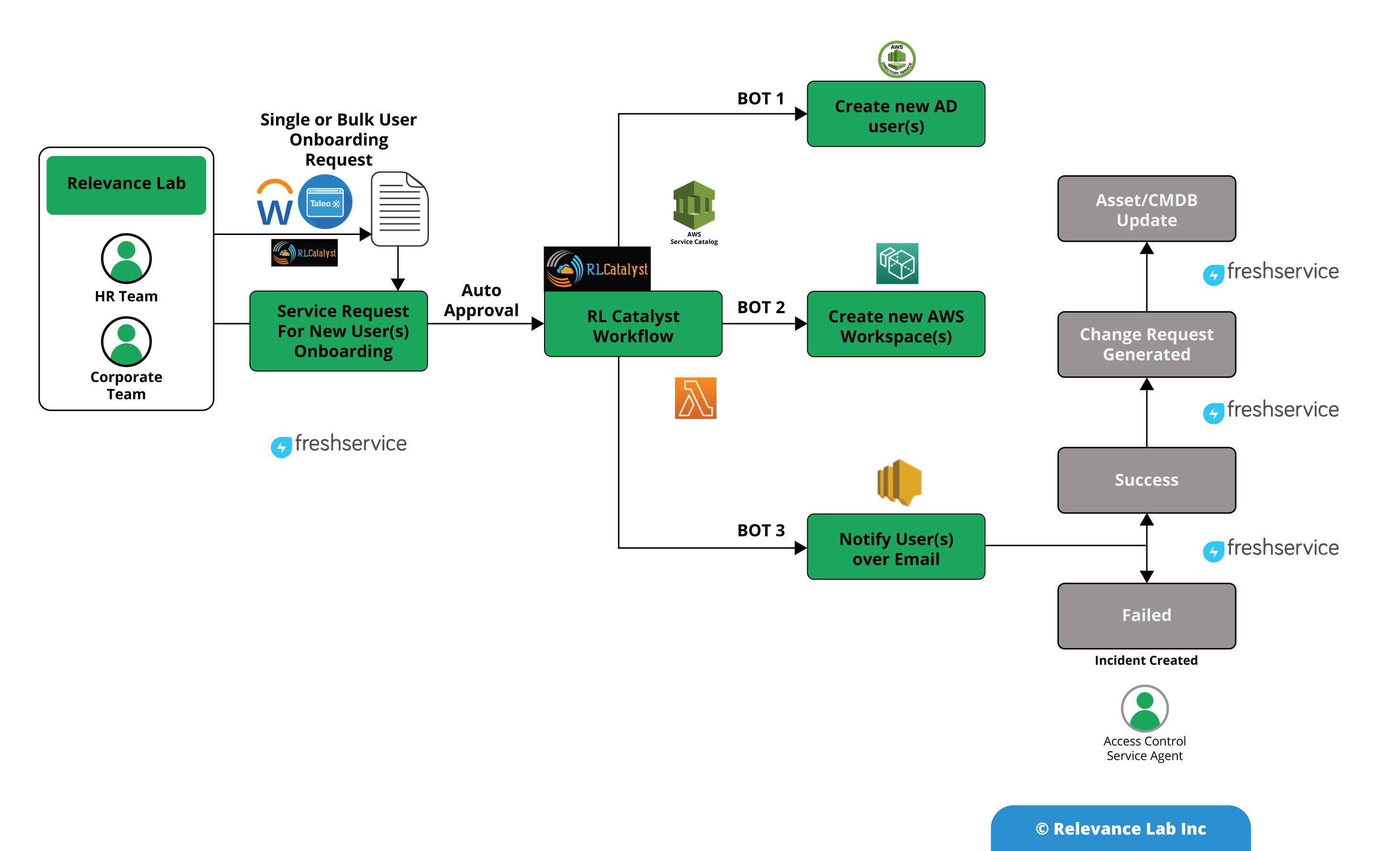

The basic question in our mind is always “How would customers like to use this system?” Our product is targeted at researchers and academics who want to use the scalability and elasticity of the AWS cloud for ad-hoc and high-performance computing needs. These users are not experts at using the AWS console, so we made things extremely simple for them. Researchers can order products with a single click, and the portal sets up their resources without the user needing to understand any of the underlying complexities. Users can also interact with the products through the portal, eliminating the need to set up anything outside the portal (though they always have that option).

We also kept in mind the administrators of the system, for whom this might be just one among many systems that they must manage. Thus, we made it easy for the administrator to add AWS accounts, create Organizational Units, and integrate Identity Providers. Our goal was for administrators to get the system up and running in less than 30 minutes.

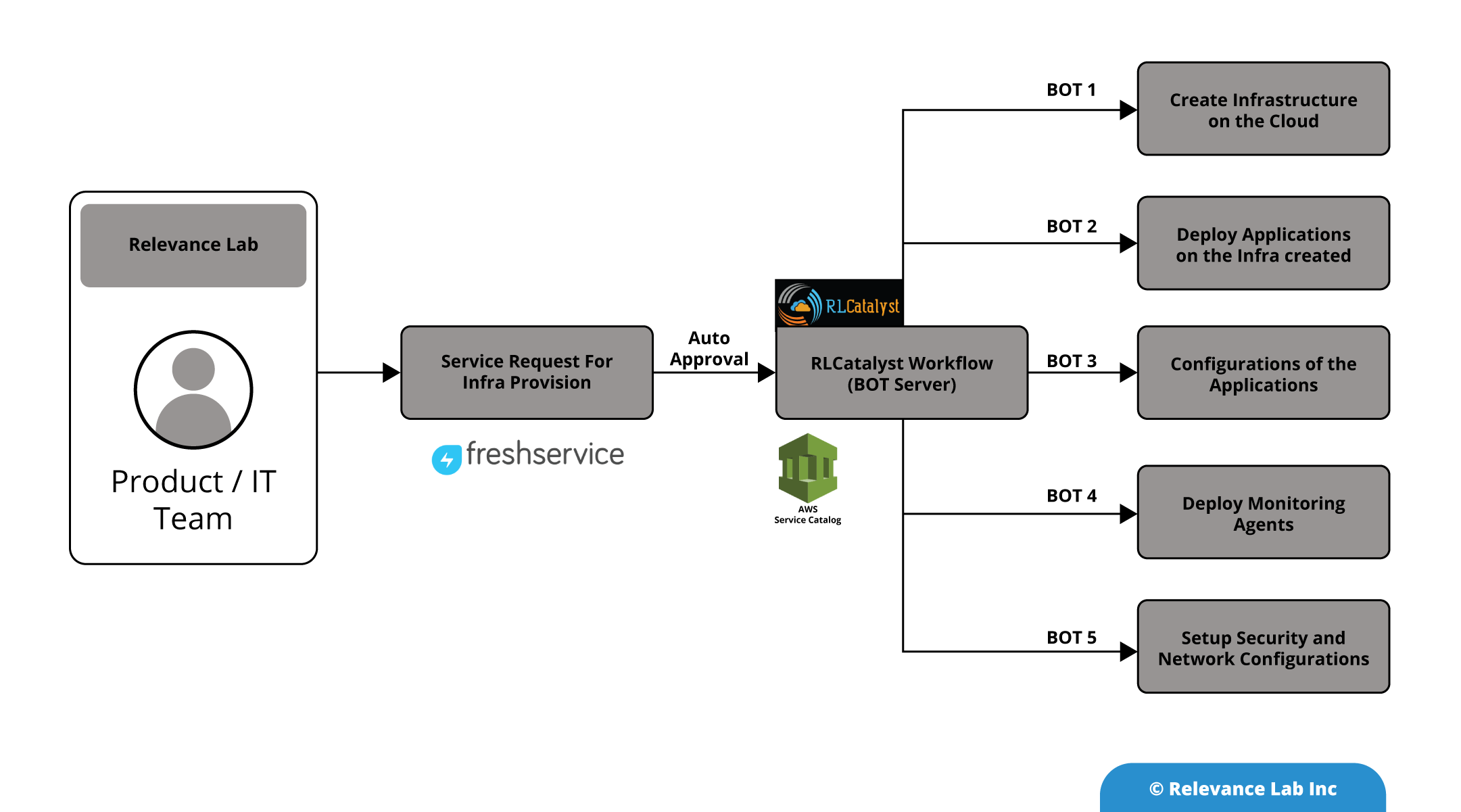

2. Scalability, performance, and reliability

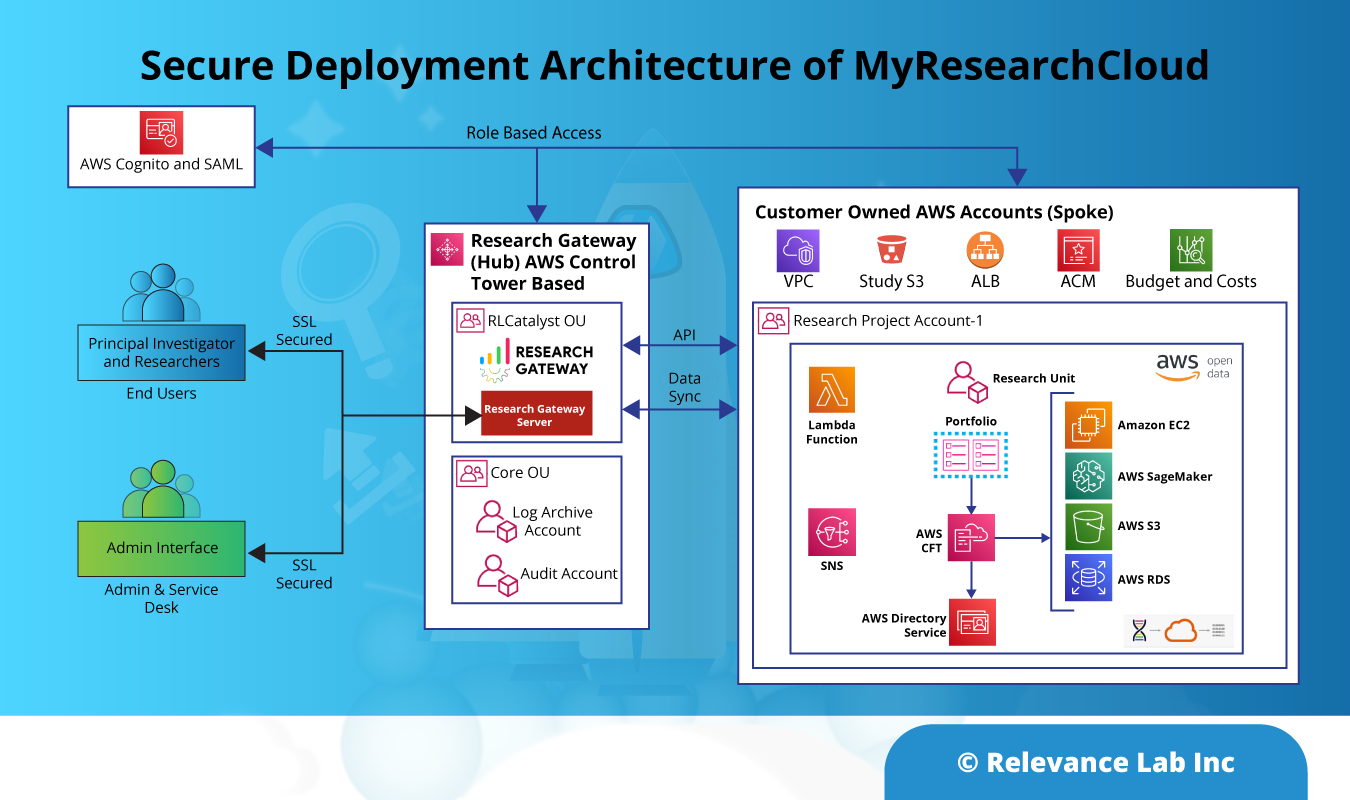

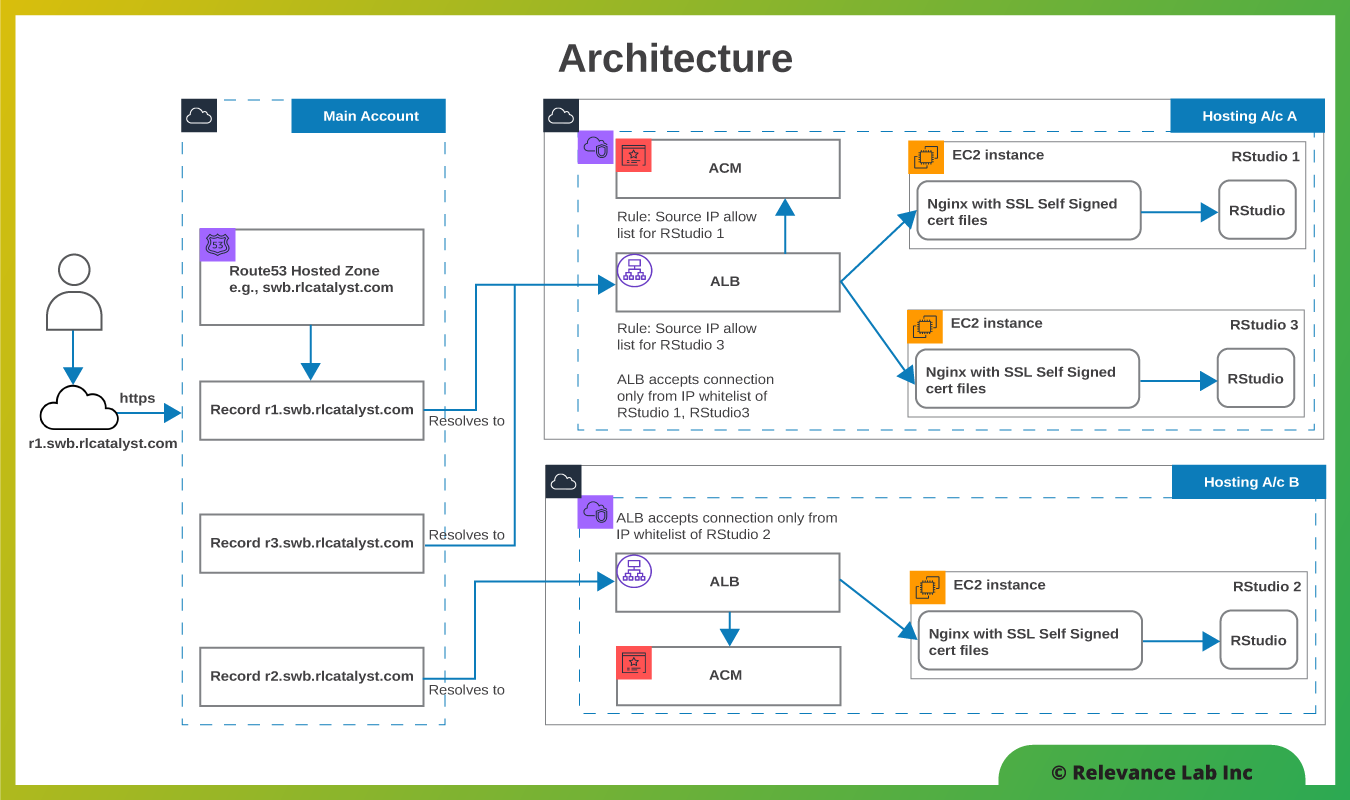

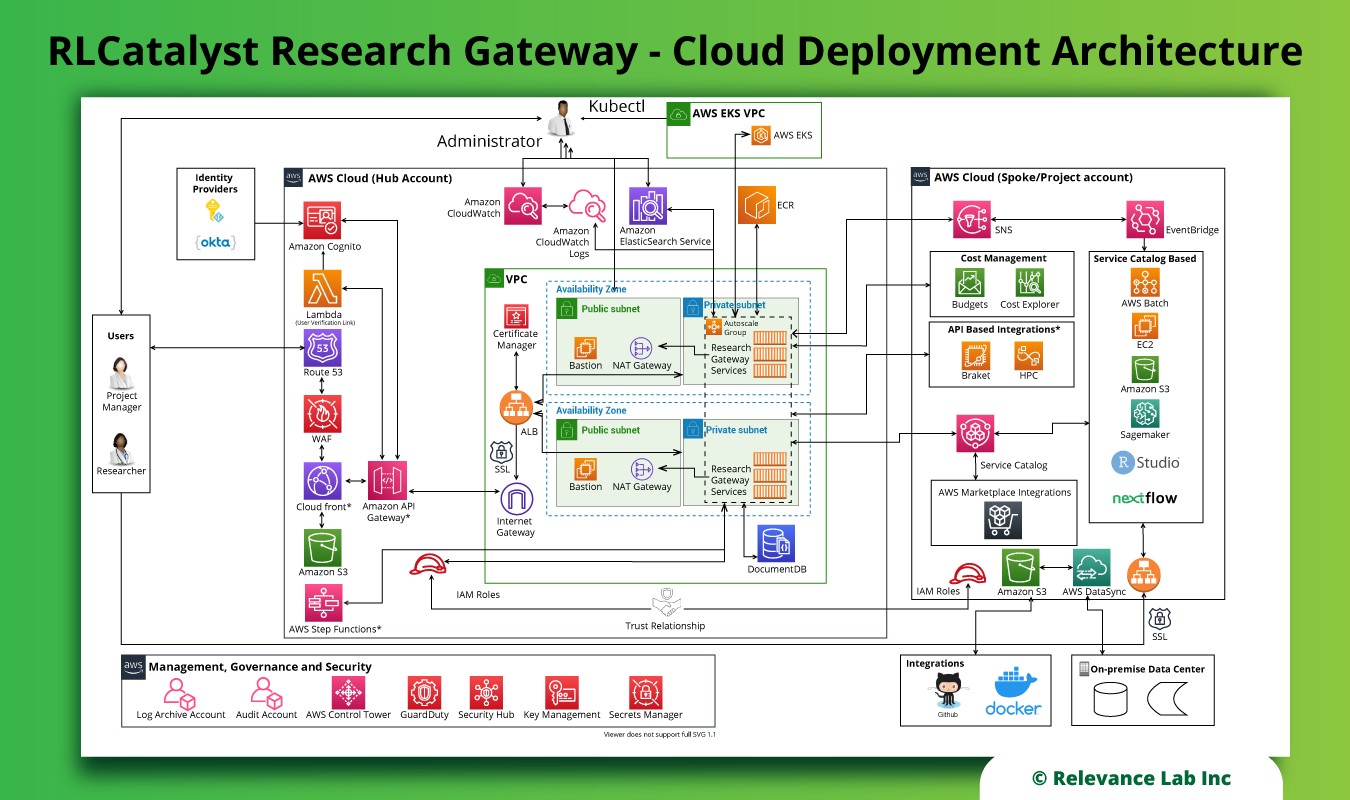

We followed the best practices recommended by AWS and, where possible, used standardized architecture models so that users would find the system both easy to use and familiar. For example, we deploy our system into a VPC with public and private subnets. The subnets are spread across multiple Availability Zones to guard against the possibility of one Availability Zone going down. The computing instances are deployed in the private subnets to prevent unauthorized access. We also use auto-scaling groups so that the system can pull in additional compute instances when the load increases.
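As a rough illustration of the subnet layout described above, the following sketch carves a VPC address range into one public and one private subnet per Availability Zone. The CIDR range, zone names, and the `plan_subnets` helper are assumptions made for this example and are not taken from the RLCatalyst Research Gateway deployment.

```python
import ipaddress


def plan_subnets(vpc_cidr, zones, new_prefix=24):
    """Assign one public and one private subnet to each Availability Zone.

    Hypothetical helper: it only plans address ranges and does not call AWS.
    """
    network = ipaddress.ip_network(vpc_cidr)
    # subnets() yields non-overlapping, equal-sized blocks in address order.
    blocks = network.subnets(new_prefix=new_prefix)
    plan = []
    for tier in ("public", "private"):
        for zone in zones:
            plan.append({"zone": zone, "tier": tier, "cidr": str(next(blocks))})
    return plan


# Assumed example values: a /16 VPC spread over two zones.
layout = plan_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b"])
for subnet in layout:
    print(subnet["zone"], subnet["tier"], subnet["cidr"])
```

Because every zone receives both tiers, losing a single Availability Zone still leaves a public and a private subnet available in the remaining zone.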

3. Time to market

One of our main goals was to bring the product to market quickly and put it in front of customers to gain early and valuable feedback. Developing the product as a partner of AWS was a great help, since we were able to use many AWS services for common application needs without spending time developing our own components for well-known use cases. For example, RLCatalyst Research Gateway does its user management via Amazon Cognito, which provides the facility to create users, roles, and groups as well as the ability to interface with other Identity Provider systems.

Similarly, we use Amazon DocumentDB (with MongoDB compatibility) as our database. This allows developers to use a local MongoDB instance, while QA and production systems use Amazon DocumentDB with highly available multi-AZ clusters and automated backups via AWS Backup and snapshots.
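One way to keep a single code path across a local MongoDB instance and a managed DocumentDB cluster is to vary only the connection string by environment. The sketch below is a minimal illustration under stated assumptions: the `database_uri` helper, the environment names, the database name, and the host names are all hypothetical, and the query options follow the typical pattern for DocumentDB connections (TLS on, retryable writes off) rather than the product's actual configuration. A real connection would also need the Amazon CA bundle, which is omitted here.

```python
from urllib.parse import quote_plus


def database_uri(env, host, user="", password="", db_name="research_gateway"):
    """Build a MongoDB-style URI for the given environment (illustrative only)."""
    if env == "dev":
        # Local MongoDB instance: no credentials or TLS in this sketch.
        return f"mongodb://{host}:27017/{db_name}"
    # QA and production: managed cluster reached over TLS.
    credentials = f"{quote_plus(user)}:{quote_plus(password)}"
    options = "tls=true&replicaSet=rs0&readPreference=secondaryPreferred&retryWrites=false"
    return f"mongodb://{credentials}@{host}:27017/{db_name}?{options}"


print(database_uri("dev", "localhost"))
print(database_uri("prod", "example-cluster.docdb.example.com", "app", "p@ss"))
```

Percent-encoding the credentials keeps characters such as `@` in a password from being mistaken for the separator before the host name.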

4. Cost efficiency

This is one of the key concerns for every administrator. RLCatalyst Research Gateway uses a scalable architecture that lets the system scale up when the load is high and scale down when the load is low, which keeps costs under control. We deploy our solution on EKS clusters and use Amazon DocumentDB clusters for the database. This allows us to choose the size and instance type according to cost considerations.

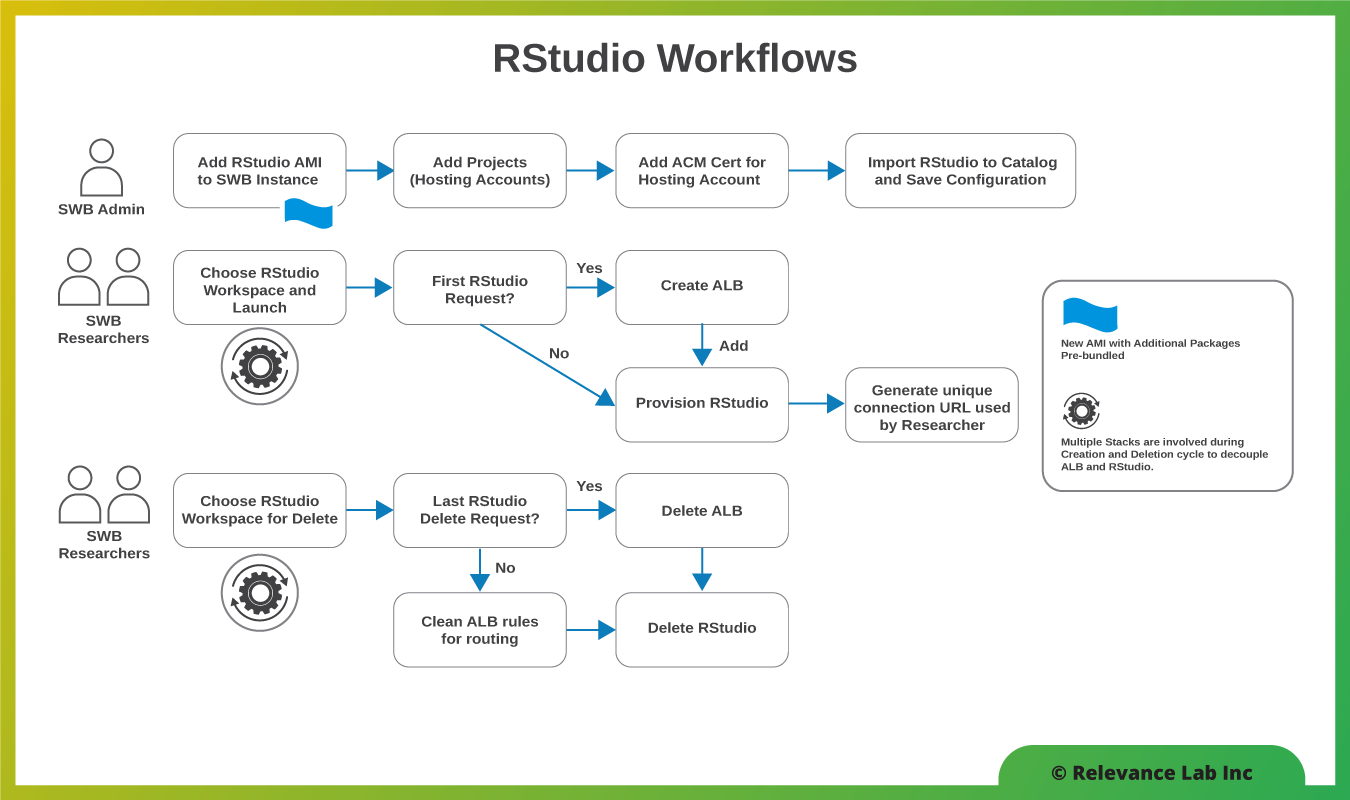

We have also brought in features like the automatic shutdown of resources, so that idle compute instances that are not running any jobs are shut down after 15 minutes of idle time. Additionally, even resources like ALBs are de-provisioned when the last compute instance behind them is de-provisioned.
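The idle-shutdown rule can be sketched as a simple filter over the fleet. This is a minimal illustration, not the product's implementation: the record fields (`running_jobs`, `last_activity`, `targets`), the instance identifiers, and both helper functions are assumptions made for the example. Only the 15-minute limit comes from the description above.

```python
from datetime import datetime, timedelta, timezone

IDLE_LIMIT = timedelta(minutes=15)  # idle time from the text above


def instances_to_stop(instances, now, idle_limit=IDLE_LIMIT):
    """Return ids of instances with no running jobs that have been idle long enough."""
    return [
        inst["id"]
        for inst in instances
        if inst["running_jobs"] == 0 and now - inst["last_activity"] >= idle_limit
    ]


def load_balancers_to_remove(load_balancers):
    """Return names of load balancers that no longer have any instance behind them."""
    return [lb["name"] for lb in load_balancers if not lb["targets"]]


now = datetime(2022, 1, 1, 12, 0, tzinfo=timezone.utc)
fleet = [
    {"id": "i-busy", "running_jobs": 2, "last_activity": now - timedelta(minutes=40)},
    {"id": "i-idle", "running_jobs": 0, "last_activity": now - timedelta(minutes=20)},
    {"id": "i-recent", "running_jobs": 0, "last_activity": now - timedelta(minutes=5)},
]
balancers = [
    {"name": "alb-in-use", "targets": ["i-busy"]},
    {"name": "alb-empty", "targets": []},
]

print(instances_to_stop(fleet, now))        # ['i-idle']
print(load_balancers_to_remove(balancers))  # ['alb-empty']
```

Checking for running jobs before checking the clock means a long job is never interrupted just because nobody has interacted with the instance recently.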

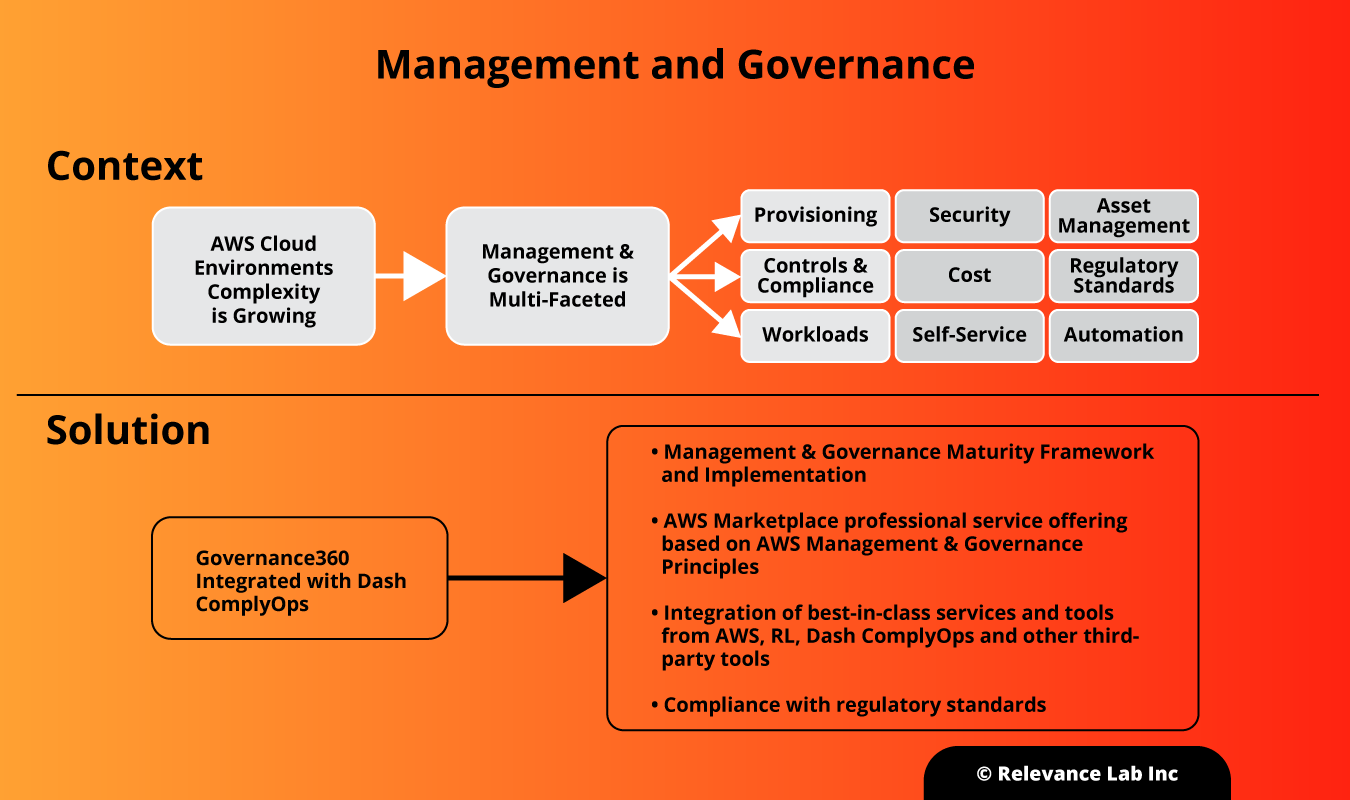

We provide a robust cost governance dashboard that gives users insight into their usage and budget consumption.
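The arithmetic behind a budget-consumption view is straightforward, and the sketch below shows one way it could be computed. The `budget_status` helper, the alert thresholds (50%, 80%, 100%), and the sample figures are assumptions chosen for illustration and do not describe the actual dashboard.

```python
def budget_status(budget, spent, thresholds=(0.5, 0.8, 1.0)):
    """Summarize budget consumption and list the alert thresholds already crossed."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    consumed = spent / budget
    return {
        "consumed_pct": round(consumed * 100, 1),
        "remaining": round(budget - spent, 2),
        "alerts": [t for t in thresholds if consumed >= t],
    }


# Assumed example: a 1000.00 budget with 820.00 already spent.
status = budget_status(budget=1000.0, spent=820.0)
print(status)  # {'consumed_pct': 82.0, 'remaining': 180.0, 'alerts': [0.5, 0.8]}
```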

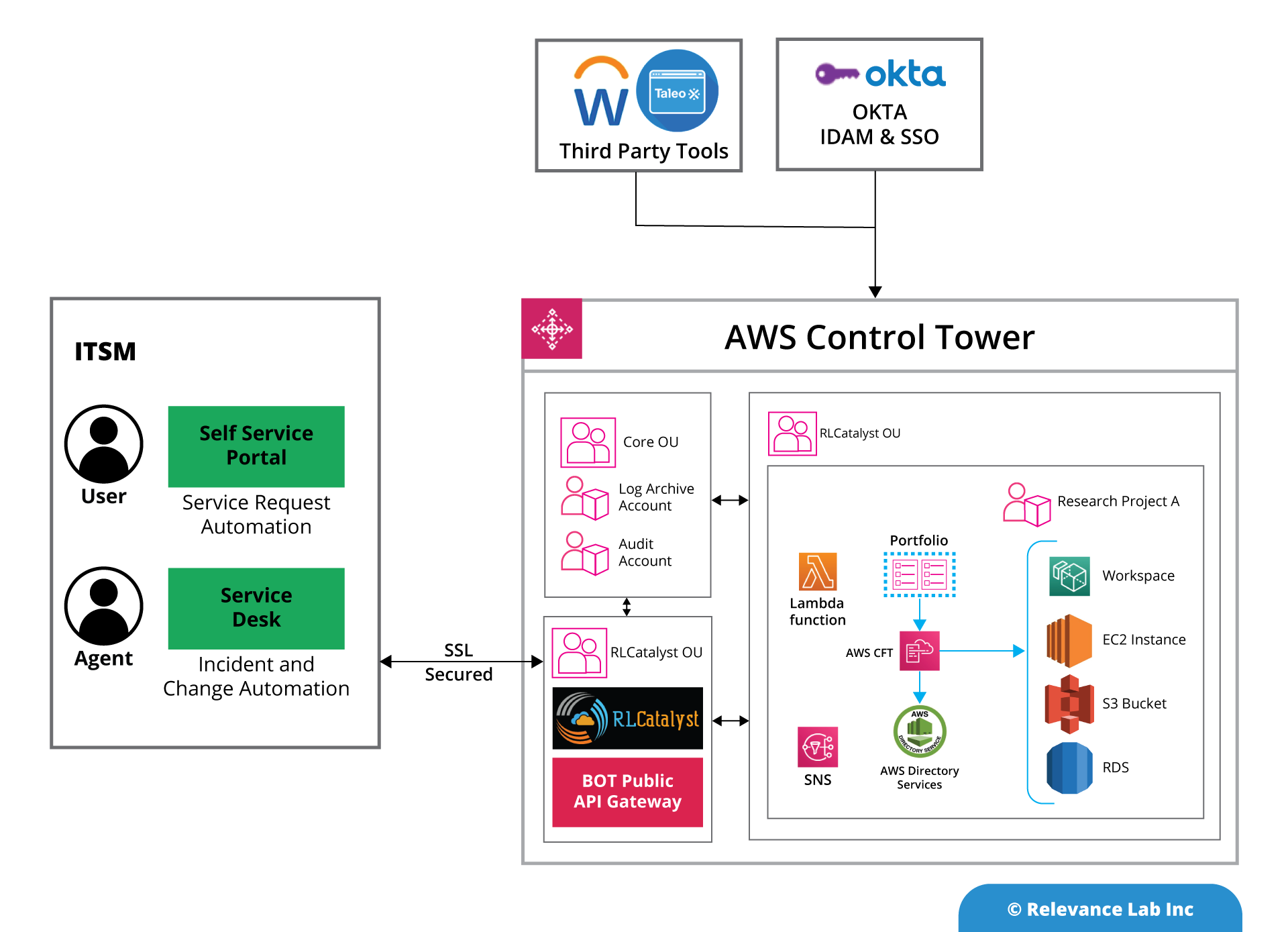

5. Security

Our target customers are in the research and scientific computing area, where data security is a key concern. We are frequently asked, “Will the system be secure? Can it help me meet regulatory and compliance requirements?” The RLCatalyst Research Gateway architecture is developed with security in mind at each level. The use of SSL certificates, encryption of data at rest, and the ability to initiate action at a distance are some of the architecture considerations.

Map of AWS Services

| AWS Service | Purpose | Benefits |

|---|---|---|

| Amazon EC2, Auto-scaling | Elastic Compute | Provides easily managed compute resources without need to manage hardware. Integrates well with Infrastructure as Code (IaC) |

| Amazon Virtual Private Cloud (VPC) | Networking | Provides isolation of resources, easy management of traffic, isolation of traffic. |

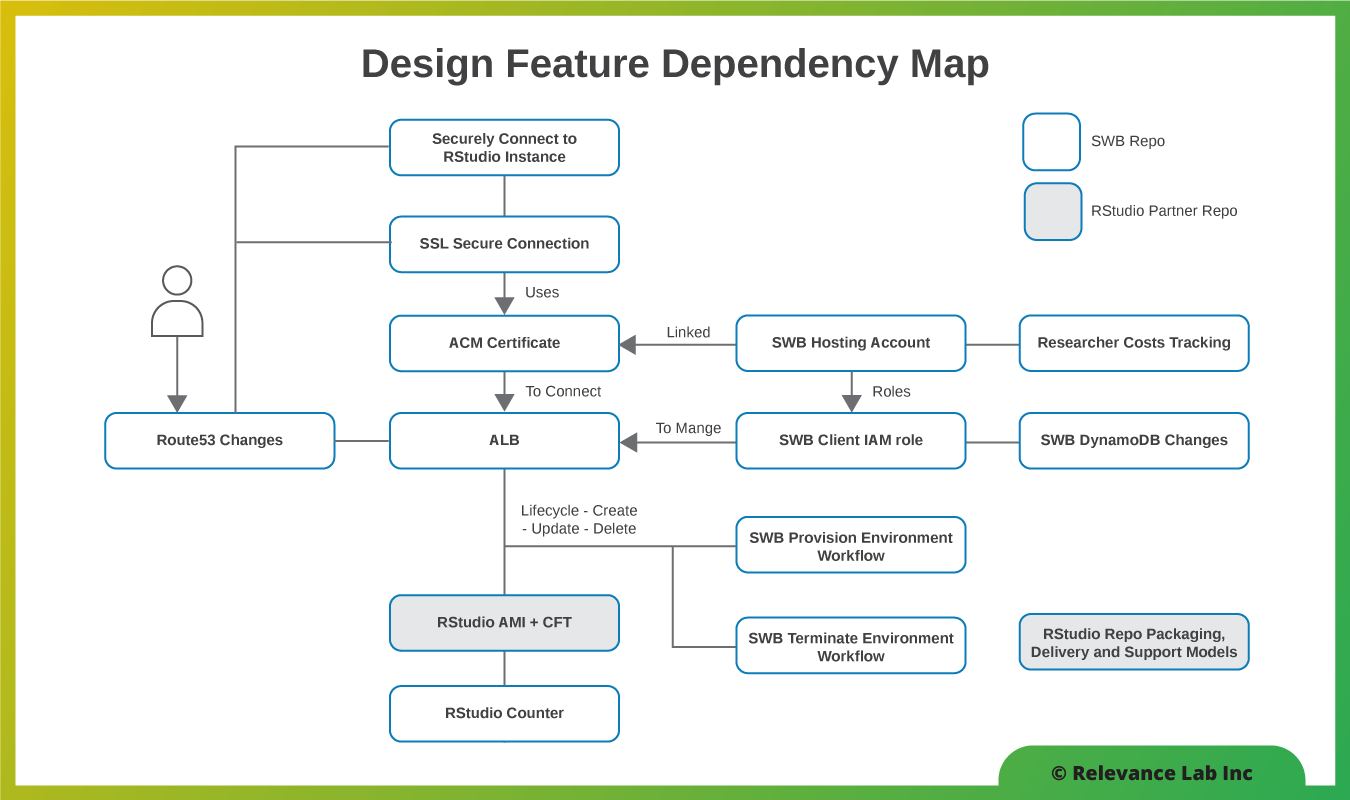

| Application Load Balancer, AWS Certificate Manager | Load-balancer, Secure end-point | Provides an easy way to provide a single end-point which can route traffic to multiple target groups. Integrates with AWS Certificate manager to provide SSL support. |

| AWS CostExplorer, AWS Budgets | Cost and Governance | Provides fine-grained cost and usage data. Notifications when budget thresholds are reached. |

| AWS Service Catalog | Catalog of approved IT Services on AWS | Provides control on what resources can be used in an AWS account. |

| AWS WAF (Web Application Firewall) | Application Firewall | Helps manage malicious traffic |

| Amazon Route53 | DNS (Domain Name System) Services | Provides hosted zones and API access to manage the same. |

| Amazon Cloudfront | CDN (Content Delivery Network) | Caches content closest to end-users to reduce latency and improve customer experience. |

| AWS Cognito | User Management | Authentication and authorization |

| AWS Identity and Access Management (IAM) | Identity Management | Provides support for granular control based on policies and roles. |

| AWS DocumentDb | NoSQL database | MongoDB compatible API |

Validation of the Solution

It is always good to validate your solution with an external review from the experts. AWS offers such an opportunity to all its partners by way of the AWS Foundational Technical Review. The review is valid for two years and is free of cost to partners. Looking at our design through the FTR Lens enabled us to see where our design could get better in terms of using the best practices (especially in the areas of security and cost-efficiency). Once these changes were implemented, we earned the “Reviewed by AWS” badge.

Summary

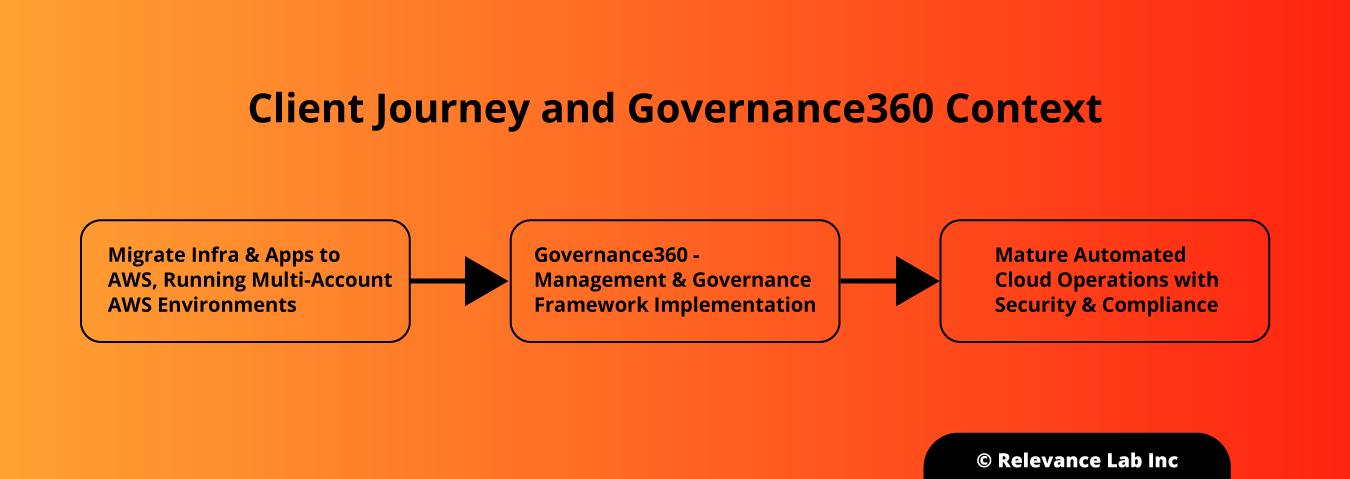

Relevance Lab developed the RLCatalyst Research Gateway in close partnership with AWS. One of the excellent tools available from AWS for any software architecture team is the AWS Well-Architected Framework, whose pillars include Operational Excellence, Security, Reliability, Performance Efficiency, and Cost Optimization. Working within this framework greatly facilitates the development of a robust architecture that serves not only current but also future goals.

To know more about RLCatalyst Research Gateway architecture, feel free to write to marketing@relevancelab.com.

References

How to speed up the GEOS-Chem Earth Science Research using AWS Cloud?

Driving Frictionless Scientific Research on AWS Cloud

Leveraging AWS HPC for Accelerating Scientific Research on Cloud

Health Informatics and Genomics on AWS with RLCatalyst Research Gateway

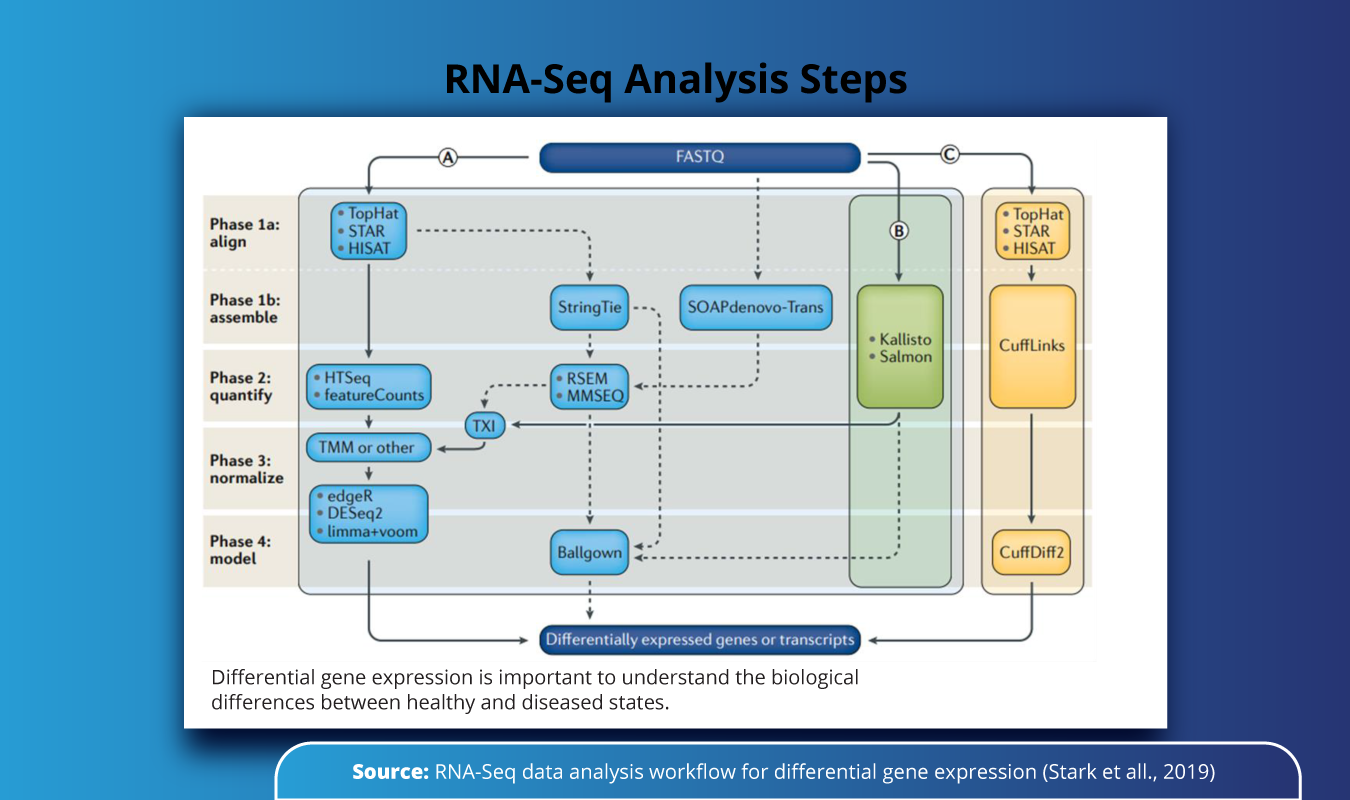

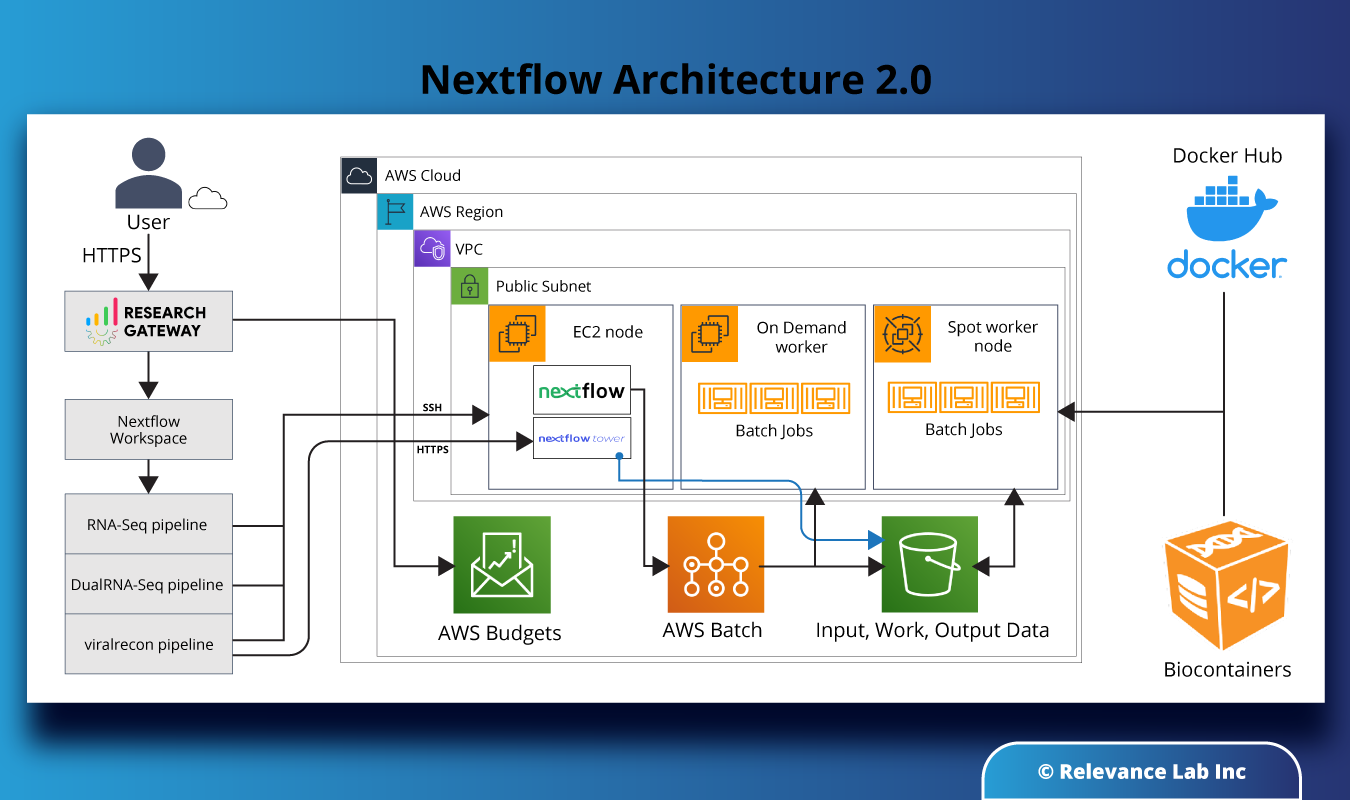

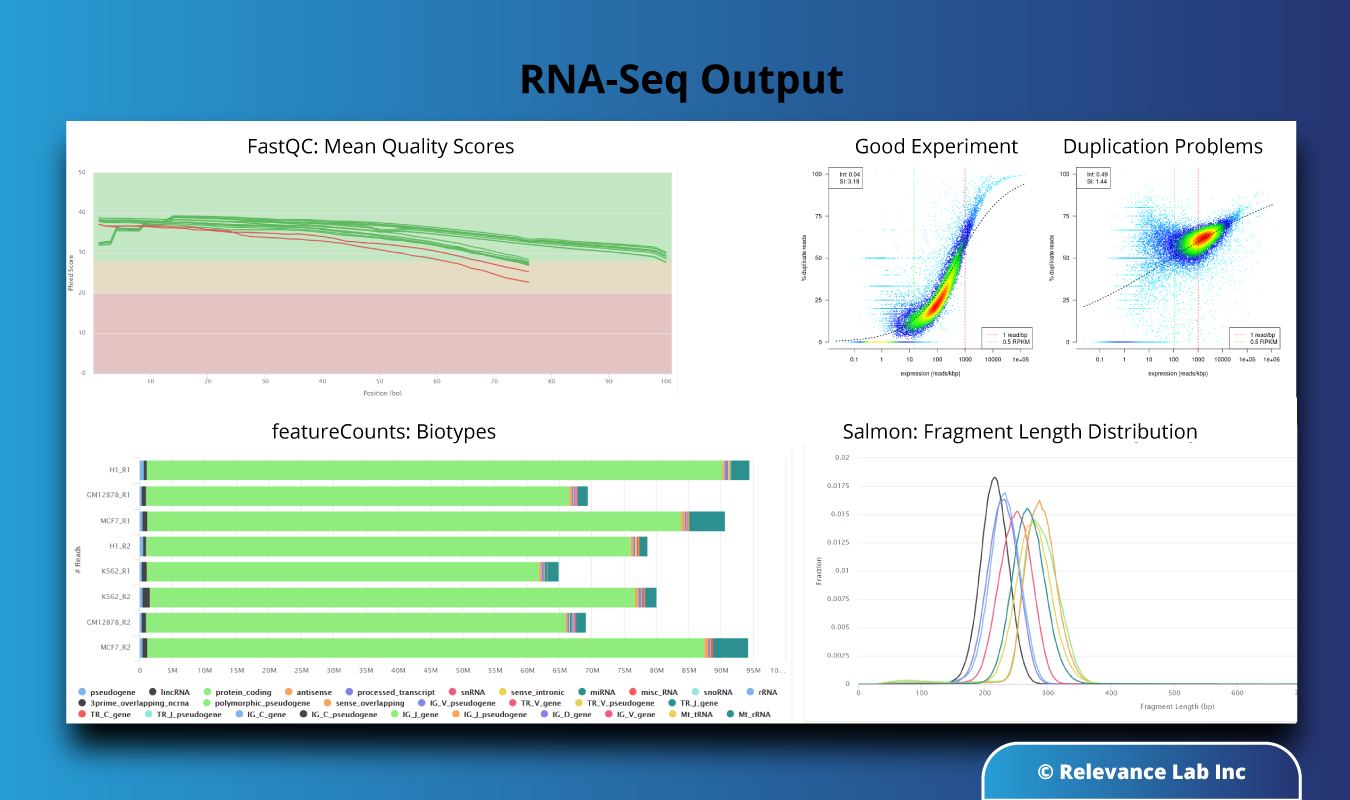

Enabling Researchers with Next-Generation Sequencing (NGS) Leveraging Nextflow and AWS

8-Steps to Set-Up RLCatalyst Research Gateway