
Using Git configuration management integration in Application Development to achieve higher velocity and quality when releasing value-added features and products

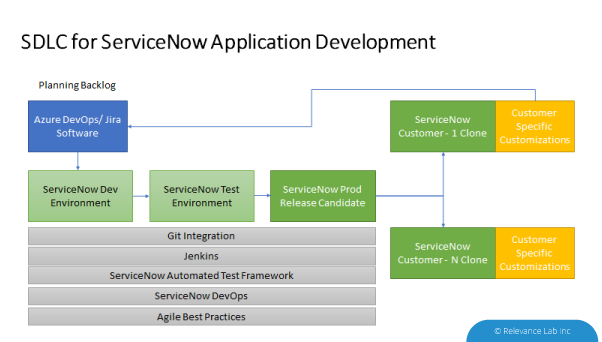

ServiceNow offers a fantastic platform for developing applications. ServiceNow takes care of infrastructure, security, application management, and scaling, so application developers can concentrate on their core competencies within their application domain. However, companies that develop applications on ServiceNow and distribute them to multiple customers face several challenges. In this article, we look at some of those challenges and how to address them.

A typical ServiceNow customization or application is distributed with several of the following elements:

- Update Sets

- Template changes

- Data Migration

- Role creation

- Script changes

Distribution of an application is typically done via an Update Set, which captures all the delta changes on top of a well-known baseline. This baseline could be the base version of a specific ServiceNow release (like Orlando or Madrid) plus a specific patch level for that release. To understand the intricacies of distributing an application, we first have to understand the difference between a global application and a scoped application.

Typically, only applications developed by ServiceNow are in the global scope. However, before the Application Scoping feature was released, custom applications also resided in the global scope. For an application in the global scope, this means that other applications can read its data, make API requests to it, and change its configuration records.

Scoped applications, which are now the default, are uniquely identified, along with their associated artifacts, by a namespace identifier. No other application can access their data, configuration records, or API unless specifically allowed by the application administrator.
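The difference between the two scopes can be sketched as a small access check. This is only a toy model of the rule described above, not ServiceNow's actual implementation: the `App` class, the `can_access` function, and the namespace strings such as `x_acme_assets` are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field

GLOBAL_SCOPE = "global"


@dataclass
class App:
    # Namespace identifier of the application (hypothetical example values below).
    scope: str
    # Scopes that the application administrator has explicitly allowed in.
    allowed_callers: set = field(default_factory=set)


def can_access(caller: App, target: App) -> bool:
    """Return True if `caller` may use `target`'s data, records, or API."""
    if target.scope == GLOBAL_SCOPE:
        return True  # anything in the global scope is open to other applications
    if caller.scope == target.scope:
        return True  # an application can always use its own artifacts
    return caller.scope in target.allowed_callers


legacy = App(GLOBAL_SCOPE)
assets = App("x_acme_assets", allowed_callers={"x_acme_reports"})
reports = App("x_acme_reports")
other = App("x_other_tool")

print(can_access(other, legacy))    # True: global scope is open
print(can_access(other, assets))    # False: not on the allow list
print(can_access(reports, assets))  # True: explicitly allowed
```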

Distributing an application with update sets is easy when the application has a private scope, since there are no global data dependencies to deal with.

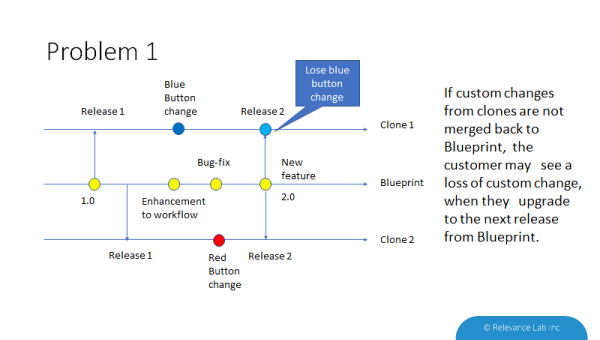

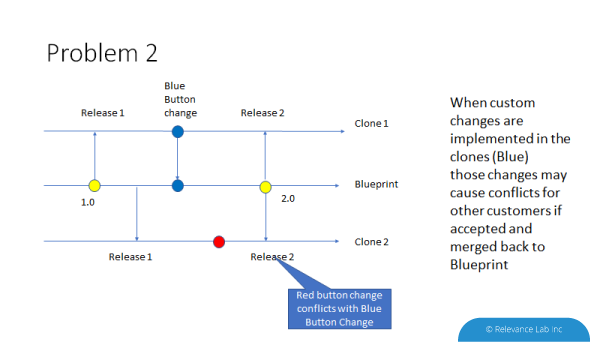

The second challenge is with customizations done after distributing an application. Consider the following scenario:

- An application release has been distributed (let’s call it 1.0).

- Customer-1 needs a customization in the application (say a blue button is to be added in Form-1). Now Customer-1 has 1.0 + the blue button change.

- Customer-2 needs a different customization (say a red button is to be added in Form-1).

- The application developer has also done some other changes in the application and plans to release the 2.0 version of the application.

Problem-1: If application 2.0 is released and Customer-1 upgrades to that release, they lose the blue button change. They have to redo the change and retest.

Problem-2: If the developer accepts the blue button change into the application and releases 2.0 with it, then when Customer-2 upgrades to 2.0, their red button change conflicts with the blue button change.

These two problems can be solved by using version control with Git. When the application developers want to accept the blue button change into the 2.0 release, they can use Git's merge feature to merge the commit containing the blue button change from Customer-1's repo into their own repo.
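To see why a merge helps, here is a minimal sketch of the three-way merge idea, assuming an application can be flattened into a dictionary of settings. It is a simplified model and not how Git stores or merges files: the `three_way_merge` function and keys such as `form1.button` are hypothetical, and values are assumed never to be `None`.

```python
def three_way_merge(base, ours, theirs):
    """Merge two sets of changes made on top of a common base.

    Returns (merged, conflicts). A key is in conflict when both sides
    changed it to different values; our value is kept until someone
    resolves the conflict by hand.
    """
    merged, conflicts = {}, []
    for key in sorted(set(base) | set(ours) | set(theirs)):
        b, o, t = base.get(key), ours.get(key), theirs.get(key)
        if o == t:
            value = o            # both sides agree
        elif o == b:
            value = t            # only their side changed the key
        elif t == b:
            value = o            # only our side changed the key
        else:
            conflicts.append(key)
            value = o            # both changed it differently
        if value is not None:
            merged[key] = value
    return merged, conflicts


release_1_0 = {"form1.title": "Request", "form1.layout": "single"}
developer = {"form1.title": "Request", "form1.layout": "two-column"}
customer_1 = {**release_1_0, "form1.button": "blue"}
customer_2 = {**release_1_0, "form1.button": "red"}

# The developer merges Customer-1's blue button into the 2.0 work.
release_2_0, conflicts = three_way_merge(release_1_0, developer, customer_1)
print(release_2_0, conflicts)

# Customer-2's red button now collides with the blue button in 2.0.
_, conflicts_2 = three_way_merge(release_1_0, release_2_0, customer_2)
print(conflicts_2)  # ['form1.button']
```

The first merge keeps both the developer's layout change and the blue button, so Problem-1 goes away. The second merge does not silently drop anything: the clash on the button is reported as a conflict, which is how Problem-2 becomes visible instead of being discovered after the upgrade.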

When Customer-2 needs to upgrade to version 2.0, they use Git's stash feature to store their red button change prior to the upgrade. After the upgrade, they can apply the stashed change to get the red button back into their instance.
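The stash-then-reapply flow can be sketched in the same spirit. This is a rough model of the idea behind `git stash` followed by `git stash pop`, again assuming the instance is a flat dictionary of settings. The helper names `local_changes` and `upgrade_with_stash` are hypothetical, and the choice to keep the customer's value while flagging the key is an assumption of this sketch; real Git would stop and ask for the conflict to be resolved.

```python
def local_changes(installed, instance):
    """Settings the customer added or edited on top of the installed release."""
    return {k: v for k, v in instance.items() if installed.get(k) != v}


def upgrade_with_stash(installed, instance, new_release):
    """Set local changes aside, take the new release, then re-apply them.

    Returns (upgraded_instance, needs_review), where needs_review lists
    keys that both the customer and the new release changed differently.
    """
    stashed = local_changes(installed, instance)   # comparable to stashing
    upgraded = dict(new_release)                   # clean upgrade
    needs_review = []
    for key, value in stashed.items():             # comparable to applying the stash
        release_changed = new_release.get(key) != installed.get(key)
        if release_changed and new_release.get(key) != value:
            needs_review.append(key)
        upgraded[key] = value                      # assumption: customer's value wins
    return upgraded, needs_review


release_1_0 = {"form1.title": "Request"}
customer_2 = {"form1.title": "Request", "form1.button": "red"}
release_2_0 = {"form1.title": "Service Request", "form1.button": "blue"}

upgraded, needs_review = upgrade_with_stash(release_1_0, customer_2, release_2_0)
print(upgraded)      # {'form1.title': 'Service Request', 'form1.button': 'red'}
print(needs_review)  # ['form1.button']
```

Customer-2 picks up the new title from 2.0 and keeps the red button, and the key that clashed with the blue button is listed so it can be retested.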

The ServiceNow source control integration allows application developers to integrate with a Git repository to save and manage multiple versions of an application from a non-production instance.

Using the best practices of DevOps and version control with Git, it is much easier to deliver software applications to multiple customers while dealing with the complexities of customized versions. To know more about ServiceNow application best practices and DevOps, feel free to contact: marketing@relevancelab.com

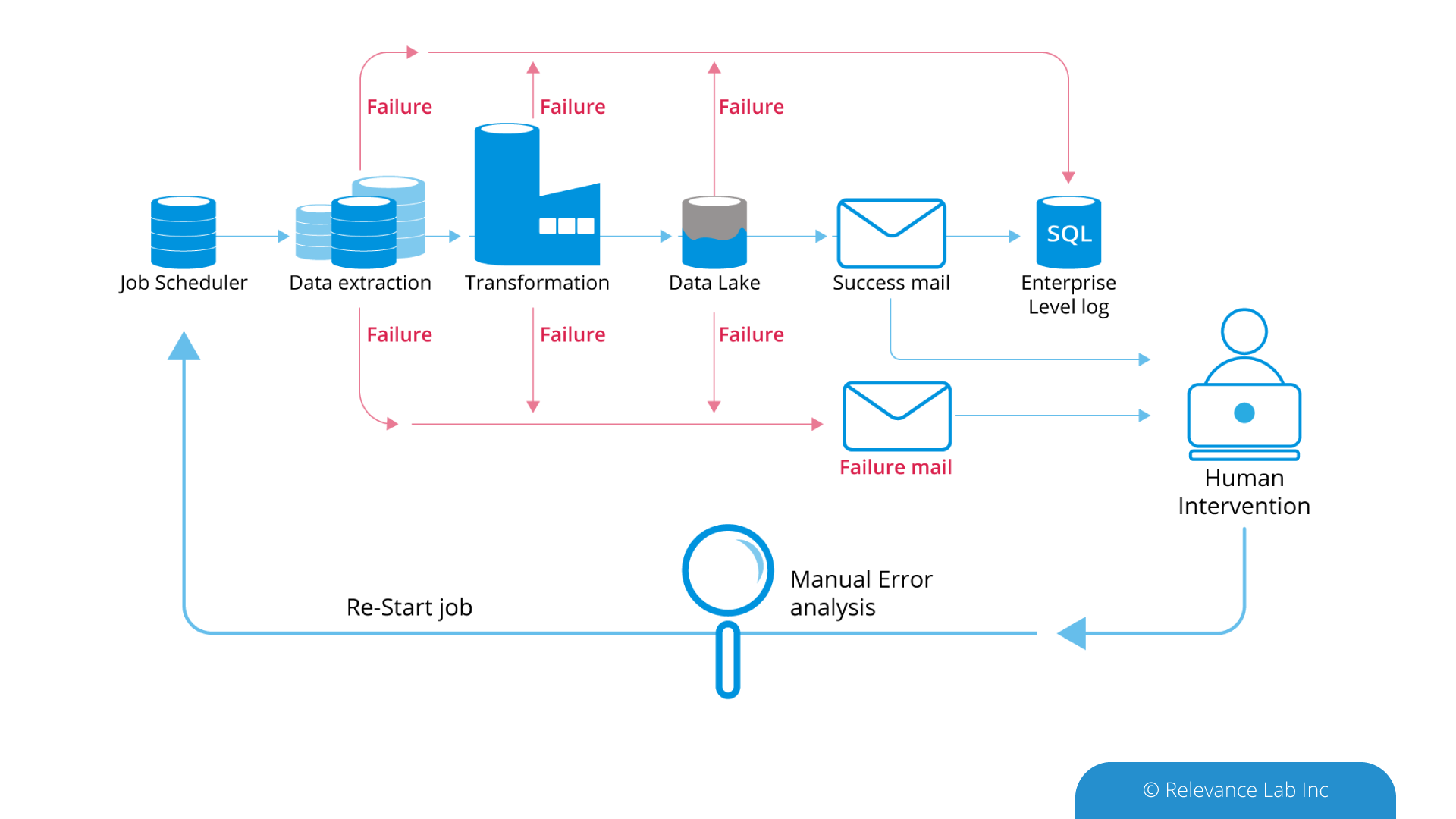

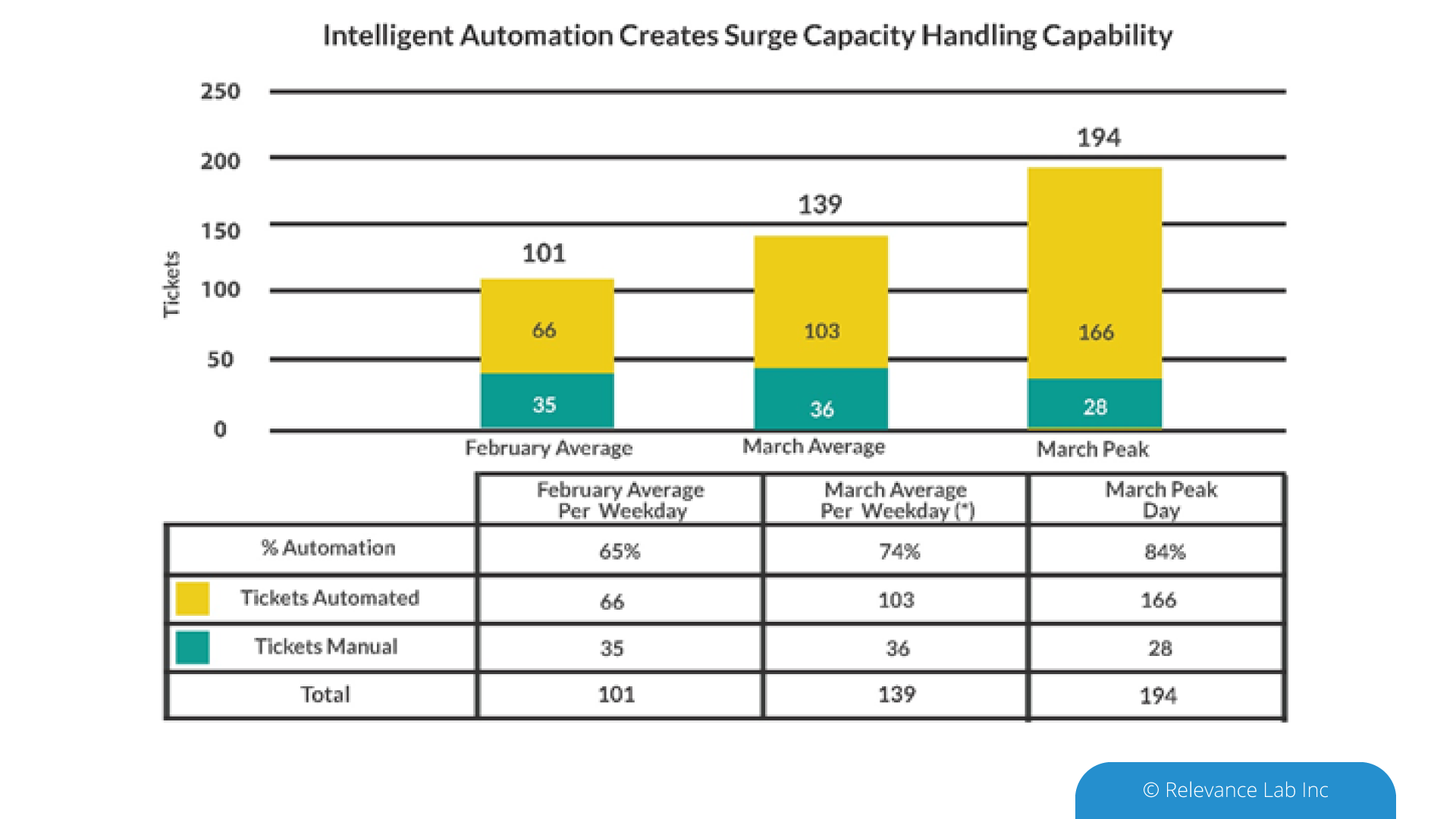

Figure 1: Intelligent Automation Eliminated Service Desk Impact


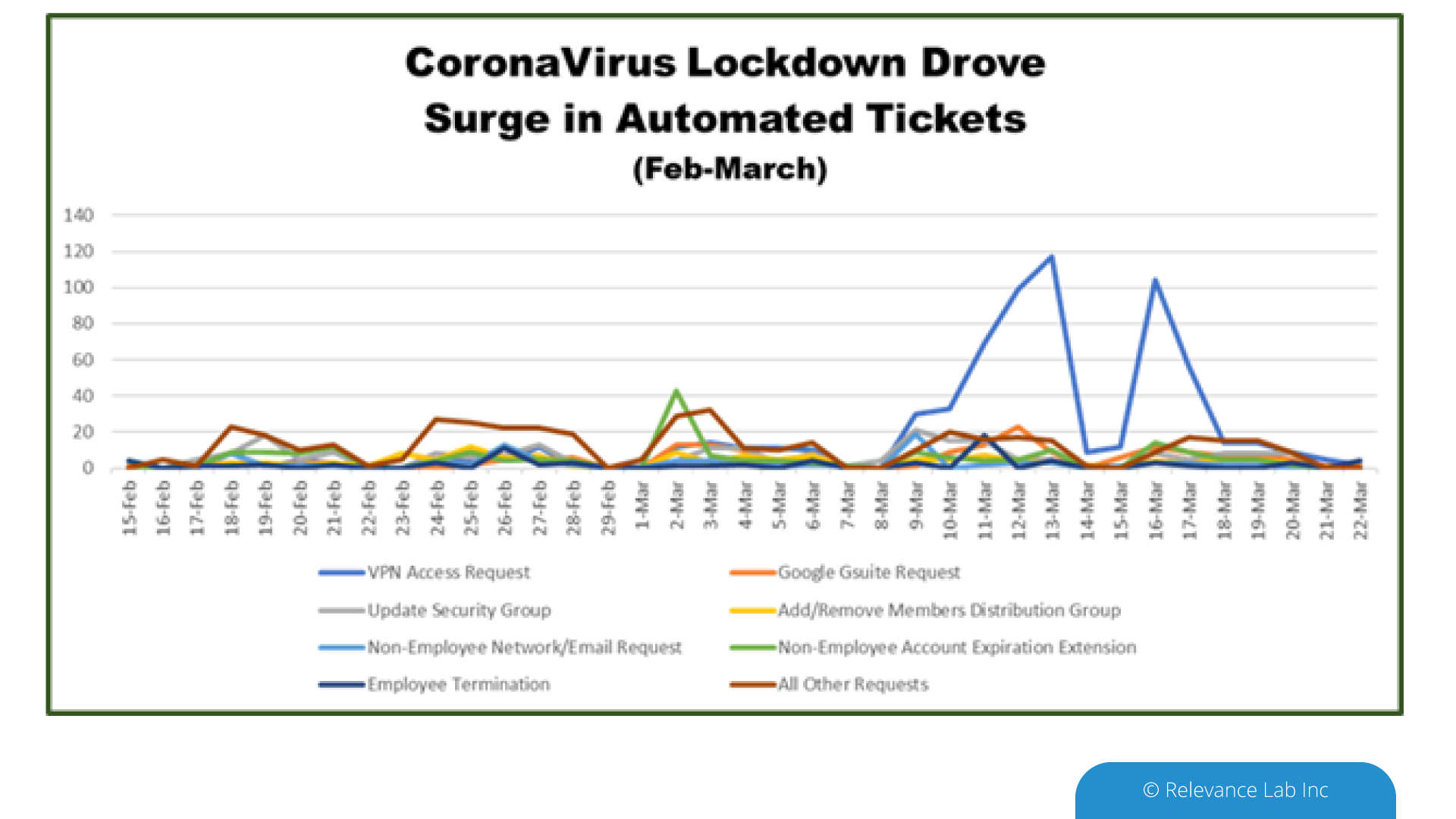

Figure 2: Dramatic Ticket Spike as People Prepared to Work-from-Home
