
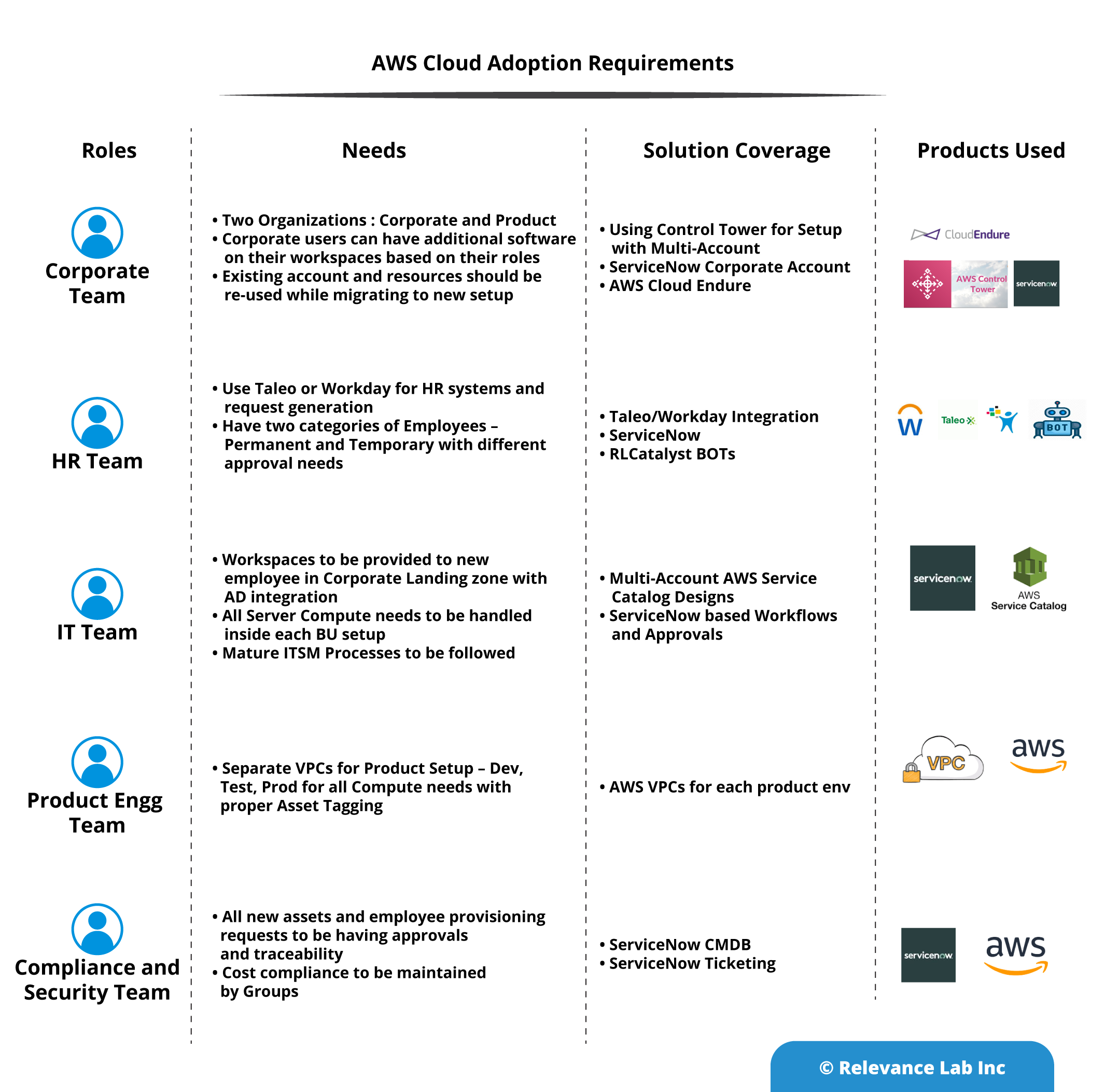

Many AWS customers either integrate ServiceNow into their existing AWS services or set up both ServiceNow and AWS services for simultaneous use. Customers need a near real-time view of their infrastructure and applications spread across their distributed accounts.

This view, commonly referred to as the “Dynamic Application Configuration Management Database (CMDB)” or “Dynamic Assets” view, gives customers integrated visibility into their infrastructure, which helps break down silos and supports better decision making. End users also need an “Application Centric” view rather than an “Infrastructure/Assets” view, because better visibility ultimately improves their experience.

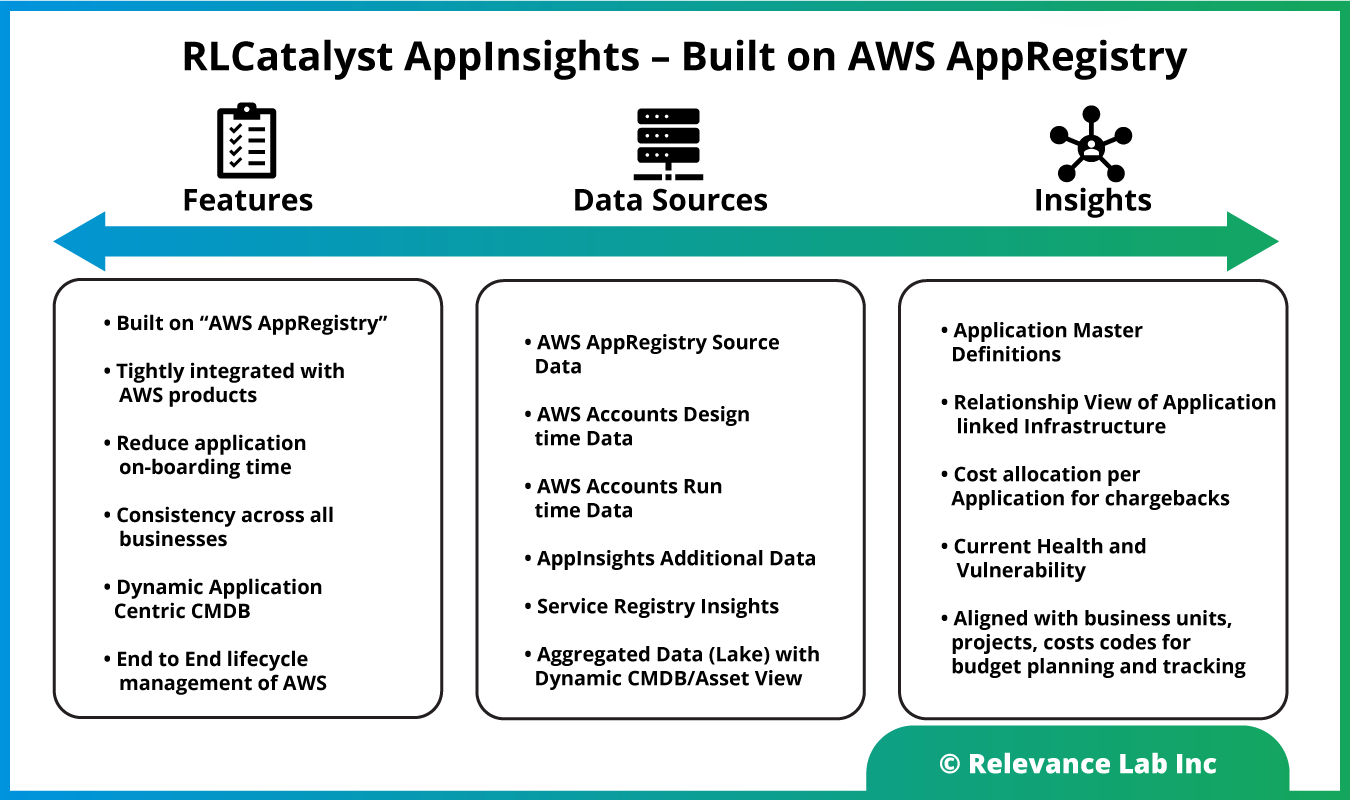

An “Application Centric” view provides the following insights:

- Application master for the enterprise

- Application linked infrastructure currently deployed and in use

- Cost allocation at application levels (useful for chargebacks)

- Current health, issues, and vulnerability with application context for better management

- Better alignment with the existing enterprise context of business units, projects, and cost codes for budget planning and tracking

Use Case benefits for ServiceNow customers

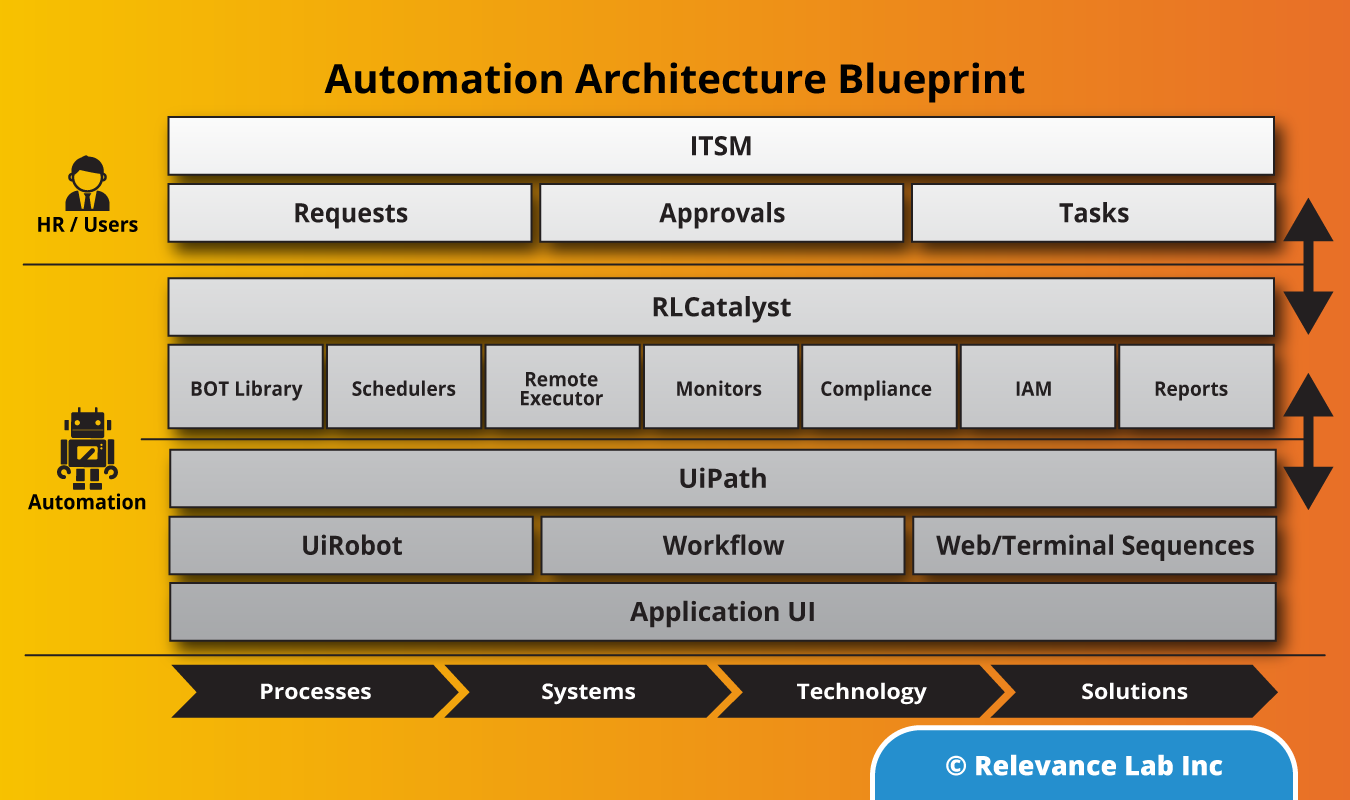

A near real-time view of AWS application and infrastructure workloads across multiple AWS accounts in ServiceNow. The customer enables self-service for their Managed Service Provider (MSP) and their developers to:

- Maintain established ITSM policies & processes

- Enforce Consistency

- Ensure Compliance

- Ensure Security

- Eliminate IAM access to underlying services

Use Case benefits for AWS customers

Enabling application self-service for general & technical Users. The customer would like service owners (e.g. HR, Finance, Security & Facilities) to view AWS infrastructure-enabled applications via self-service while ensuring:

- Compliance

- Security

- Reduced application onboarding time

- Optimal consistency across all businesses

RLCatalyst AppInsights Solution – Built on AppRegistry

Working closely with AWS partnership groups in addressing the key needs of customers, RLCatalyst AppInsights Solution provides a “Dynamic CMDB” solution that is Application Centric with the following highlights:

- Built on “AWS AppRegistry” and tightly integrated with AWS products

- Combines information from the following Data Sources:

  - AWS AppRegistry

  - AWS Accounts

  - Design time Data (Definitions – Resources, Templates, Costs, Health, etc.)

  - Run time Data (Dynamic Information – Resources, Templates, Costs, Health, etc.)

- AppInsights Additional Functionality

  - Service Registry Insights

  - Aggregated Data (Lake) with Dynamic CMDB/Asset View

  - UI Interaction Engine with appropriate backend logic

A well-defined Dynamic Application CMDB is mandatory in cloud infrastructure to track assets effectively and serves as the basis for effective Governance360.

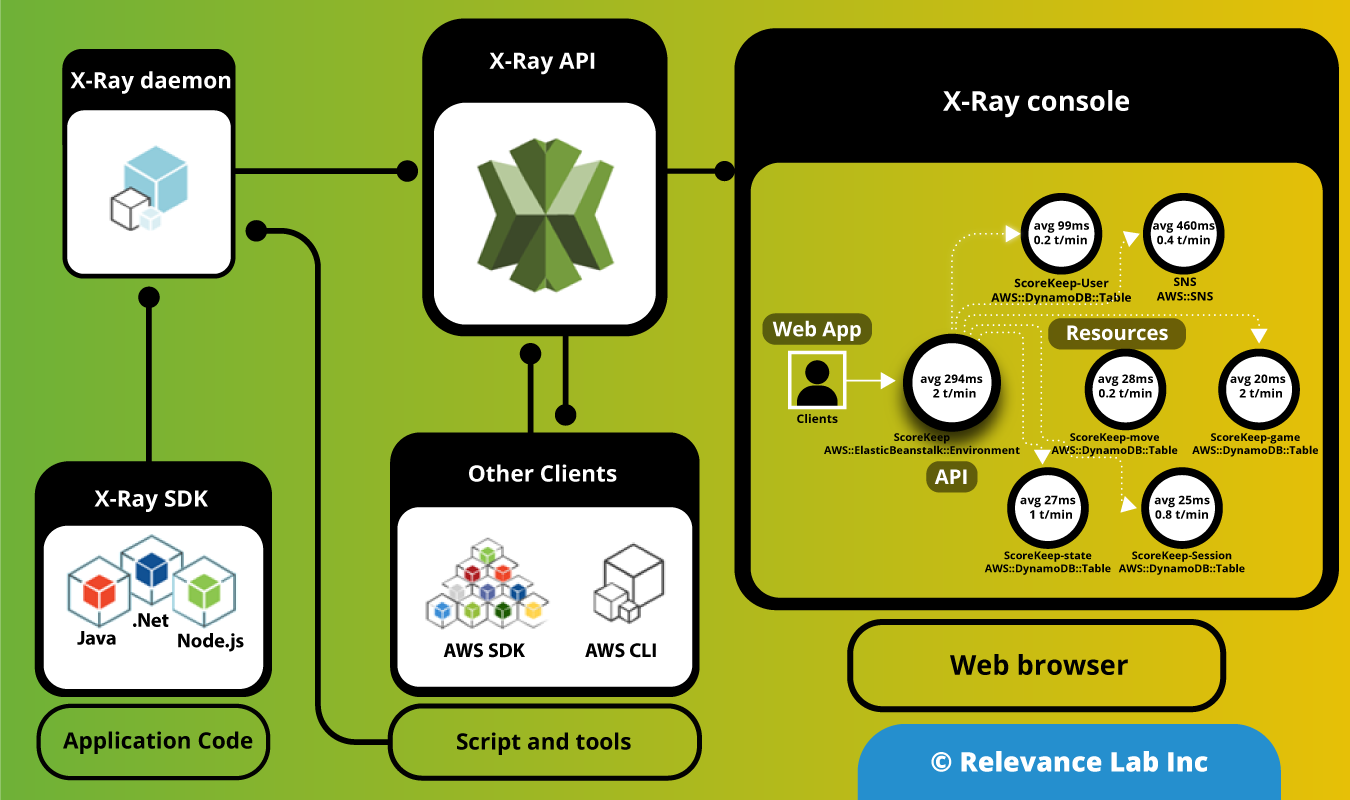

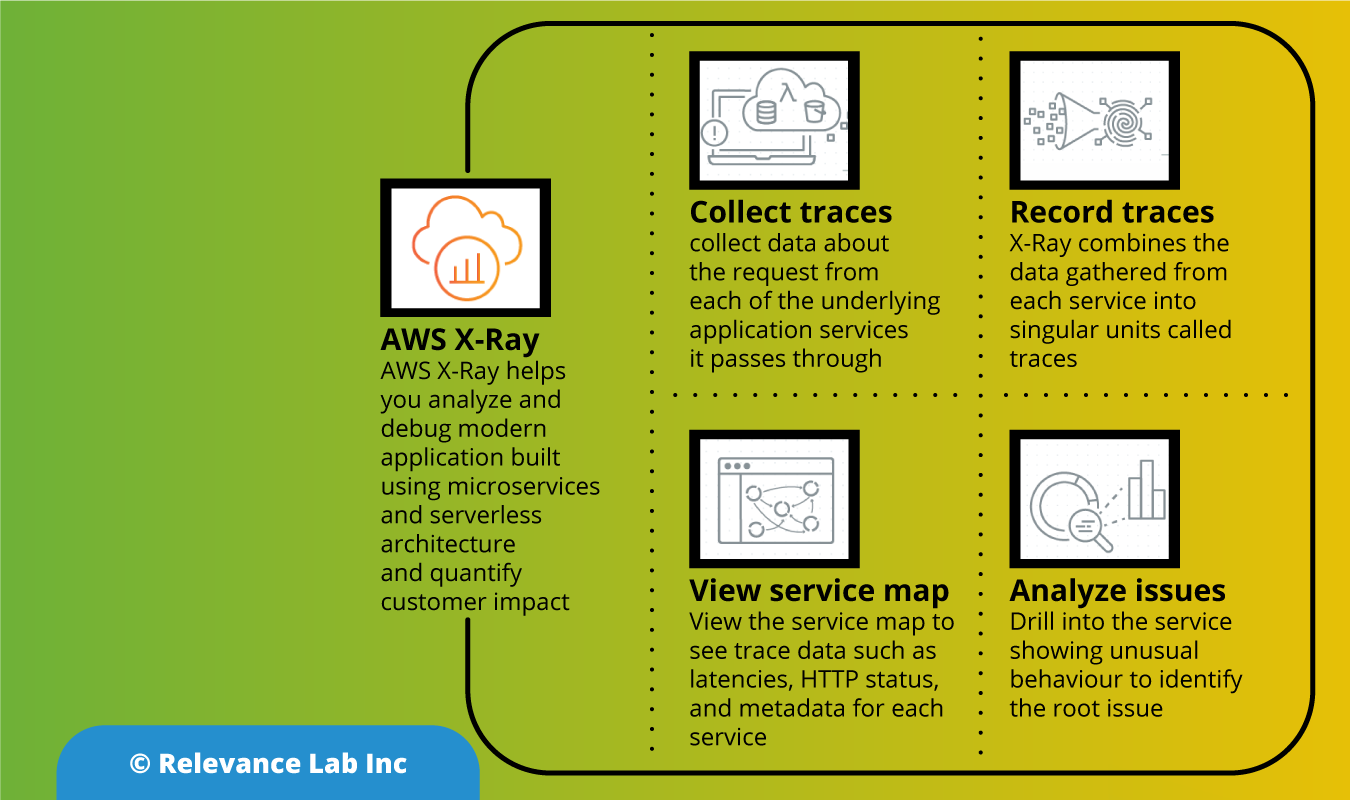

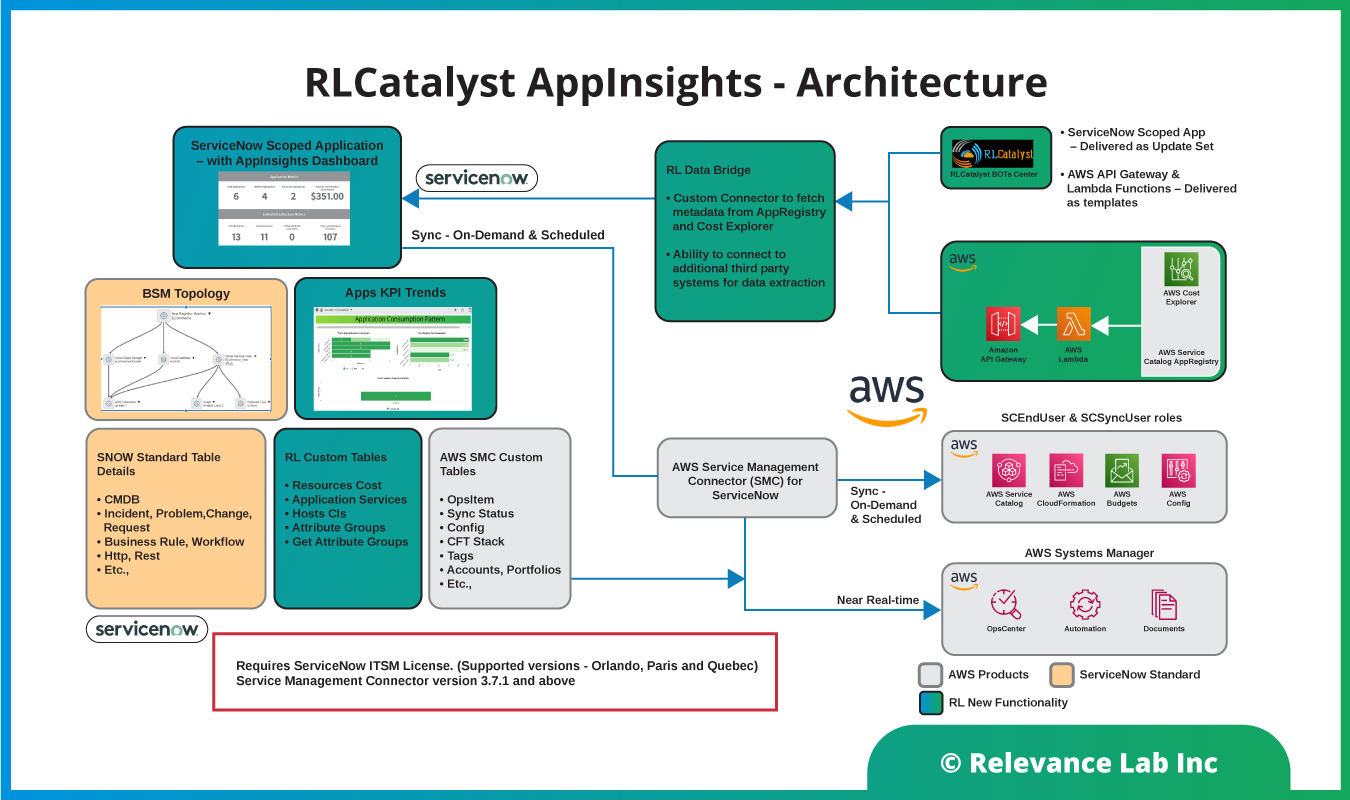

AWS recently released a feature called AppRegistry that helps customers natively build an inventory of AWS resources with insight into how they are used across applications. With AWS Service Catalog AppRegistry, customers can create a repository of their applications and associated resources, and define and manage application metadata. This helps them understand the context of their applications and resources across environments, and gives enterprise stakeholders the information they need to make informed strategic and tactical decisions about cloud resources. Using AppRegistry as the base, we have created AppInsights, a Dynamic Application CMDB solution that benefits AWS and ServiceNow customers, as explained in the figure below.
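
As an illustration of the repository idea described above, the sketch below creates an AppRegistry application and associates CloudFormation stacks with it. It is a minimal example rather than the AppInsights implementation: the helper name `register_application` and its return shape are assumptions made here, and the `client` argument is expected to behave like `boto3.client("servicecatalog-appregistry")`, whose `create_application` and `associate_resource` operations are used.

```python
from typing import Any, Dict, List


def register_application(client: Any, name: str, description: str,
                         stack_names: List[str]) -> Dict[str, Any]:
    """Create an AppRegistry application and link CloudFormation stacks to it.

    `client` is assumed to expose the AppRegistry operations
    create_application and associate_resource (as the boto3 client does).
    This helper and its return shape are hypothetical.
    """
    created = client.create_application(name=name, description=description)
    app = created["application"]

    associated = []
    for stack in stack_names:
        # CFN_STACK is the AppRegistry resource type for CloudFormation stacks.
        result = client.associate_resource(
            application=app["id"],
            resourceType="CFN_STACK",
            resource=stack,
        )
        associated.append(result["resourceArn"])

    return {"application_id": app["id"], "resources": associated}
```

With boto3 installed and credentials configured, the client could be created with `boto3.client("servicecatalog-appregistry")` and passed in. Taking the client as a parameter keeps the helper easy to exercise against a stub.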

Modeling a common customer use case

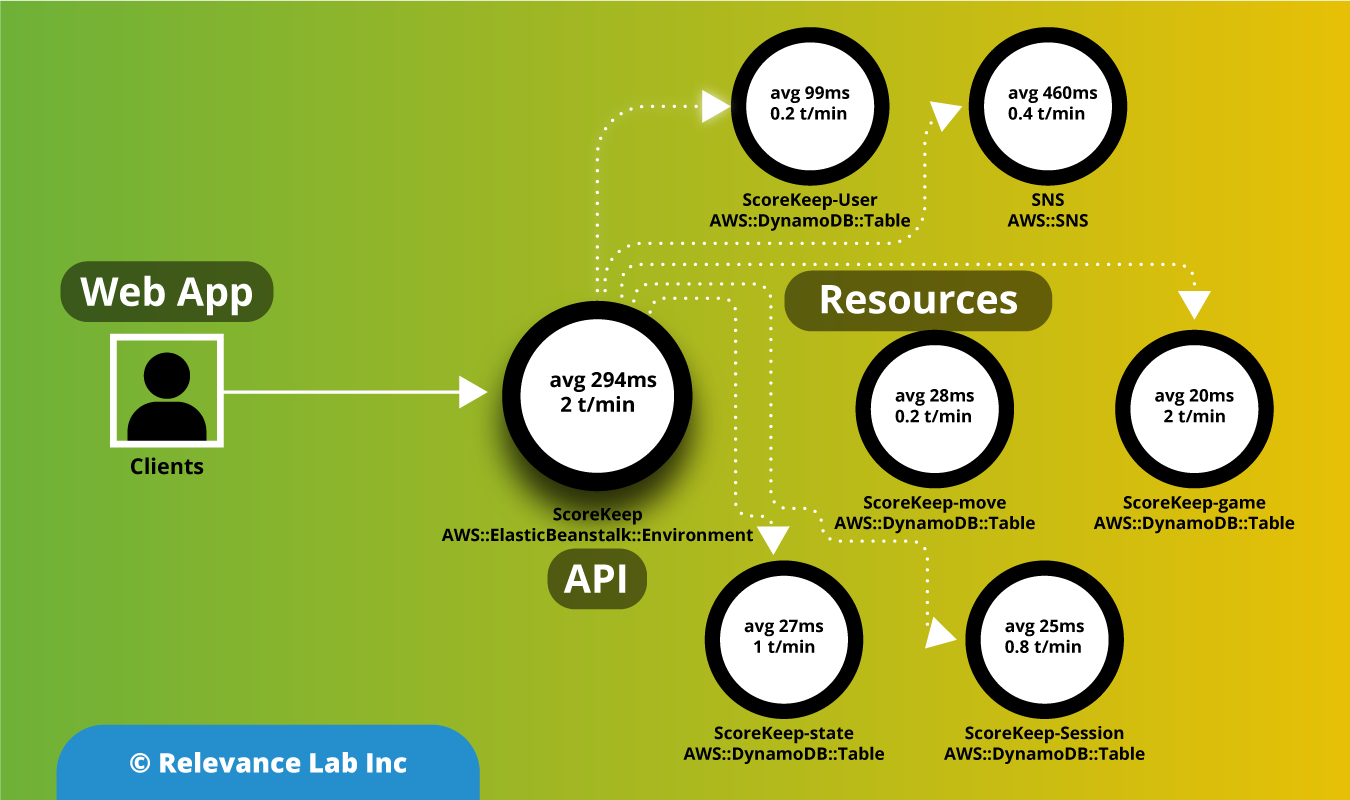

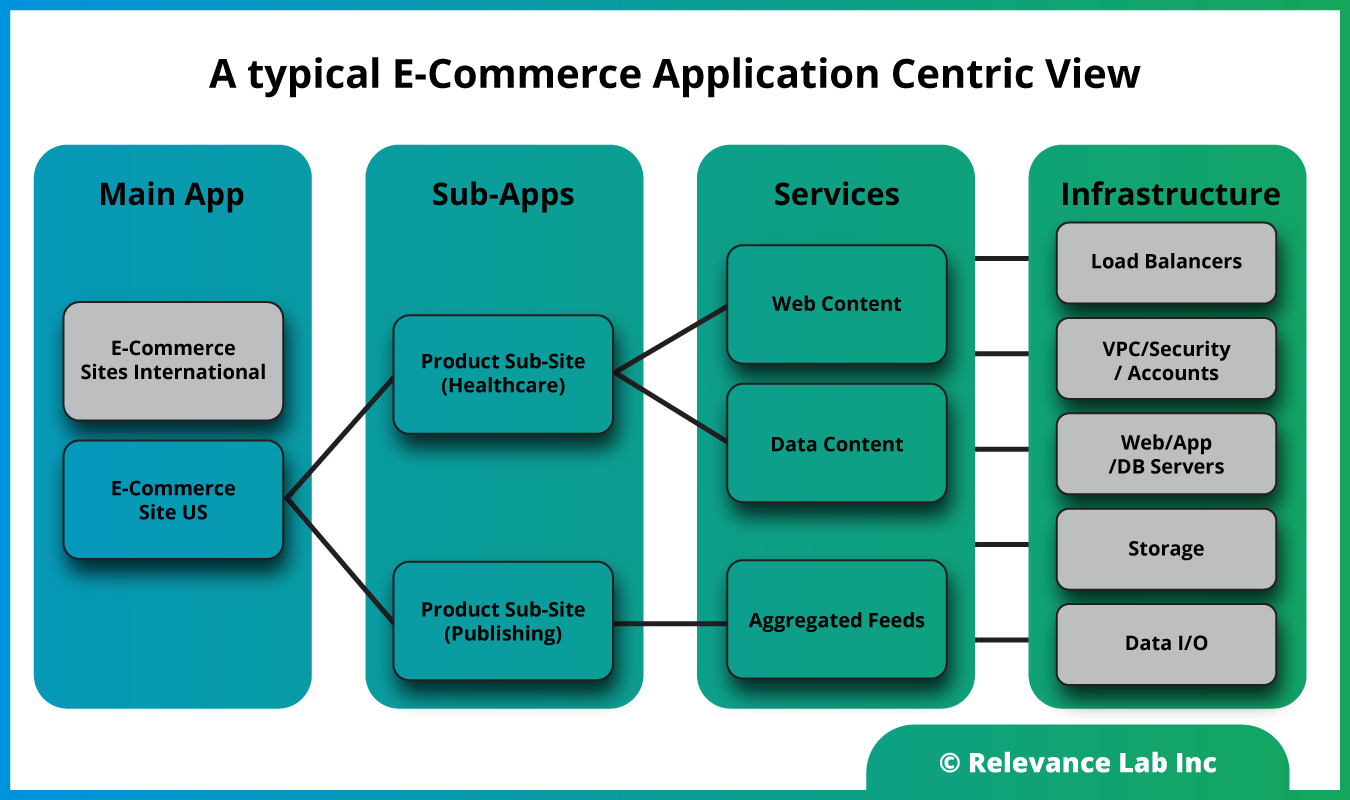

Most customers have multiple applications deployed in different regions, each consisting of sub-applications, underlying web services, and related infrastructure, as explained in the figure below. The dynamic nature of cloud assets and automated provisioning with Infrastructure as Code make discovery, and keeping the CMDB up to date, a non-trivial problem.

As explained above, a typical customer setup consists of different business units deploying applications in different market regions across a complex, hybrid infrastructure. Most existing CMDB applications provide a static asset view that is incomplete and poorly aligned with the growing need for real-time application-centric analysis, cost allocation, and application health insights. The AppInsights solution addresses this problem by leveraging customers' existing investments in ServiceNow ITSM licenses and pre-existing AWS solutions, such as the AWS Service Management Connector, that are available at no additional cost. Until recently, the missing piece was application-centric metadata linking applications to infrastructure templates.

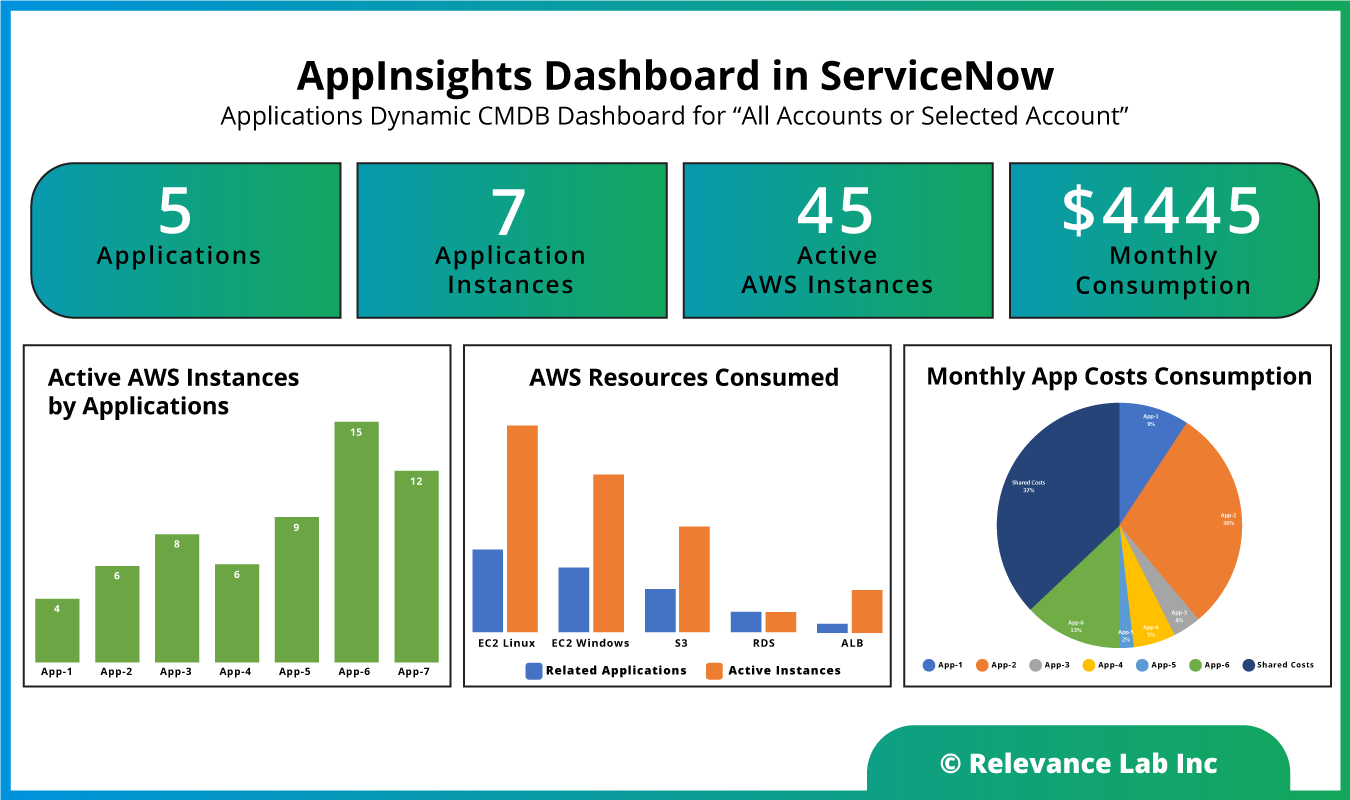

Customers need to see information across their AWS accounts, with details of applications, infrastructure, and costs, in a simple and elegant manner, as shown below. The basic KPIs tracked in the dashboard are the following:

- A dashboard per AWS account (aggregated information across accounts is to be added later)

- Ability to track an Application View with Active Application Instances, AWS Active Resources and Associated Costs

- Trend Charts for Application, Infrastructure and Cost Details

- Drill-down ability to view all applications and their associated active instances, which are updated dynamically through a periodic sync or on demand
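
The per-account KPIs listed above can be sketched as a small aggregation. This is an illustrative example only: the `ResourceRecord` shape, its field names, and the `summarize_account` helper are assumptions made for this sketch, not the AppInsights data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class ResourceRecord:
    """One provisioned AWS resource after a sync (hypothetical shape)."""
    account_id: str
    application: str
    instance_id: str    # one deployed instance of the application
    resource_id: str
    active: bool
    monthly_cost: float


def summarize_account(records: Iterable[ResourceRecord],
                      account_id: str) -> Dict[str, dict]:
    """Per-application KPIs for one account: instances, resources, cost."""
    instances = defaultdict(set)
    summary: Dict[str, dict] = {}
    for rec in records:
        # Only active resources in the requested account count toward KPIs.
        if rec.account_id != account_id or not rec.active:
            continue
        kpi = summary.setdefault(
            rec.application, {"active_resources": 0, "cost": 0.0}
        )
        kpi["active_resources"] += 1
        kpi["cost"] += rec.monthly_cost
        instances[rec.application].add(rec.instance_id)

    # An application instance is active if it owns at least one active resource.
    for app, kpi in summary.items():
        kpi["active_instances"] = len(instances[app])
    return summary
```

Running the same function once per account gives the per-account dashboards. An aggregated cross-account view would drop the account filter.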

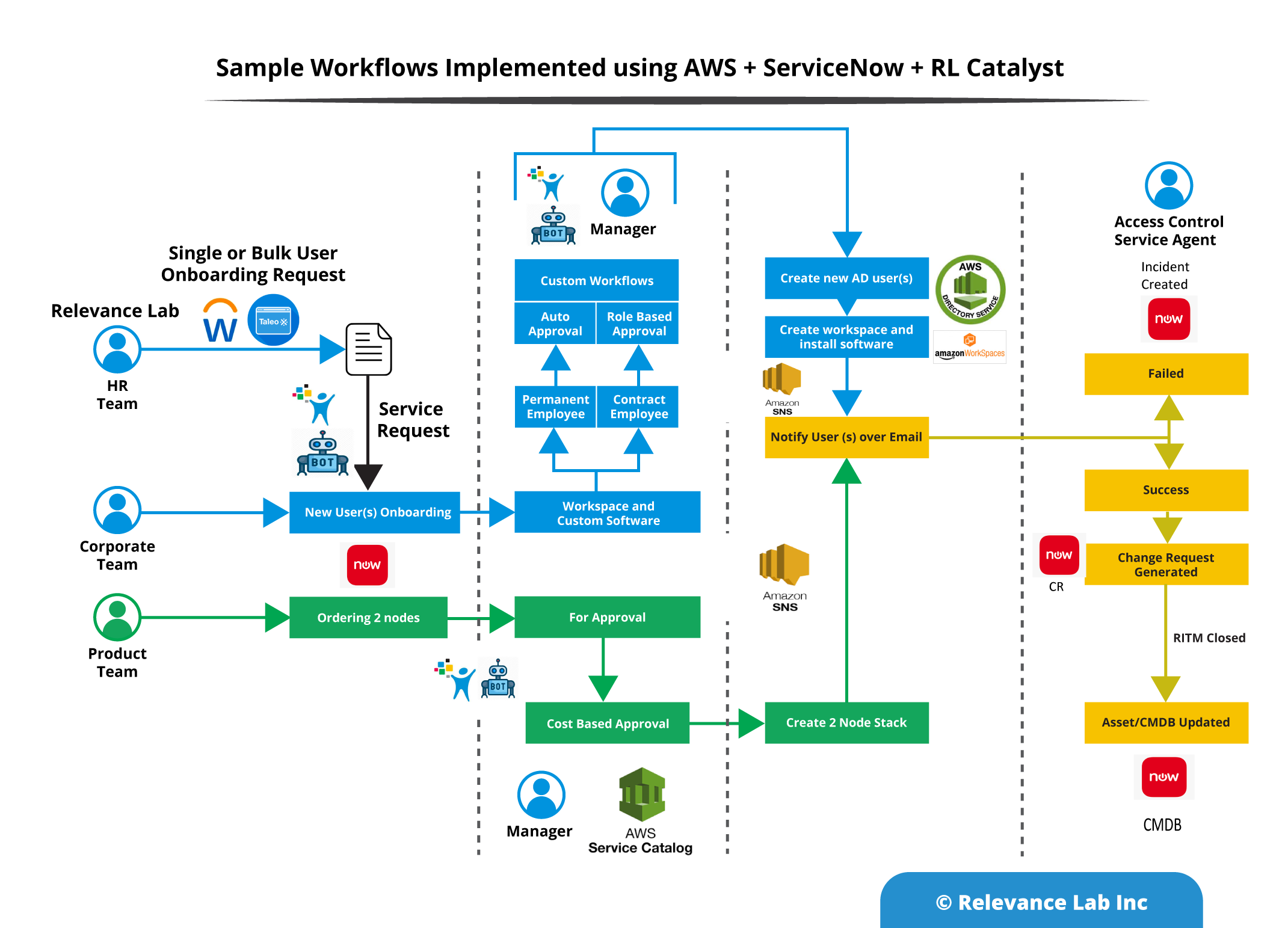

A Dynamic Application CMDB is made possible by following the AWS Well-Architected best practice of “Infrastructure as Code”, relying on AWS Service Catalog, AWS Service Management Connector, AWS CloudFormation Templates, AWS Costs & Budgets, and AWS AppRegistry. The application is built as a scoped application inside ServiceNow and uses the standard ITSM licenses, making it easy for customers to adopt the solution and share it with business users who do not need AWS Console access.

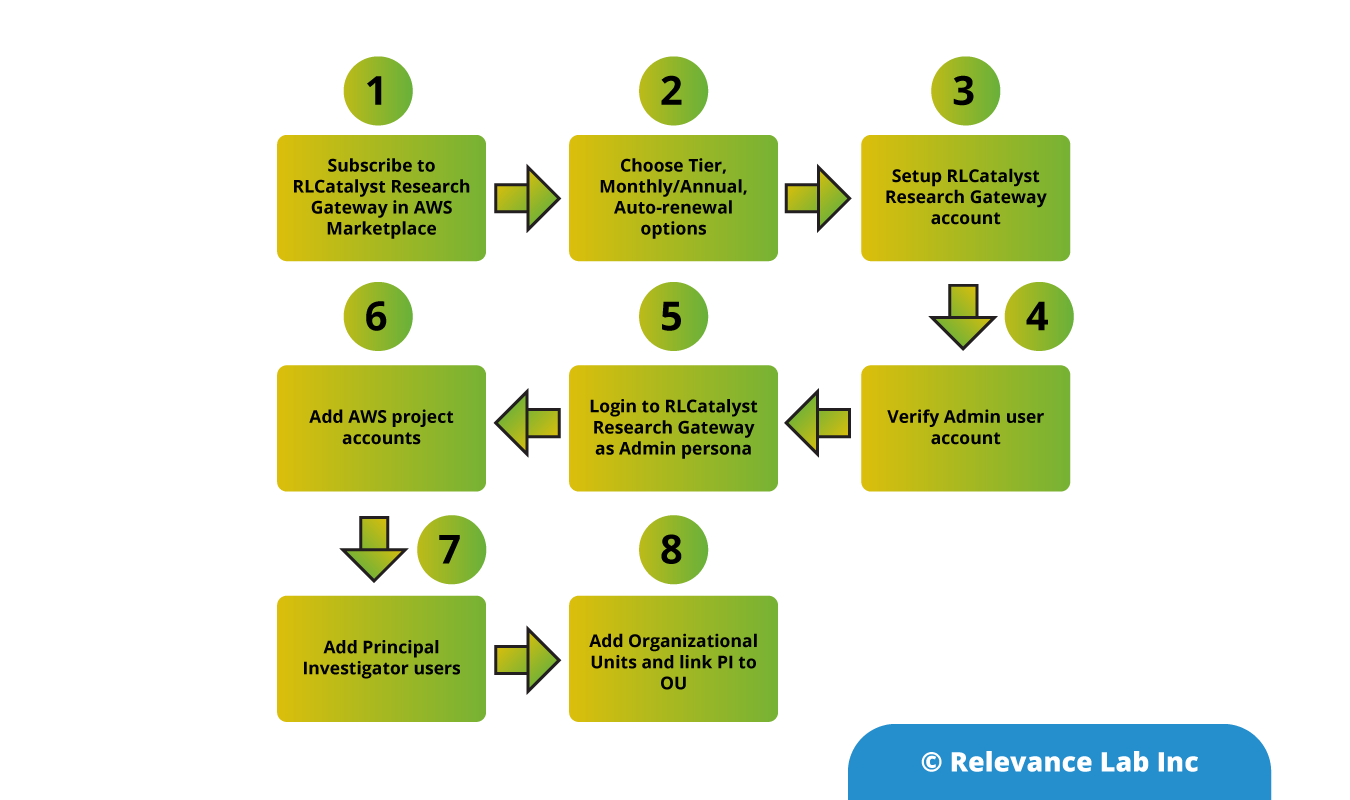

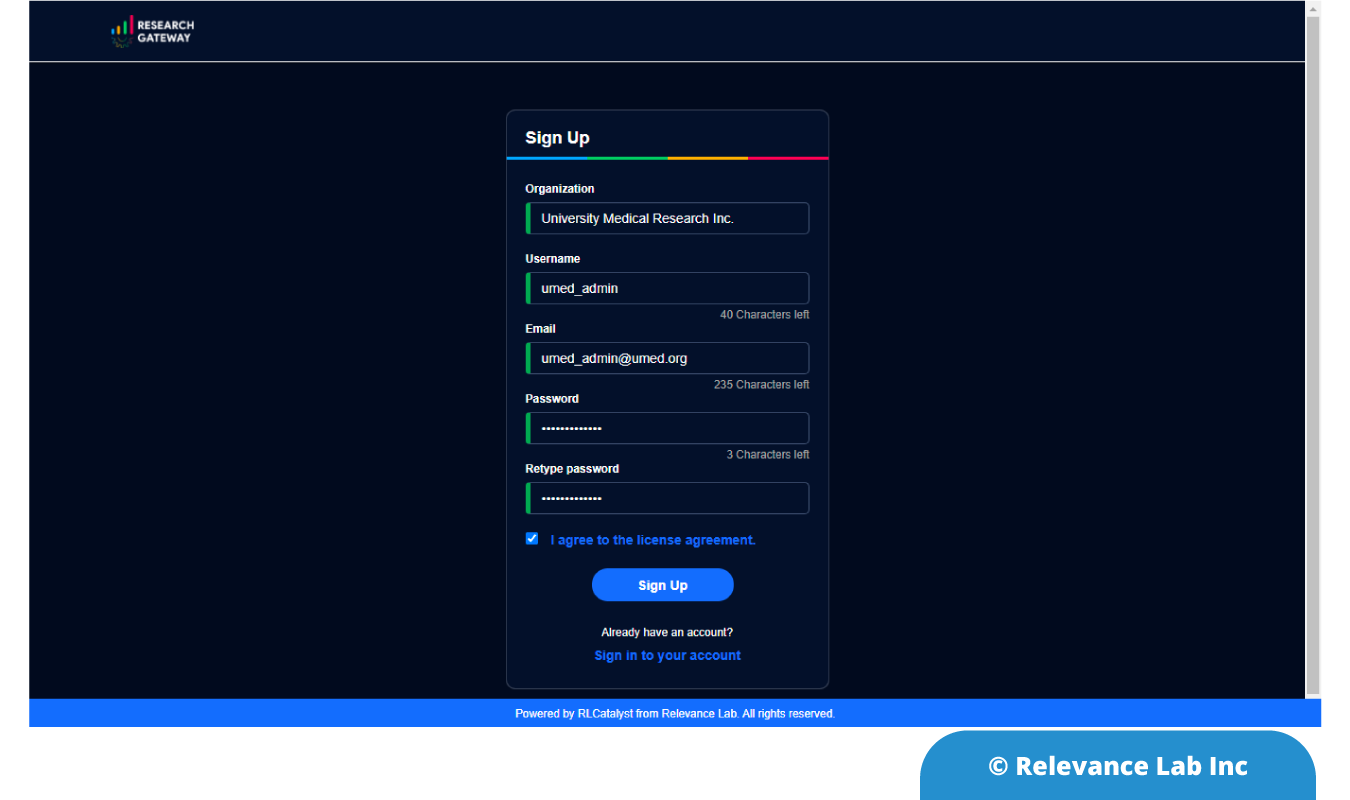

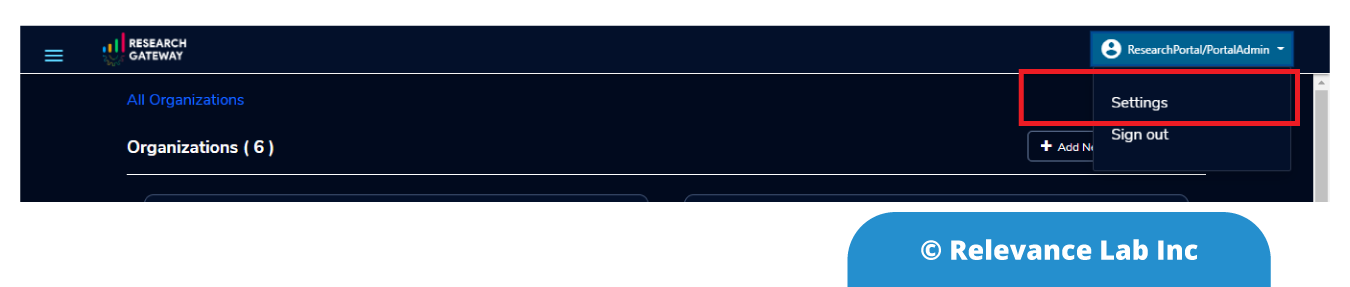

The workflow steps for adopting RLCatalyst AppInsights are explained below. The solution is based on standard AWS and ServiceNow products commonly used in enterprises and builds on existing best practices, processes, and collaboration models.

| Step | Activity | Data Source / Component |
| --- | --- | --- |
| Step-1 | Define AppRegistry Data | Use AppRegistry |
| Step-2 | Link App to Infra Templates – CloudFormation Template (CFT) / Service Catalog (SC) | AWS Accounts Asset Definitions |
| Step-3 | Ensure all Assets Provisioned have App and Service Tagging (Enforce with Guard Rails) | AWS Accounts Asset Runtime Data |
| Step-4 | Register Application Services – Service Registry | Service Registry |
| Step-5 | AppInsights Data Lake refresh with static and dynamic Updates (Aggregated across accounts) | RLCatalyst AppInsights |
| Step-6 | Asset, Cost, Health Dashboard | RLCatalyst AppInsights |
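
Step-3 depends on every provisioned asset carrying application and service tags. A minimal sketch of such a check is shown below. The tag keys `App` and `Service`, the resource dictionary shape, and the `find_untagged` helper are assumptions for illustration, and a real guard rail would more likely be enforced at provisioning time than checked after the fact.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical tag keys; use whatever keys your tagging policy defines.
REQUIRED_TAGS: Tuple[str, ...] = ("App", "Service")


def find_untagged(resources: Iterable[Dict],
                  required: Tuple[str, ...] = REQUIRED_TAGS) -> List[Dict]:
    """Return resources that are missing any required tag (or have it empty)."""
    violations = []
    for res in resources:
        tags = res.get("tags", {})
        missing = [key for key in required if not tags.get(key)]
        if missing:
            violations.append(
                {"resource_id": res["resource_id"], "missing": missing}
            )
    return violations
```

Resources reported here cannot be attributed to an application, so they would fall outside the application-centric asset and cost views until they are tagged.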

A typical implementation of RLCatalyst AppInsights can be rolled out for a new customer in 4-6 weeks and can provide significant business benefits for multiple groups, enabling better operations support, self-service requests, application-specific diagnostics, and asset usage and cost management. The base solution is built on a flexible architecture that allows more advanced customization, such as real-time health and vulnerability mappings, on the path to AIOps maturity. There are plans to extend the Application Centric views to cover more granular “Services” tracking in support of microservice architectures, container-based deployments, and integration with other PaaS/SaaS-based services.

Summary

Cloud-based dynamic assets create great flexibility but add complexity for near real-time asset and CMDB tracking. Existing solutions using Discovery tools and Service Management connectors provided only a partial, infrastructure-centric view of the CMDB; a robust Application Centric Dynamic CMDB was the missing piece, which is now addressed by RLCatalyst AppInsights built on AppRegistry, as explained in this blog.

For more information, feel free to contact marketing@relevancelab.com.

References

Governance360 – Are you using your AWS Cloud “The Right Way”

ServiceNow CMDB

Increase application visibility and governance using AWS Service Catalog AppRegistry

AWS Security Governance for Enterprises “The Right Way”

Configuration Management in Cloud Environments